'Don't let police deploy armed drones or robots,' the Electronic Frontier Foundation warns

The Electronic Frontier Foundation (EFF), a non-profit organization that advocates for free speech and digital rights, has issued a warning that police should not be allowed to deploy armed drones or robots, whether they are autonomous or remote-controlled and whether the weapons they carry are lethal or not.

Don't Let Police Arm Autonomous or Remote-Controlled Robots and Drones | Electronic Frontier Foundation

EFF's concern is that even if such weapons are introduced on the condition that they 'operate only under limited circumstances,' those conditions will eventually be relaxed. In other words, the technology could eventually be used to crack down on legitimate protesters.

Take the 'Stingray,' for example. At first, what the Stingray actually was remained a complete mystery, but when court records were released in 2014, it was revealed to be a device that impersonates a mobile phone base station in order to track phones.

The actual state of the investigation method using 'Stingray' that collects individual identification information and location information of smartphones by pretending to be a mobile phone base station --GIGAZINE

Cops Use StingRay Phone Tracker Against $50 Chicken Wing Thief | eTeknix

https://www.eteknix.com/cops-use-stingray-phone-tracker-50-chicken-wing-thief/

Drones (unmanned aerial vehicles) were originally developed for military purposes, but they were soon adopted by police and fire departments. The first case in which drone surveillance led to an arrest was reported in the United States in 2012. The drone used in that operation is believed to have been the MQ-9 Reaper unmanned aerial vehicle.

First arrest in the US made with the help of surveillance by a flying drone - GIGAZINE

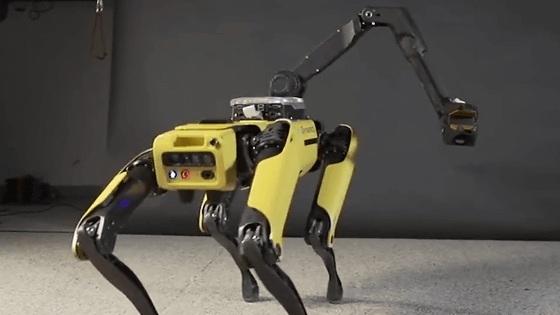

In 2016, 12 police officers were shot in Dallas, five of whom were killed. After a lengthy standoff, police killed the suspect with an explosive delivered by a robot. This robot, of course, was being used for something other than its original purpose of handling bombs.

Dallas Shooting: In An Apparent First, Police Used A Bomb Robot To Kill: The Two-Way: NPR

https://www.npr.org/sections/thetwo-way/2016/07/08/485262777/for-the-first-time-police-used-a-bomb-robot-to-kill

An AI-enhanced autonomous drone that detects human screams in the event of a natural disaster is also reportedly under development. However, EFF has expressed concern that this technology, too, would stray from its intended use and eventually be used by police to crack down on 'screaming protesters.'

Another problem EFF points out is that the technology itself is unreliable. Police have already introduced technologies such as face recognition, gunshot detection, and automatic license plate readers, and all of them produce false positives. These false positives lead to armed officers being dispatched unnecessarily, resulting in unjustified arrests and excessive force on the ground. The harm is even greater when people of color are wrongly identified as crime suspects. If the same kind of failure occurs in an autonomous armed robot that instantly decides, based on its programming, whether to 'seriously injure and stop' or 'kill' someone, innocent civilians will be put at risk.

EFF also raises the question of who would be held responsible if a person were wrongfully injured by a robot or drone. Even today, it is notoriously difficult to hold police accountable for unjustly injuring or killing civilians, and it would be even harder when the decision was made by a robot with automated decision-making.

There is also the risk that these machines could be hacked, whether they are autonomous or remotely operated. Police surveillance cameras already have a 'track record' of being hacked.

EFF said, 'It is much harder to take a technology out of the hands of police than to prevent it from being deployed in the first place. That's why we should push now for legislation that bans police from deploying this kind of technology.'

in Hardware, Posted by logc_nt