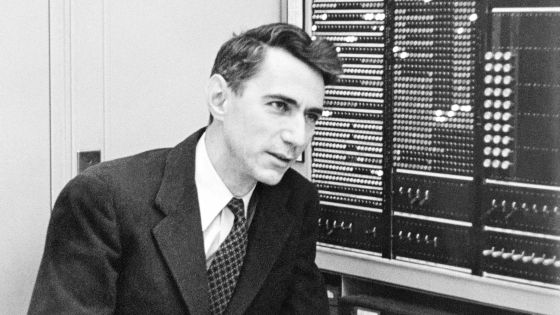

What is the 'information' defined by Claude Shannon, the father of information theory?

Jane Austen's concept of information

https://www.cs.bham.ac.uk/research/projects/cogaff/misc/austen-info.html

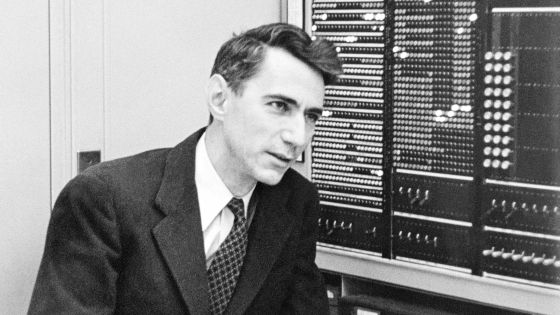

Shannon developed the 'mathematical theory of communication', which expresses information of all kinds as binary values of '0' and '1', and devised the concept of 'entropy' (amount of information).

What is the achievement of Claude Shannon, the father of information theory? --GIGAZINE

According to Mr. Sloman, the 'amount of information' as defined by Shannon is different from the 'scale of the phenomenon' that people imagine. For example, the sentences 'caterpillar ate grass' and 'asteroid destroyed the island' evoke events of very different scales, but both have the form 'noun' 'verb' 'noun'. Because they are built from the same types of words, applying Shannon's theory one could say that 'the amount of information about word types is equal.'

In Shannon's information theory, when messages are composed from a fixed set of signal types, the number of signals determines the amount of information.

For example, a sequence of four signals of two types can represent 2⁴ = 16 different messages, while a sequence of four signals of four types can represent 4⁴ = 256. In this way, according to Shannon's theory, the amount of information grows as the signal sequence gets longer and as the number of signal types increases. If strings of letters such as 'zzxxjalp' and 'azbycxxyrk' are measured this way, 'azbycxxyrk', which has more characters, carries the larger amount of information. However, neither is a meaningful English word.
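The counting above can be sketched in a few lines. This is a minimal illustration, not Sloman's or Shannon's own code: it assumes every possible sequence is equally likely, in which case Shannon's entropy reduces to log2 of the number of possible messages. The function names `message_count` and `capacity_bits` are hypothetical, chosen only for this example.

```python
import math


def message_count(num_types: int, length: int) -> int:
    """Number of distinct sequences of `length` signals drawn from `num_types` signal types."""
    return num_types ** length


def capacity_bits(num_types: int, length: int) -> float:
    """Bits of information if every sequence is equally likely: log2(num_types ** length)."""
    return length * math.log2(num_types)


# Four signals of two types vs. four signals of four types
print(message_count(2, 4), capacity_bits(2, 4))  # 16 4.0
print(message_count(4, 4), capacity_bits(4, 4))  # 256 8.0

# Letter strings over an assumed 26-letter alphabet:
# only the length matters, not whether the string is a real word.
for s in ["zzxxjalp", "azbycxxyrk"]:
    print(s, len(s), round(capacity_bits(26, len(s)), 2))
```

Under this measure any 10-letter string scores higher than any 8-letter string, which is exactly why 'azbycxxyrk' counts as carrying more information despite meaning nothing.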

The words 'bird' and 'crow' each consist of four letters from the 26-letter alphabet, so they have the same amount of information in Shannon's theory. Likewise, the two statements 'Tweety is a bird' and 'Tweety is a crow' have the same amount of information in Shannon's theory. Intuitively, however, the word 'crow' rules out the possibility that Tweety is some bird other than a crow, and Mr. Sloman points out that 'Tweety is a crow' therefore feels more informative.
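A short sketch makes the point concrete, under the same simplifying assumption that every four-letter string over a 26-letter alphabet is equally likely. The helper `letter_bits` and the constant `ALPHABET_SIZE` are hypothetical names for illustration only.

```python
import math

ALPHABET_SIZE = 26  # lowercase English letters only (an assumption for this sketch)


def letter_bits(word: str) -> float:
    """Bits for a letter string, treating every sequence of that length as equally likely."""
    return len(word) * math.log2(ALPHABET_SIZE)


# 'bird' and 'crow' both have four letters, so the measure gives them the same value,
# even though 'crow' tells us more about Tweety than 'bird' does.
print(letter_bits("bird") == letter_bits("crow"))  # True
print(round(letter_bits("bird"), 2))  # 18.8
```

The measure sees only word length and alphabet size; nothing in it can register that 'crow' narrows down which kind of bird Tweety is.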

'In Shannon's information theory, the amount of information is defined by the number of signal types and the number of signals combined, so what we call "meaning" and "content" has nothing to do with Shannon's information.'

Mr. Sloman also cites the use of the word 'information' in 'Pride and Prejudice', published in 1813 by the novelist Jane Austen, who was active in the late 18th and early 19th centuries: 'Do not confuse "information" as used in Austen's novel, which involves aspects such as "meaning" and "content", with "information" in Shannon's information theory.'


in Science, Posted by log1o_hf