How does Facebook 'test its tests'?

A 'test' is run to check the behavior or performance of something. However, a test can itself be good or bad, and it can become obsolete over time, so it is sometimes necessary to evaluate the test itself. Facebook, which operates one of the largest platforms in the world, has explained on its engineering blog how it tests its own tests in-house.

Probabilistic flakiness: How do you test your tests? - Facebook Engineering

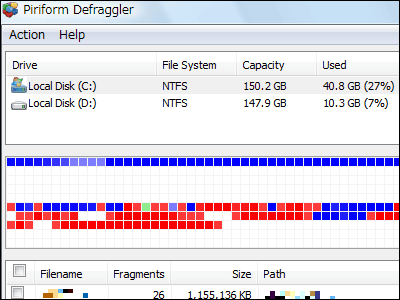

Facebook's codebase changes every day as engineers implement new features and optimize the app around the clock. Facebook runs automated tests to catch regressions in the codebase, but it had no automated way to check whether those automated tests had themselves degraded over time.

Testing the tests is important for verifying how reliable they are, but according to Facebook, academic research on this topic has focused only on binary questions such as 'Which tests are flaky?' and 'Which tests are reliable?' Facebook instead started from the idea that every test is flaky to some degree once it runs in the real world, however well it is written, and designed its test of tests to answer 'How flaky is this test?' To do this, it devised the Probabilistic Flakiness Score (PFS). PFS expresses as a number the probability that a test fails because of factors such as network failures.
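
Because PFS is defined as a probability, the simplest way to put a number on it is to rerun a test many times and count failures. The sketch below is not Facebook's method: it assumes the test is rerun against a revision that is known to be good (so every failure counts as flakiness) and uses a standard Beta-Bernoulli model with a uniform Beta(1, 1) prior. The names `estimate_pfs` and `PfsEstimate` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PfsEstimate:
    alpha: float  # posterior Beta alpha (prior + observed failures)
    beta: float   # posterior Beta beta (prior + observed passes)

    @property
    def mean(self) -> float:
        # Posterior mean of the per-run failure probability
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self) -> float:
        # Variance of a Beta(alpha, beta) distribution
        total = self.alpha + self.beta
        return self.alpha * self.beta / (total * total * (total + 1))


def estimate_pfs(outcomes, prior_alpha=1.0, prior_beta=1.0):
    """Beta-Bernoulli estimate of a test's failure probability.

    outcomes: iterable of bools (True = the run failed), collected by rerunning
    the test against a revision ASSUMED to be good, so every failure is
    attributed to flakiness rather than to a real bug.
    """
    results = list(outcomes)
    failures = sum(1 for failed in results if failed)
    passes = len(results) - failures
    return PfsEstimate(prior_alpha + failures, prior_beta + passes)


# Hypothetical data: 98 reruns on a known-good revision, 2 of which failed
runs = [True] * 2 + [False] * 96
est = estimate_pfs(runs)
print(est.mean)  # → 0.03
```

The prior keeps the estimate away from exactly 0 when a test has never failed, and the variance shrinks as more reruns accumulate, which is one way to express how much a given PFS value can be trusted.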

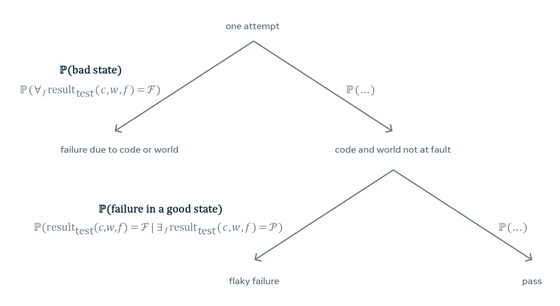

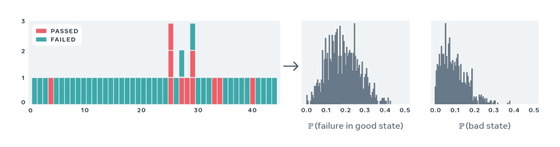

If a test is run only once, its result has to be taken at face value, and there is no way to judge how reliable the test itself is. If the same test is run many times, however, the distribution of its results can reveal the test's inherent unreliability. Facebook reasoned that the probability of a test failing has two components: the probability of failure caused by the code under test or the test environment actually being broken, and the probability of failure even when everything is in a good state (= PFS). To evaluate the test itself, the first component has to be set aside so that only the PFS is estimated.
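
In practice it is rarely known in advance which revisions are good, so the two failure probabilities have to be separated statistically. The following sketch swaps in a simple technique, a two-component binomial mixture fitted with expectation-maximization (EM), and is not the model Facebook describes. It assumes that each revision is either entirely good (the test fails with probability PFS) or entirely broken (the test fails with a higher probability), and that reruns are independent. The function names, initial values, and simulated data are all hypothetical.

```python
import math
import random


def binom_logpmf(k, n, p):
    # log P(k failures in n runs) under Binomial(n, p); p is clamped to avoid log(0)
    p = min(max(p, 1e-12), 1 - 1e-12)
    return (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * math.log(p) + (n - k) * math.log(1 - p))


def fit_pfs_mixture(revisions, iters=200, pfs=0.1, broken_fail=0.8, prior_bad=0.5):
    """EM for a two-state (good / broken) binomial mixture.

    revisions: list of (failures, runs) pairs, one per revision.
    Returns (pfs, broken_fail, prior_bad).
    """
    eps = 1e-12
    for _ in range(iters):
        # E-step: posterior probability that each revision is broken
        resp = []
        for k, n in revisions:
            log_good = math.log(1 - prior_bad) + binom_logpmf(k, n, pfs)
            log_bad = math.log(prior_bad) + binom_logpmf(k, n, broken_fail)
            top = max(log_good, log_bad)
            w_good = math.exp(log_good - top)
            w_bad = math.exp(log_bad - top)
            resp.append(w_bad / (w_good + w_bad))
        # M-step: responsibility-weighted failure rate in each state
        good_fail = sum((1 - r) * k for r, (k, _) in zip(resp, revisions))
        good_runs = sum((1 - r) * n for r, (_, n) in zip(resp, revisions))
        bad_fail = sum(r * k for r, (k, _) in zip(resp, revisions))
        bad_runs = sum(r * n for r, (_, n) in zip(resp, revisions))
        pfs = good_fail / max(good_runs, eps)
        broken_fail = bad_fail / max(bad_runs, eps)
        # Keep the mixing weight strictly inside (0, 1) so the logs stay finite
        prior_bad = min(max(sum(resp) / len(resp), eps), 1 - eps)
    return pfs, broken_fail, prior_bad


def simulate(num_revisions=300, runs=10, true_pfs=0.05, broken_fail=0.9,
             frac_broken=0.2, seed=0):
    # Hypothetical data: each revision is broken with probability frac_broken
    rng = random.Random(seed)
    data = []
    for _ in range(num_revisions):
        p = broken_fail if rng.random() < frac_broken else true_pfs
        failures = sum(rng.random() < p for _ in range(runs))
        data.append((failures, runs))
    return data


pfs, broken_fail, prior_bad = fit_pfs_mixture(simulate())
print(f"estimated PFS: {pfs:.3f}")
```

Only the good-state failure rate is reported as PFS; the broken-state rate and the share of broken revisions are estimated alongside it so that failures caused by real bugs do not inflate the score.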

Facebook uses a statistical modeling environment based on the …

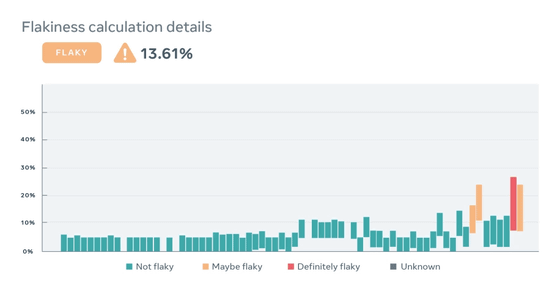

According to Facebook, it has measured PFS continuously since 2018 and built an environment that detects 'when a big change occurred in PFS', which has made it easier to pin down the causes of problems in the tests themselves.
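
Detecting 'when a big change occurred in PFS' is a change-point problem. One standard tool for a stream of pass/fail results is a Bernoulli CUSUM chart, sketched below as an illustration rather than as Facebook's actual mechanism. The function name `bernoulli_cusum`, the baseline rate `p0`, the alarm rate `p1`, and the `threshold` are hypothetical.

```python
import math


def bernoulli_cusum(outcomes, p0, p1, threshold):
    """Return the index of the first run that triggers an alarm, or None.

    outcomes: iterable of bools (True = the run failed), in chronological order.
    p0: failure rate considered normal; p1: higher failure rate to detect.
    """
    if not 0 < p0 < p1 < 1:
        raise ValueError("expected 0 < p0 < p1 < 1")
    # Log-likelihood ratio of one observation under p1 versus p0
    w_fail = math.log(p1 / p0)
    w_pass = math.log((1 - p1) / (1 - p0))
    s = 0.0
    for i, failed in enumerate(outcomes):
        # Accumulate evidence for a shift, never dropping below zero
        s = max(0.0, s + (w_fail if failed else w_pass))
        if s > threshold:
            return i
    return None


# Hypothetical history: ~2% failures for 200 runs, then 25% failures
history = [i % 50 == 0 for i in range(200)] + [i % 4 == 0 for i in range(100)]
print(bernoulli_cusum(history, p0=0.02, p1=0.2, threshold=5.0))  # → 208
```

A smaller `threshold` raises alarms sooner but also more often by chance; choosing `p1` close to the failure rate you actually want to catch makes the chart most sensitive to a shift of that size.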

Facebook says that, as of 2020, PFS has earned credibility within the company and is used to make decisions such as when to end efforts to improve a test's quality.

in Web Service, Posted by darkhorse_log