What GitLab learned from running Kubernetes for a year in the face of challenges

What we learned after a year of GitLab.com on Kubernetes | GitLab

https://about.gitlab.com/blog/2020/09/16/year-of-kubernetes/

From the beginning, GitLab's web service 'GitLab.com' was operated on a virtual machine (VM) in the cloud using the configuration management tool Chef and the self-managed Linux package provided by GitLab itself. That thing. In order to know the inconvenience and joy of users who host GitLab by themselves, the version upgrade of GitLab.com adopted the same method as general users, such as deploying to each server by CI pipeline. However, as the service grew, it couldn't meet its scaling and deployment requirements, so GitLab decided to move to Kubernetes.

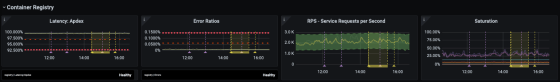

At the time of writing the article, GitLab.com is running on a GKC cluster within a single region. After resolving the high dependency on NFS, which was an obstacle during migration, we routed traffic to the service by functions such as 'website', 'API', 'git remote repository by SSH / HTTPS', and 'registry'. The Helm chart provided by GitLab is used for deployment. GitLab.com itself management is not done on the GitLab.com but, ' gitlab-com ,' ' gitlab-helmfiles ' ' gitlab-com-infrastructure code to three of the repository' is mirrored.

It is said that GitLab has learned the following five things by operating Kubernetes for one year.

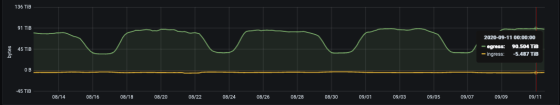

◆ 1: Increased cost for inter-zone communication

Each region of GCP consists of an 'Availability Zone (AZ)', which is a collection of several data centers, and communication between AZs is charged. In the conventional configuration, communication that was not charged within the local host of the VM is performed between pods running in multiple AZs when using Kubernetes, so it may be charged. Especially in GitLab, the amount of downlink traffic reaches 100 TB only in the Git repository, so which traffic is charged is an important factor. Region clusters where pods are generated in multiple AZs are highly available because they utilize multiple zones, but GitLab is considering migrating from region clusters to single zone clusters to reduce costs. is.

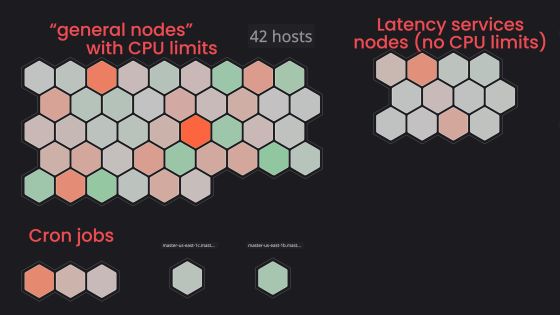

◆ 2: Adjustment of pod requests and limits

The first challenge GitLab experienced when migrating to Kubernetes was that a node's memory constraints pushed a large number of pods to another node. For applications where memory usage increases over time, setting the requests low and limits high will allow the node to have sufficient resources over time, even if sufficient resources are available at the pod generation stage. You may run out of memory. In order to avoid this, it is necessary to set the requests value high and the limits value low so that there is a margin in the resources allocated to the pod. You can find out more about requests and limits by reading the following articles.

◆ 3: Metric collection and logging

A major change in the infrastructure sector during the year of running Kubernetes was improving the way

◆ 4: Traffic switching to new infrastructure

GitLab has a development stage called ' Canary ', where new features and changes are first deployed as a product. The Canary stage of GitLab is mainly used by projects inside GitLab to check if it meets SLO. Even in the infrastructure migration to Kubernetes, first of all, only the traffic from the internal project was switched to the Kubernetes cluster, and after confirming the operation, other traffic was gradually switched. When moving traffic between old and new infrastructures, it's important to be able to easily roll back the environment for the first few days.

◆ 5: Pod that takes a long time to start

When we started migrating jobs that needed to be processed quickly and scaled quickly to Kubernetes, the challenge was that the Jobscheduler Sidekiq service had a pod startup time of as long as two minutes. Kubernetes has a feature called ' Horizontal Pod Autoscaler (HPA) ' that scales the number of pods according to the load of pods, but while this HPA works well for traffic growth, it is a particularly needed resource. In the case of pods that are not even, CPU resources may be exhausted before scaling. Currently, it seems that the pod startup time of the Sidekiq service has been improved to about 40 seconds on average by giving a margin to the number of pods and scaling down later while paying attention to SLO.

After migrating to Kubernetes, GitLab has benefited a lot in terms of application deployment, scaling, and resource allocation. Through improvements to the official Helm chart, he says he can also benefit the average user who hosts GitLab himself.

Related Posts:

in Software, Web Service, Posted by darkhorse_log