What are the features of Google's new natural language processing AI 'T5'? You can even take it on in a trivia quiz

Google's new natural language processing model 'T5' is a machine learning model based on 'transfer learning', a technique in which a model trained in one domain is repurposed for another. It has achieved state-of-the-art scores on many natural language processing benchmarks. Google has explained T5's features and capabilities, and there is also a website where you can challenge T5 to a trivia quiz.

Google AI Blog: Exploring Transfer Learning with T5: the Text-To-Text Transfer Transformer

T5 trivia

https://t5-trivia.glitch.me/

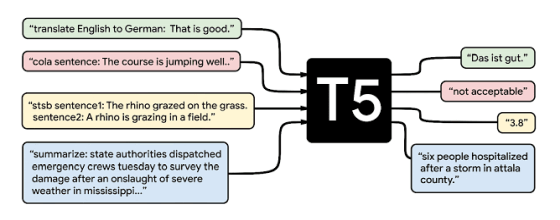

T5, a new natural language processing model, was introduced in the paper 'Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer' published by Google. BERT, which Google also developed, can only output things that are not natural language text, such as class labels or spans of the input. T5, by contrast, recasts every natural language processing task so that its inputs and outputs are always text strings. This lets it flexibly handle tasks such as machine translation and document summarization.
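To make the text-to-text idea concrete, here is a minimal Python sketch that casts translation, grammatical-acceptability classification, and sentence-similarity regression into plain input and target strings. The task prefixes are modeled on the examples Google used when introducing T5, but the helper `to_text_to_text` is hypothetical and the exact prefix strings should be treated as assumptions.

```python
# Minimal sketch: cast several NLP tasks into one text-in, text-out format.
# Task prefixes are modeled on Google's T5 examples; treat them as assumptions.
from typing import List, Tuple


def to_text_to_text(task: str, **fields: str) -> str:
    """Return the model input for `task`; targets are plain strings as well."""
    if task == "translate_en_de":
        return f"translate English to German: {fields['text']}"
    if task == "cola":  # grammatical acceptability, a classification task
        return f"cola sentence: {fields['sentence']}"
    if task == "stsb":  # sentence similarity, a regression task
        return f"stsb sentence1: {fields['sentence1']} sentence2: {fields['sentence2']}"
    if task == "summarize":
        return f"summarize: {fields['document']}"
    raise ValueError(f"unknown task: {task}")


examples: List[Tuple[str, str]] = [
    (to_text_to_text("translate_en_de", text="That is good."), "Das ist gut."),
    (to_text_to_text("cola", sentence="The course is jumping well."), "not acceptable"),
    (
        to_text_to_text(
            "stsb",
            sentence1="The rhino grazed on the grass.",
            sentence2="A rhino is grazing in a field.",
        ),
        "3.8",  # even a numeric score is emitted as text
    ),
]

for source, target in examples:
    print(f"{source!r} -> {target!r}")
```

Because a class label ('not acceptable') or a similarity score ('3.8') is emitted as text too, a single model with a single training objective can handle all of these tasks.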

Supporting the performance of T5 is a dataset called 'C4' (Colossal Clean Crawled Corpus).

c4 | TensorFlow Datasets

https://www.tensorflow.org/datasets/catalog/c4

In developing T5, Google systematically studied how best to build natural language processing models based on transfer learning, and arrived at the following insights. These insights, combined with the large scale of the C4 dataset used for training, led to state-of-the-art scores on many benchmarks.

Model architecture: Encoder-decoder models generally outperformed decoder-only models.

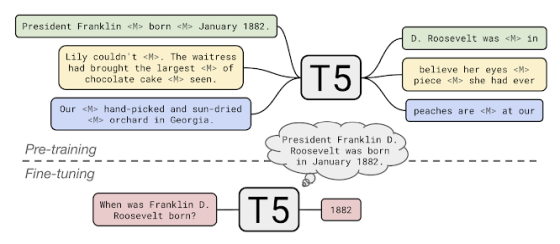
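One rough way to see the architectural difference is through attention masks. In an encoder-decoder model, the encoder reads the whole input with a fully visible mask, while a decoder-only language model processes input and output alike with a causal mask, so each position sees only earlier positions. The sketch below builds both masks as nested lists; it is a simplified illustration, not T5's implementation.

```python
# Simplified sketch of the attention masks behind the two architectures.
# mask[i][j] == 1 means position i is allowed to attend to position j.
from typing import List

Mask = List[List[int]]


def fully_visible_mask(n: int) -> Mask:
    """Encoder side of an encoder-decoder: every input position sees all others."""
    return [[1] * n for _ in range(n)]


def causal_mask(n: int) -> Mask:
    """Decoder-only language model: position i sees only positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


for row in causal_mask(4):
    print(row)
# [1, 0, 0, 0]
# [1, 1, 0, 0]
# [1, 1, 1, 0]
# [1, 1, 1, 1]
```

In the encoder-decoder setup the decoder still applies a causal mask to its own outputs, but it can also attend to the fully visible encoding of the input, whereas a decoder-only model has to read the input causally as well.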

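Pre-training objective: Fill-in-the-blank-style objectives, in which the model learns to restore words that have been blanked out of an incomplete sentence, performed best, and computational cost was the most important factor in choosing among them.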
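The fill-in-the-blank objective can be sketched as follows: spans of the input are replaced by unique sentinel tokens, and the target lists each sentinel followed by the words it hid. This minimal Python version takes the spans as an argument instead of sampling them randomly, and splits on whitespace instead of using a real tokenizer. The `<extra_id_N>` sentinel names follow the convention of the released T5 vocabularies, and the sentence is similar to the one the T5 paper uses to illustrate the objective.

```python
# Minimal sketch of a span-corruption (fill-in-the-blank) training pair.
# Spans are passed in rather than sampled, and whitespace splitting stands in
# for a real tokenizer; sentinel names follow the "<extra_id_N>" convention.
from typing import List, Sequence, Tuple


def span_corrupt(tokens: List[str], spans: Sequence[Tuple[int, int]]) -> Tuple[str, str]:
    """Replace each (start, end) span (end exclusive, sorted, non-overlapping)
    with a sentinel and return the (input, target) strings."""
    inputs: List[str] = []
    targets: List[str] = []
    cursor = 0
    for k, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{k}>"
        inputs.extend(tokens[cursor:start])  # keep the text before the span
        inputs.append(sentinel)              # mark where the span was removed
        targets.append(sentinel)             # target names the blank first
        targets.extend(tokens[start:end])    # then lists the hidden words
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # closing sentinel ends the target
    return " ".join(inputs), " ".join(targets)


words = "Thank you for inviting me to your party last week.".split()
src, tgt = span_corrupt(words, [(2, 4), (8, 9)])
print(src)  # Thank you <extra_id_0> me to your party <extra_id_1> week.
print(tgt)  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

Because the target contains only the hidden spans rather than the whole sentence, targets stay short, which is one reason this style of objective is cheap to train on.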

Unlabeled datasets: Pre-training on small datasets can lead to harmful overfitting.
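The overfitting risk follows from simple arithmetic: with a fixed pre-training budget, a smaller dataset has to be cycled through more times. The sketch assumes a budget of 2^35 tokens (about 34 billion, roughly the length of T5's baseline pre-training run) and a few illustrative dataset sizes.

```python
# Back-of-the-envelope sketch: how often a dataset repeats under a fixed token budget.
PRETRAIN_TOKENS = 2**35  # assumed budget, roughly T5's baseline pre-training length


def repeats_needed(dataset_tokens: int, budget: int = PRETRAIN_TOKENS) -> float:
    """Number of passes over the dataset needed to spend the whole budget."""
    return budget / dataset_tokens


for exponent in (35, 29, 27, 25, 23):  # illustrative dataset sizes of 2**exponent tokens
    print(f"2^{exponent} tokens: repeated {repeats_needed(2**exponent):g}x")
```

Seeing the same text thousands of times invites memorization rather than generalization, which is the harmful overfitting that small pre-training datasets can cause.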

Training strategy: In multitask learning, how often the model is trained on each task has to be chosen carefully.
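Choosing how often each task is trained on amounts to setting sampling rates for a mixture of datasets. The sketch below shows two schemes of the kind compared in the T5 paper: examples-proportional mixing, where each task is sampled in proportion to its size but large datasets are capped at a limit, and temperature-scaled mixing, which raises those rates to the power 1/T and renormalizes so that small tasks get a larger share. The task names, sizes, and limit are made-up values.

```python
# Sketch of two multitask mixing schemes; task names and sizes are made up.
from typing import Dict


def examples_proportional(sizes: Dict[str, int], limit: int) -> Dict[str, float]:
    """Sample each task in proportion to its size, capping large datasets at `limit`."""
    capped = {task: min(n, limit) for task, n in sizes.items()}
    total = sum(capped.values())
    return {task: n / total for task, n in capped.items()}


def temperature_scaled(sizes: Dict[str, int], limit: int, temperature: float) -> Dict[str, float]:
    """Raise proportional rates to 1/temperature and renormalize (T > 1 flattens the mix)."""
    base = examples_proportional(sizes, limit)
    scaled = {task: r ** (1.0 / temperature) for task, r in base.items()}
    total = sum(scaled.values())
    return {task: r / total for task, r in scaled.items()}


sizes = {"translation": 4_000_000, "qa": 90_000, "classification": 8_000}
for name, rates in [
    ("proportional", examples_proportional(sizes, limit=2**19)),
    ("temperature T=2", temperature_scaled(sizes, limit=2**19, temperature=2.0)),
]:
    print(name, {task: round(r, 3) for task, r in rates.items()})
```

With T = 1 the two schemes coincide. Raising T pushes the mixture toward equal sampling, which can help low-resource tasks but may cause them to be overfit, hence the need for care.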

Scale: Google compared making the pre-trained model larger, training it for longer, and ensembling more models, to determine the most efficient way to use a limited compute budget.

T5 has two innovative capabilities. The first is 'closed-book question answering', that is, answering questions without being handed any reference text. In the usual setup, T5 is given a question together with background text and is trained to find the answer in that text. For example, given the question 'When did Hurricane Connie occur?' and the Wikipedia article about Hurricane Connie, T5 is trained to find the date 'August 3, 1955' in the article. In the closed-book setting, however, T5 has no access to external data and must answer using only the 'knowledge' it absorbed from the data it saw during pre-training.

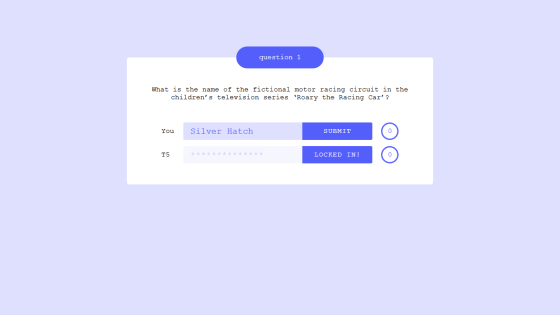
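The difference between the usual reading-comprehension setup and closed-book question answering shows up directly in what the model is fed. In this sketch the open-book input carries the article and the answer must be a span of it, while the closed-book input carries only the question. The `question: ... context: ...` layout resembles the format T5 uses for reading comprehension, but both layouts, the helper names, and the placeholder article text are assumptions for illustration.

```python
# Illustrative contrast between open-book (reading comprehension) and closed-book QA.
# Prompt layouts, helper names, and the article text are placeholders, not T5's spec.
from typing import Tuple


def open_book_example(question: str, context: str, answer: str) -> Tuple[str, str]:
    """Reading comprehension: the model sees the context; the answer is a span of it."""
    if answer not in context:
        raise ValueError("in this setup the answer must appear in the context")
    return f"question: {question} context: {context}", answer


def closed_book_example(question: str, answer: str) -> Tuple[str, str]:
    """Closed-book: only the question is given; the answer must come from knowledge
    stored in the model's parameters during pre-training."""
    return f"question: {question}", answer


QUESTION = "When did Hurricane Connie occur?"
ANSWER = "August 3, 1955"
# Placeholder standing in for the Wikipedia article on Hurricane Connie.
ARTICLE = "[article text] ... August 3, 1955 ... [more article text]"

print(open_book_example(QUESTION, ARTICLE, ANSWER))
print(closed_book_example(QUESTION, ANSWER))
```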

You can actually challenge T5 to a trivia quiz at the following site.

T5 trivia

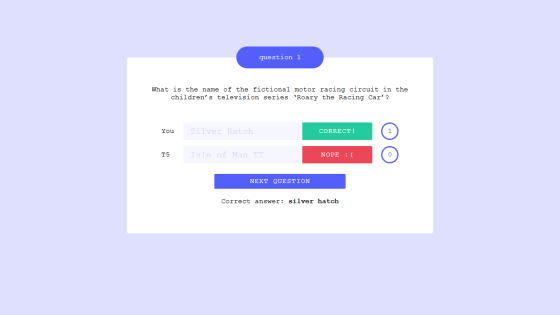

One question asked, 'What is the name of the fictional race circuit that appears in Roary the Racing Car?' The answer is 'Silver Hatch', a name that combines the real British circuits 'Silverstone' and 'Brands Hatch'...

T5 incorrectly answered '

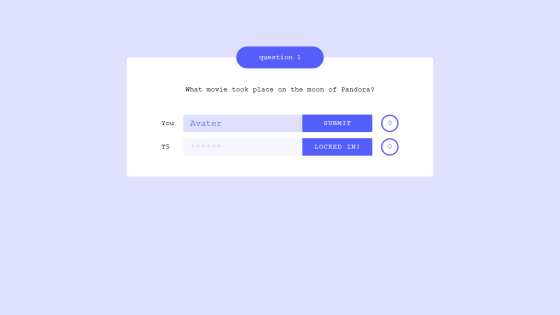

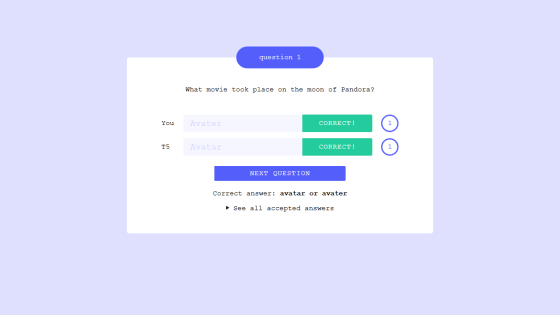

The answer to the question 'What movie is set on Pandora?' is 'Avatar'.

T5 got this one right.

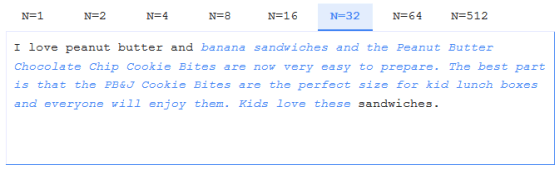

The second innovative capability is 'fill-in-the-blank generation that adapts to the size of the blank': given a blank and a requested size N, T5 fills the blank with roughly N words.

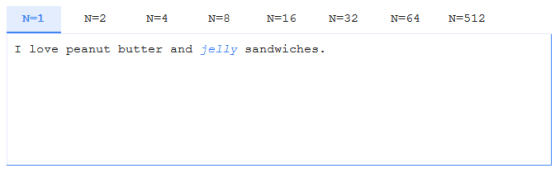

With N = 1, T5 produced 'I love peanut butter and jelly sandwiches.'

With N = 32, it produced 'I love peanut butter and banana sandwiches and the Peanut Butter Chocolate Chip Cookie Bites are now very easy to prepare. The best part is that the PB & J Cookie Bites are the perfect size for kid lunch boxes and everyone will enjoy them. Kids love these sandwiches.' Choosing a larger blank produces longer text.
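Training data for sized fill-in-the-blank can be built by cutting a run of words out of a sentence and replacing it with a placeholder that states how many words are missing. This is a hypothetical sketch: the `_N_` placeholder and the `sized_blank` helper are illustrative assumptions, not the format T5 actually uses.

```python
# Hypothetical sketch: build a "sized fill-in-the-blank" (input, target) pair.
# The "_N_" placeholder format is an illustrative assumption.
from typing import List, Tuple


def sized_blank(words: List[str], start: int, length: int) -> Tuple[str, str]:
    """Cut words[start:start + length] out and mark the gap with its word count."""
    if length < 1 or start < 0 or start + length > len(words):
        raise ValueError("blank must cover at least one word inside the sentence")
    missing = words[start:start + length]
    prompt = words[:start] + [f"_{length}_"] + words[start + length:]
    return " ".join(prompt), " ".join(missing)


words = "I love peanut butter and jelly sandwiches.".split()
print(sized_blank(words, 5, 1))  # ('I love peanut butter and _1_ sandwiches.', 'jelly')
print(sized_blank(words, 2, 4))  # ('I love _4_ sandwiches.', 'peanut butter and jelly')
```

At generation time, putting a larger N in the placeholder asks the model for a longer fill, as in the N = 32 example.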

The source code for T5 is published on GitHub, and anyone can use it.

GitHub-google-research / text-to-text-transfer-transformer: Code for the paper 'Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer'

https://github.com/google-research/text-to-text-transfer-transformer


in Software, Posted by darkhorse_log