Developer explains the secrets of Adobe's “Adobe Sensei”, the AI behind image search so capable it makes you ask “Can image search really go this far?”

In recent years, artificial intelligence (AI) has been used in many places for software development, and it can be used

Adobe Sensei

https://www.adobe.com/en/sensei.html

The man on the left is Scott Prevost, vice president of engineering at Adobe Sensei, and the man on the right is Kreg Carrieka from the US PR team.

Adobe Sensei has a different purpose from so-called “general AI”. Adobe has decades of experience in image and video processing, and Adobe Sensei is an AI that specializes in this area of expertise.

“Every AI model must have a specific use case in mind,” Prevost said. From its past experience, Adobe has unique data that no other company has about how people create their work. Other companies may have image data as “results”, but because Adobe also has data on the “process” by which works are made, it can apparently train AI in ways that other companies cannot.

“Fully developed science and technology is indistinguishable from magic,” said science fiction writer

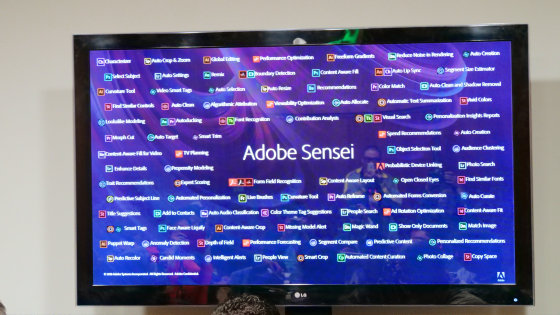

Adobe Sensei is a generic term for AI in Adobe software. Broadly speaking, it is active in three areas: “Creative Intelligence”, “Content Intelligence”, and “Experience Intelligence”.

“Creative Intelligence” aims to support creativity. According to earlier research, half of the time people spend on creative work actually goes to non-creative tasks. Creative Intelligence helps with these “non-creative tasks”, such as repetitive and simple work.

“Content Intelligence” understands content deeply at a conceptual level and automatically tags and evaluates assets and adds information to them. The third area, “Experience Intelligence”, optimizes personalization of the customer experience. It identifies how marketing measures contribute to achieving goals and improves the quality of the customer experience.

Adobe Sensei technology is built into dozens of Adobe software products. For example, “

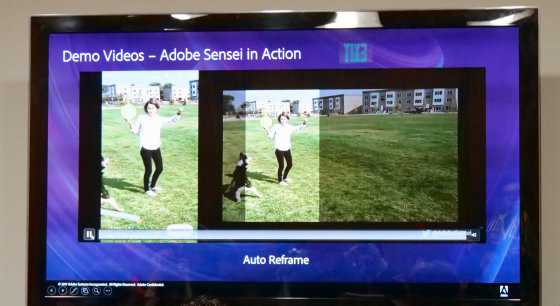

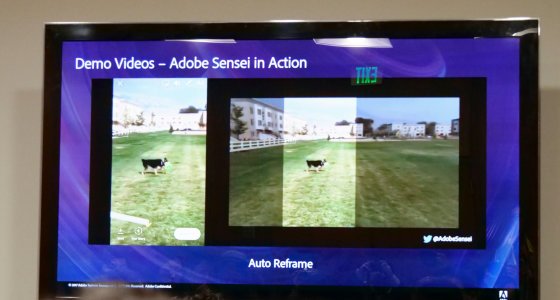

Until now, Adobe has focused on AI for images, but in recent years it has turned its attention to AI for video. In particular, Prevost and his team spent a lot of time on the Auto Reframe function in Adobe Premiere Pro CC. When content shot for 16:9 is edited into a square or vertical format, simply cropping to the center can leave the subject awkwardly framed, but Auto Reframe detects the moving subject and reframes the shot so that it looks natural. For example, in the case of the following landscape image, first only the area around the woman is cut out to make a portrait image.

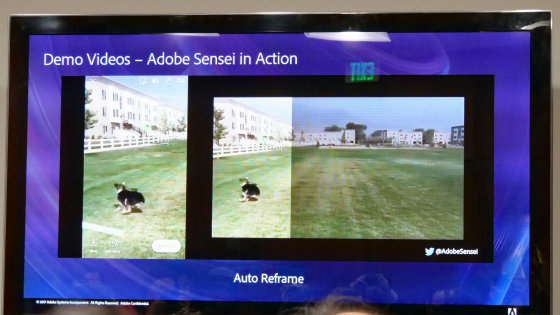

When the woman moves out of the frame, Auto Reframe tracks the dog that is running around and crops the frame around it.

The cropped region changes as the dog moves.
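The core geometric step in this kind of reframing can be sketched in a few lines. This is only an illustration under simple assumptions, not Adobe's implementation: the subject's horizontal position is taken as already known for each frame, and the hypothetical helpers `crop_window` and `smooth` just keep a full-height crop centered on the subject without leaving the source frame.

```python
def crop_window(subject_x, frame_w, frame_h, target_aspect):
    """Return (left, right) of a full-height crop centered on the subject.

    target_aspect is output width / height, e.g. 1.0 for square video.
    All names here are illustrative, not part of any Adobe API.
    """
    crop_w = min(frame_w, int(frame_h * target_aspect))
    left = round(subject_x - crop_w / 2)
    # Clamp so the window never leaves the source frame.
    left = max(0, min(left, frame_w - crop_w))
    return left, left + crop_w


def smooth(positions, alpha=0.2):
    """Exponentially smooth subject positions so the crop does not jitter."""
    smoothed = []
    current = positions[0]
    for x in positions:
        current = alpha * x + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


# A 1920x1080 frame cropped to a square while the subject moves to the right.
windows = [crop_window(x, 1920, 1080, 1.0) for x in (100, 960, 1900)]
```

Here the window follows the subject in the middle of the frame and stops at the edges, which is why the cropped region shifts as the dog runs.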

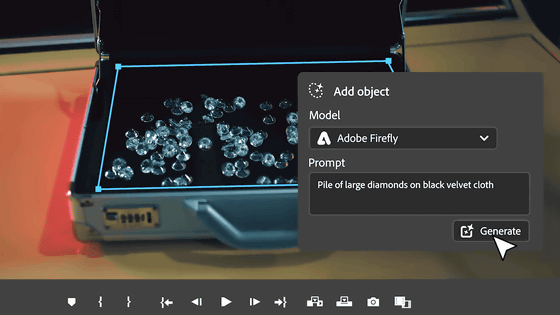

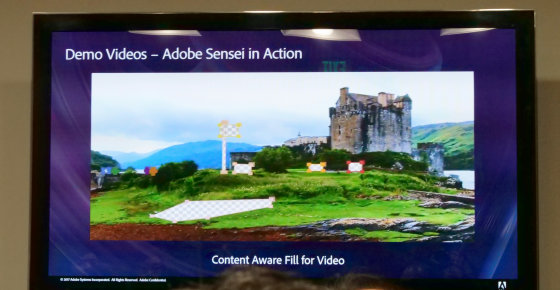

Content-Aware Fill can also be used in video editing. Manually editing every frame takes a lot of time, but with Content-Aware Fill you can delete unwanted parts from the frame ...

By adding a fill layer, the unwanted objects were erased in no time. Adobe Sensei's “Creative Intelligence” is a solution that handles the “repetitive and time-consuming tasks” of creative work with a single button. Shortening the time spent on non-creative work leaves more time for tasks that require creativity.

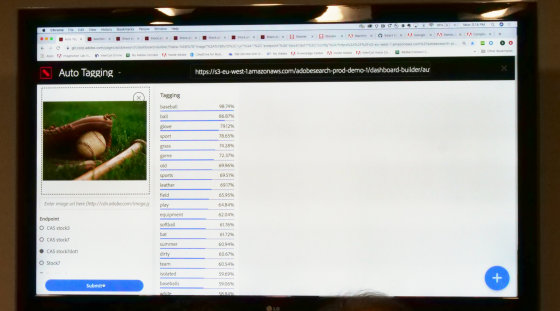

In addition, machine learning is used in Adobe Stock. The screen called “Auto Tagging” in the image below is not an Adobe product, but a visualization of how auto-tagging is performed.

More than 100 million assets are available on Adobe Stock, but to use them you first have to find them by searching. For that, each image has to be tagged with search keywords. To perform this “automatic tagging”, the system needs to understand more than 40,000 concepts.

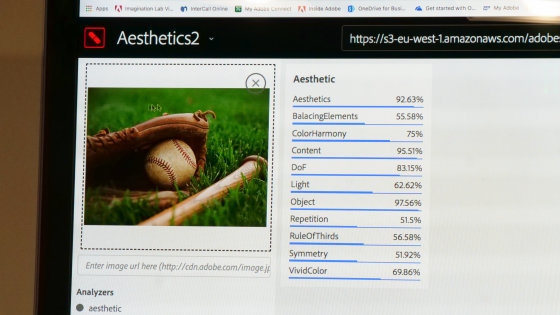

For example, when a photo showing a baseball glove, bat, and ball was uploaded, “baseball: 98.74%”, “ball: 86.87%”, “glove: 79.12%”, and so on were displayed immediately under “Tagging”. The results also show that it understands higher-level “concepts” beyond objects, such as sports, play, summer, and team, and tags the image with them.
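To show how confidence scores like these might become search keywords, here is a minimal sketch. It assumes a tagging model has already produced a score per concept; the `select_tags` helper, the threshold, and the low-scoring “tulip” entry are made up for illustration, and only the “baseball” and “ball” scores come from the demo described above.

```python
def select_tags(scores, threshold=0.5, limit=10):
    """Keep the highest-scoring tags at or above a confidence threshold."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [tag for tag, score in ranked if score >= threshold][:limit]


# Scores as fractions of 1; "tulip" is a hypothetical low-confidence tag.
scores = {"ball": 0.8687, "tulip": 0.02, "baseball": 0.9874}
tags = select_tags(scores)
```

Raising the threshold trades recall for precision: fewer tags are attached, but the ones that remain are more likely to be correct.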

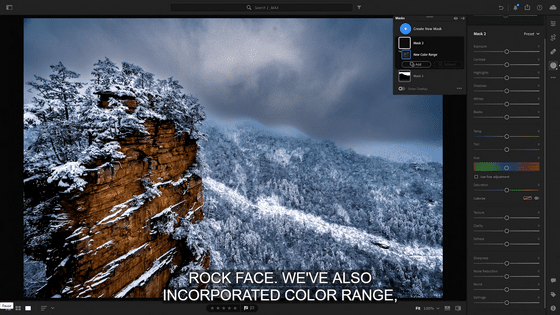

In addition, in the analysis of “Aesthetic”, element balance, color harmony, content, depth of field, light, rule of thirds

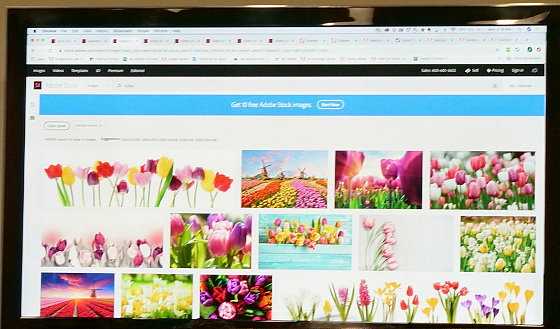

Adobe Stock uses these analysis results. When you search for tulips in actual Adobe Stock, the search results will be displayed like this ...

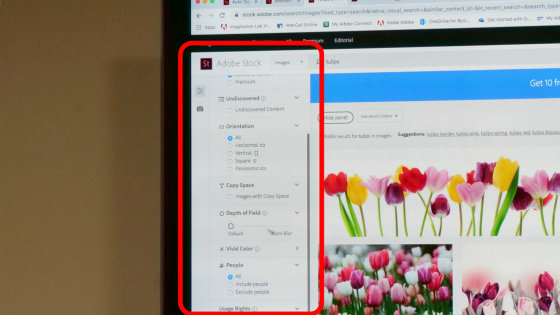

It is possible to search with more detailed conditions. From the panel, you can select the orientation of the image, depth of field, saturation, and whether to include people.

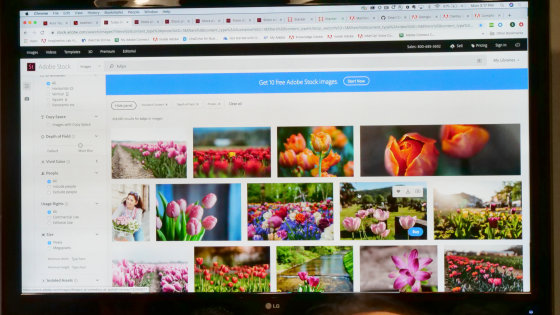

If you specify depth of field and sort the results so that only images with more background blur are shown, it looks like this. This could not be done without machine learning.

Even sunset photos ...

You can also make finer color choices, such as more vivid colors or softer colors.
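Once every image carries machine-predicted attributes, filters like these reduce to ordinary filtering and sorting. The sketch below assumes each asset already has such attributes attached; the `Asset` fields, the `search` function, and the sample values are all hypothetical and do not reflect Adobe Stock's actual data model.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    orientation: str        # "horizontal" or "vertical"
    has_people: bool
    background_blur: float  # 0.0 = everything sharp, 1.0 = strongly blurred
    vividness: float        # 0.0 = soft colors, 1.0 = very vivid colors


def search(assets, orientation=None, has_people=None, sort_by=None):
    """Filter on exact attributes, then sort by a predicted score (highest first)."""
    hits = [
        a for a in assets
        if (orientation is None or a.orientation == orientation)
        and (has_people is None or a.has_people == has_people)
    ]
    if sort_by is not None:
        hits.sort(key=lambda a: getattr(a, sort_by), reverse=True)
    return [a.name for a in hits]


# Made-up sample data standing in for tagged search results.
assets = [
    Asset("tulip_field", "horizontal", False, 0.2, 0.9),
    Asset("tulip_macro", "horizontal", False, 0.9, 0.7),
    Asset("tulip_portrait", "vertical", True, 0.6, 0.5),
]
blurred_first = search(assets, orientation="horizontal", sort_by="background_blur")
```

The machine learning lies in predicting values such as `background_blur` for millions of images; the search panel itself only has to query them.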

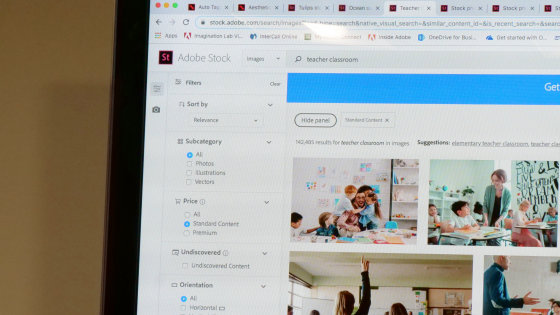

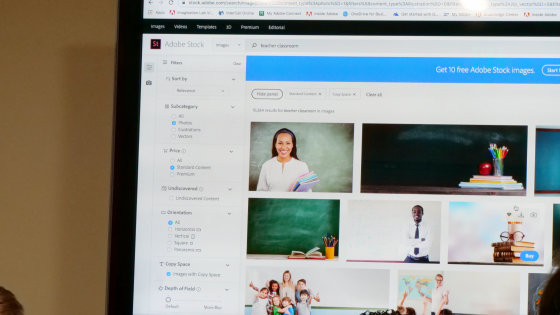

Furthermore, when you want an image to use as material for something like a textbook, you might search for “classroom” or “teacher”. The resulting images work as pictures, but they are hard to use as material because they leave little empty space.

Searching with “Copy Space” instead returns images that have empty space for placing text. Adobe Sensei learned “what an image with space for text is” through supervised learning, that is, training on labeled data.

In addition, several kinds of similarity can be recognized when searching for similar images. “Similarity” is a single word, but for some people similarity of composition matters, while for others similarity of color matters. For example, searching for images of a gazelle by “color similarity” looks like this.

If you choose compositional similarity, the colors will vary, but you will see a series of images with one gazelle in the middle of the screen.

Furthermore, the subject can be changed from gazelle to “deer” with the same composition.

It is a little hard to see, but the screen behind Prevost and Carrieka shows a picture of bright yellow and light blue houses.

It is possible to extract only the colors from this picture and find sunset images in the same colors with the keyword “ocean sunset”. In this way, powerful image search is possible using various kinds of similarity.
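One very simple way to think about color-based similarity is to compare average colors. The following sketch is an assumption for illustration only: it reduces each image to its mean RGB value and ranks candidates by distance to the query, which is far cruder than whatever Adobe Sensei actually uses. The image names and pixel values are invented.

```python
import math


def mean_color(pixels):
    """Average RGB value of a list of (r, g, b) pixels."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def rank_by_color(query_pixels, candidates):
    """Return candidate names ordered from most to least similar in color."""
    q = mean_color(query_pixels)
    return sorted(
        candidates,
        key=lambda name: math.dist(q, mean_color(candidates[name])),
    )


# Tiny made-up "images": a yellow query and two candidate sunsets.
query = [(250, 220, 40), (240, 210, 60)]
candidates = {
    "sunset_blue": [(20, 60, 200), (30, 80, 210)],
    "sunset_gold": [(255, 200, 50), (235, 215, 70)],
}
ranking = rank_by_color(query, candidates)
```

A keyword such as “ocean sunset” would first narrow the candidates, and a color ranking like this would then order what is left.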

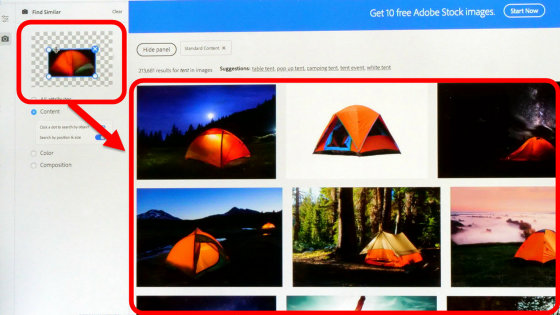

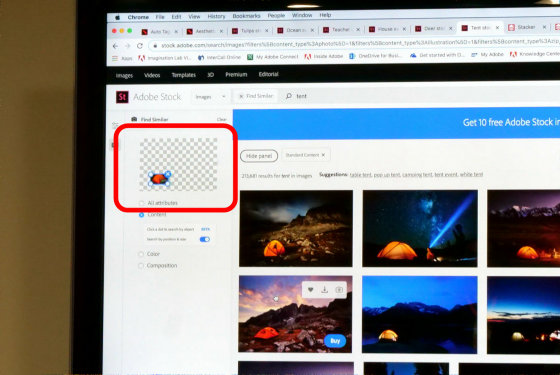

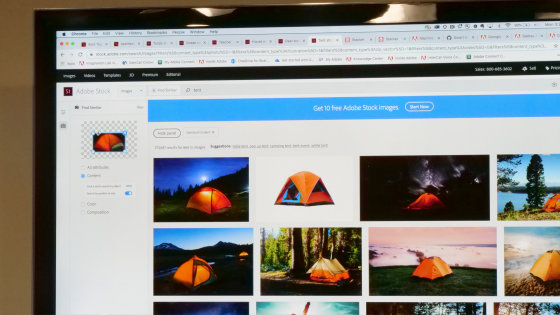

In addition, when you want an image of a tent, you can specify the composition in detail. If you adjust the size and position of the tent in the area on the left side of the screen, matching images appear in the search results. For example, the image below requests a slightly smaller tent in the lower right corner of the frame.

If you want an image with a tent in the lower left of the screen, just drag and drop it.

You can also change how much of the frame the tent occupies. The results narrow down in real time as you specify these details.
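Searching by position and size can be pictured as comparing bounding boxes. As a rough sketch, and purely as an assumption about how such a search could work, the code below scores each candidate image by the intersection over union (IoU) between the box the user drew and the box where an object detector found the tent. The function names and the detection data are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (left, top, right, bottom).

    Coordinates are normalized to the 0..1 range of the image.
    """
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def rank_by_layout(query_box, detections):
    """Order image names by how well the detected object matches the query box."""
    return sorted(detections, key=lambda name: iou(query_box, detections[name]), reverse=True)


# The user drags a smallish tent into the lower right corner.
query_box = (0.6, 0.6, 0.9, 0.9)
detections = {
    "tent_lower_left": (0.05, 0.6, 0.4, 0.95),
    "tent_lower_right": (0.55, 0.6, 0.9, 0.95),
}
ranking = rank_by_layout(query_box, detections)
```

Dragging or resizing the query box only changes `query_box`, so re-ranking is cheap enough to feel instantaneous.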

As mentioned above, Adobe Sensei is used in many Adobe products, and both the product teams and the applied research team work on how to use it. Staff on both sides contribute ideas based on their own expertise. Prevost said that building a solid company-wide framework creates a place where everyone can collaborate, which keeps the technology cycle running smoothly from development to release.
