Security lab warns that attackers can steal users' personal information or eavesdrop on them via Alexa or Google Home

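Security Research Labs (SRLabs) warns that Amazon Echo, which runs Amazon's voice assistant Alexa, and Google's smart speaker Google Home can be abused to steal user information and to eavesdrop on users.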

Smart Spies: Alexa and Google Home expose users to vishing and eavesdropping – Security Research Labs

https://srlabs.de/bites/smart-spies/

Smart speakers such as Amazon Echo and Google Home make it easy to access all kinds of information using voice commands. Their functionality can be extended with third-party applications, called “Alexa Skills” on Amazon Echo and “Actions on Google” on Google Home. In recent years, however, smart speakers have drawn criticism for collecting and analyzing user conversations without permission.

Conversations heard by Alexa are recorded and analyzed by Amazon employees - GIGAZINE

Conversations collected by Google Assistant are being listened to by Google subcontractors - GIGAZINE

SRLabs, which investigated these smart speakers, disclosed two attack methods that work against both Amazon Echo and Google Home, and warned that attackers can use smart speakers to phish for confidential user information and to eavesdrop on users.

Alexa Skills and Actions on Google are activated when the user speaks a specific phrase. For example, saying 'Alexa, open Cookpad' launches the Alexa skill 'Cookpad'. When a specific phrase is detected, a function in the application is called, and the phrase is converted to text and processed in the application's back end. In many cases, this back-end processing happens outside the control of Amazon and Google.
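The flow described above can be pictured with a small, simplified model. This is only a sketch: the `SKILLS` registry, the `route` function and the `cookpad_backend` handler are hypothetical names made up for illustration, and the real platforms use far more elaborate speech recognition and intent matching. The point it shows is that once a launch phrase is recognized, the transcribed text is handed to code the platform does not run itself.

```python
import re
from typing import Callable, Dict, Optional

# A back end is modeled as a function that receives the transcribed text.
Backend = Callable[[str], str]


def cookpad_backend(transcript: str) -> str:
    # Hypothetical third-party handler; in reality this runs on the
    # developer's own server, outside the platform's control.
    return f"Cookpad handled: {transcript}"


# Hypothetical registry mapping a skill name to its back-end handler.
SKILLS: Dict[str, Backend] = {"cookpad": cookpad_backend}

# Matches "Alexa, open <skill>" with an optional "the" before the name.
LAUNCH_PATTERN = re.compile(
    r"^\s*alexa[,\s]+open\s+(?:the\s+)?(.+?)\s*$", re.IGNORECASE
)


def route(transcript: str) -> Optional[str]:
    """Forward a launch phrase to the matching back end, if any."""
    match = LAUNCH_PATTERN.match(transcript)
    if match is None:
        return None  # not a launch phrase
    backend = SKILLS.get(match.group(1).lower())
    if backend is None:
        return None  # no skill registered under that name
    return backend(transcript)
```

Calling `route("Alexa, open the cookpad")` passes the text to the hypothetical handler, while a phrase without the wake word is ignored.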

SRLabs' research team, noting that back-end processing is outside the control of Amazon and Google, succeeded in compromising user privacy on Amazon Echo and Google Home using only the standard development interfaces. Two attacks succeeded: 'requesting and obtaining personal information, including the user's password' and 'eavesdropping on what the user says after the smart speaker appears to have stopped listening'.

SRLabs lists three building blocks for these attacks: the 'Fallback Intent', the 'Stop Intent', and a quirk of the text-to-speech engine.

◆ Requesting and obtaining personal information, including user passwords

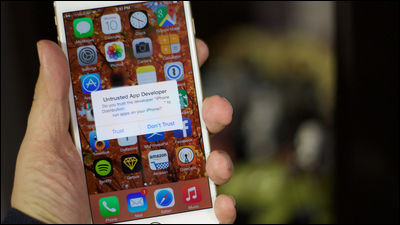

To request and obtain personal information such as the user's password, the attacker first creates a seemingly innocent application that is launched by a specific phrase, with the start intent set up to behave like a fallback intent. Amazon and Google review third-party skills before publication, but an application that behaves normally at first and is changed to the malicious behavior only after release bypasses that review. By changing the message played at launch to a phrase such as “This skill is not currently available in your country”, the attacker makes the user believe the app is not running.
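The review bypass works because the behavior that was reviewed and the behavior that is live can silently diverge. One conceivable countermeasure, sketched below from the platform's point of view, is to fingerprint the responses seen at review time and compare them with what the skill returns later. This is an illustration only: `fingerprint`, `changed_since_review` and the sample responses are hypothetical, and the source does not say that Amazon or Google do anything like this.

```python
import hashlib
from typing import Dict


def fingerprint(responses: Dict[str, str]) -> str:
    """Hash a skill's intent -> spoken-response mapping in a stable order."""
    digest = hashlib.sha256()
    for intent in sorted(responses):
        digest.update(intent.encode("utf-8"))
        digest.update(b"\x00")  # separator so adjacent fields cannot merge
        digest.update(responses[intent].encode("utf-8"))
        digest.update(b"\x00")
    return digest.hexdigest()


def changed_since_review(reviewed: Dict[str, str], current: Dict[str, str]) -> bool:
    """Return True if the live responses differ from the reviewed ones."""
    return fingerprint(reviewed) != fingerprint(current)


# Hypothetical sample data: the launch message at review time and afterwards.
reviewed = {"launch": "Welcome to the horoscope skill."}
current = {"launch": "This skill is not currently available in your country."}
```

With the sample data, `changed_since_review(reviewed, current)` reports a difference, which could be used to trigger a fresh review.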

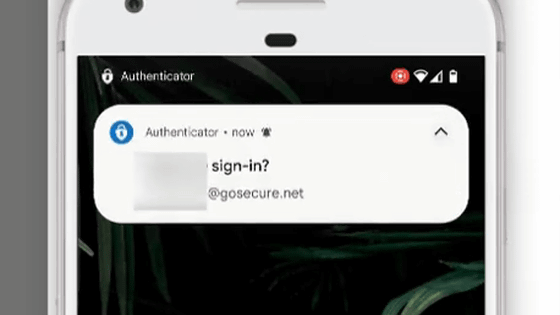

The application then stays silent for a while, after which it plays a plausible phishing message such as “A critical security update is available on your device. Start the update and enter your password”. SRLabs writes that a flow in which the user says the password aloud makes it possible to steal the password without arousing suspicion.

The following videos show how Amazon Echo and Google Home behave under this attack and how the password is stolen. The app created by the attacker starts and plays “This skill is not currently available in your country” so that it appears not to be running, and then prompts the user for a password. If the user speaks the password at this point, the app captures it and sends it to the attacker.

Smart Spies: Amazon Alexa Phishing - YouTube

Smart Spies: Google Home Phishing - YouTube

SRLabs notes that by changing the wording of the request, an attacker can also steal email addresses and other account information.

◆ Eavesdropping on what the user says after the smart speaker appears to have stopped listening

On Amazon Echo, a skill is activated when the user speaks a specific phrase. SRLabs points out that an attacker can therefore eavesdrop on confidential information by choosing, as the skill's trigger phrases, simple words that appear frequently in conversation, or words such as “mail”, “password”, and “address” that suggest personal information is about to follow.

In the following video, the user finishes using an Alexa skill and stops it by saying 'Stop'. The speaker says “Goodbye”, so the user thinks the skill has stopped, but in reality it remains active. If the user then speaks a sentence that begins with one of the chosen words, the skill records what was said and sends it to the attacker. The demonstration reproduces this whole flow.

Smart Spies: Amazon Alexa Eavesdropping - YouTube

On Google Home, an action can wait about 9 seconds for the user to speak, and by outputting silence it can then wait another 9 seconds. This can be repeated up to 3 times, so, unlike on Amazon Echo, an eavesdropping action does not need a specific phrase as its trigger: anything the user says in the meantime is captured. If nothing is spoken for about 30 seconds the eavesdropping app stops, but as long as the user says something within each 30-second window, it can keep eavesdropping indefinitely.
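The timing can be checked with a little arithmetic: three silent windows of about 9 seconds each add up to roughly 27 seconds, which matches the "about 30 seconds" figure. The sketch below is a simplified model of that session lifetime, not code for the device. The constants come from the figures in this article, the function name `session_end` is hypothetical, and the assumption that any detected speech fully resets the countdown is a simplification.

```python
from typing import Iterable

LISTEN_WINDOW_S = 9      # approximate wait per listening window (from the article)
MAX_SILENT_WINDOWS = 3   # silent windows tolerated before the action stops


def session_end(speech_times: Iterable[float]) -> float:
    """Return the time in seconds at which the session would stop.

    speech_times holds the moments (seconds after launch) at which the user
    says something. Assumption: each utterance restarts the full countdown.
    """
    timeout = LISTEN_WINDOW_S * MAX_SILENT_WINDOWS  # about 27 s of silence
    deadline = timeout
    for t in sorted(speech_times):
        if t > deadline:
            break  # the session had already stopped before this utterance
        deadline = t + timeout
    return deadline
```

With no speech at all the model stops after 27 seconds. With utterances at 10, 30 and 50 seconds it is still running until 77 seconds, which illustrates why ordinary conversation keeps such a session alive.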

The following video shows Google Home continuing to eavesdrop on a conversation.

Smart Spies: Google Home Eavesdropping - YouTube

SRLabs warns about the dangers of smart speakers and says users should approach third-party voice apps with the same care they take when installing a new app on a smartphone. It also says that using specific words such as “password” in an application's activation phrase should be banned entirely.
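A ban on sensitive activation phrases could be enforced with a simple screening step during review. The sketch below is a minimal illustration under stated assumptions: `SENSITIVE_WORDS` contains only the example words quoted in this article, `flag_phrases` is a hypothetical helper, and a real review process would need a much broader word list and handling for other languages.

```python
import re
from typing import Iterable, List

# Example words taken from the article; a real list would be far longer.
SENSITIVE_WORDS = {"password", "mail", "address"}


def flag_phrases(phrases: Iterable[str]) -> List[str]:
    """Return the activation phrases that contain a sensitive word."""
    flagged = []
    for phrase in phrases:
        # Split into lowercase alphabetic words so matching is whole-word.
        words = set(re.findall(r"[a-z]+", phrase.lower()))
        if words & SENSITIVE_WORDS:
            flagged.append(phrase)
    return flagged
```

For instance, `flag_phrases(["open cookpad", "my password is"])` returns only the second phrase. Matching whole words keeps unrelated words that merely contain "mail" or "address" as a substring from being flagged.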
