A technology is born that turns a single image into footage in which the viewpoint moves as if the camera itself were moving

A technology called the "3D Ken Burns effect" has been developed that uses a single image to create footage in which the viewpoint moves as if the camera itself were moving.

[1909.05483] 3D Ken Burns Effect from a Single Image

https://arxiv.org/abs/1909.05483

Simon Niklaus - 3D Ken Burns Effect from a Single Image

http://sniklaus.com/papers/kenburns

You can see what the developed 3D Ken Burns effect looks like by watching the following movie.

The original image shows a woman in a wedding dress and a flower girl, photographed from a short distance.

The 3D Ken Burns effect turns the original image into footage that looks as if the camera is approaching the subjects. Unlike a simple magnification of the image, distant objects in the background are barely enlarged, while nearby objects are enlarged significantly. In addition, the camera height appears to change in 3D space.

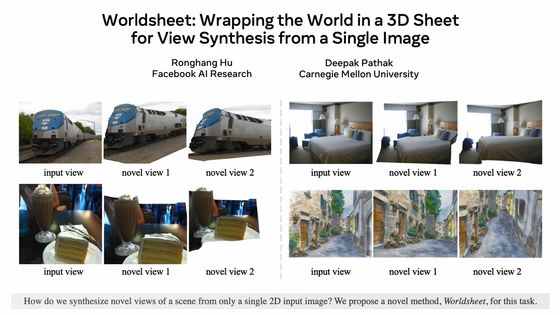

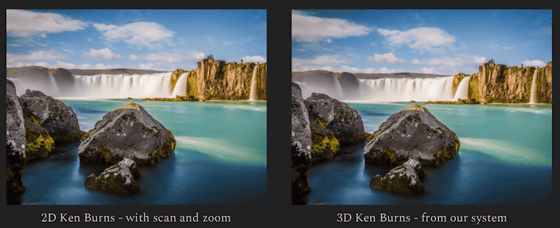

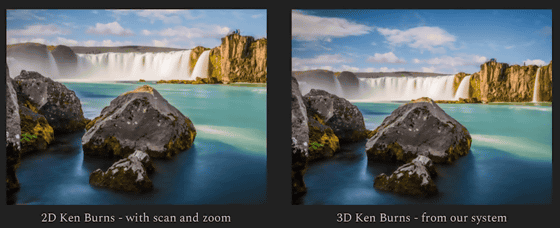

Below is a comparison of the 2D Ken Burns effect (left), which is just a zoom effect, and the 3D Ken Burns effect (right). The 2D Ken Burns effect enlarges everything, including the waterfall in the background, so it is easy to recognize as a simple image enlargement. The 3D Ken Burns effect, on the other hand, does not change the position of the background and enlarges only the rock in front. The height of the viewpoint also appears to change, so the result looks as if it were shot by an aerial photography drone approaching the scene. Applying the 3D Ken Burns effect takes just a few seconds and produces a movie that looks as if the camera is approaching.

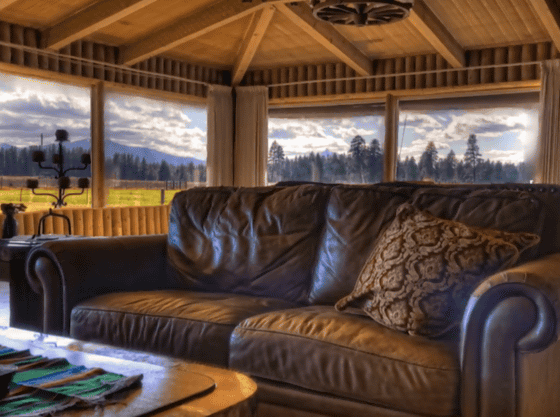

The 3D Ken Burns effect uses the principles of perspective to enlarge the foreground more than the background. In the example below, you can see that the background seen through the window is almost unchanged, while the sofa in the foreground is greatly enlarged.
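The perspective principle behind this can be illustrated with a few lines of arithmetic. The sketch below is not the authors' code. It assumes an idealized pinhole camera, in which an object's on-screen size is inversely proportional to its distance from the camera. The function name `magnification` and the distances for the sofa and the window view are hypothetical values chosen for illustration.

```python
def magnification(depth: float, camera_advance: float) -> float:
    """Apparent scale factor of an object at distance `depth` after the
    camera moves forward by `camera_advance` (same units as `depth`).

    Assumption: pinhole camera, so on-screen size is proportional to
    1 / distance. Moving forward changes the distance from `depth` to
    `depth - camera_advance`.
    """
    if camera_advance >= depth:
        raise ValueError("camera would reach or pass the object")
    return depth / (depth - camera_advance)


# Hypothetical scene: a sofa 3 m away, scenery through the window 50 m away.
# The camera moves 1 m forward.
sofa_scale = magnification(3.0, 1.0)     # 3 / 2
window_scale = magnification(50.0, 1.0)  # 50 / 49

print(f"sofa:   x{sofa_scale:.3f}")
print(f"window: x{window_scale:.3f}")
```

Under these assumptions the sofa grows by half while the scenery behind the window grows by about 2%. A 2D zoom, by contrast, applies one scale factor to every pixel regardless of depth.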

Using 32 virtual 3D spaces published in the

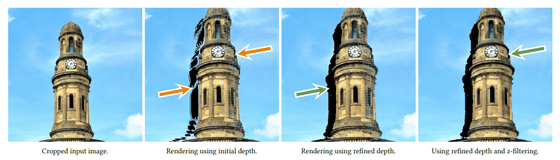

Three types of neural networks use what they have learned to determine a point cloud for the image and the depth at each point. From those depth values, the 3D Ken Burns effect calculates how much each object in the image should be magnified so that the camera appears to have moved. Because objects may crack or distort as they are enlarged, the research team also developed a correction system that is applied automatically according to the situation.
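To make the role of the point cloud concrete, here is a minimal sketch of lifting a depth map into 3D points and viewing them from a shifted camera. It is an illustration only, not the method from the paper: it assumes a pinhole camera with the principal point at the image center, and the names `unproject`, `project`, `focal` and `camera_shift` are hypothetical. The depth values are hand-written here, whereas in the article they come from the neural networks.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]
Pixel = Tuple[float, float]


def unproject(depth: List[List[float]], focal: float) -> List[Point3D]:
    """Lift every pixel (u, v) with depth z to a 3D point (row-major order)."""
    height, width = len(depth), len(depth[0])
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0  # assumed principal point
    points: List[Point3D] = []
    for v in range(height):
        for u in range(width):
            z = depth[v][u]
            points.append(((u - cx) * z / focal, (v - cy) * z / focal, z))
    return points


def project(
    points: List[Point3D],
    focal: float,
    width: int,
    height: int,
    camera_shift: Point3D = (0.0, 0.0, 0.0),
) -> List[Optional[Pixel]]:
    """Project points into a camera translated by `camera_shift`.

    Returns None for points that end up at or behind the camera.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    tx, ty, tz = camera_shift
    pixels: List[Optional[Pixel]] = []
    for x, y, z in points:
        xc, yc, zc = x - tx, y - ty, z - tz
        if zc <= 0:
            pixels.append(None)
            continue
        pixels.append((focal * xc / zc + cx, focal * yc / zc + cy))
    return pixels


# One image row: two near pixels (depth 2) and one far pixel (depth 10).
depth_row = [[2.0, 2.0, 10.0]]
cloud = unproject(depth_row, focal=1.0)
# Move the hypothetical camera one unit forward along the viewing axis.
moved = project(cloud, focal=1.0, width=3, height=1,
                camera_shift=(0.0, 0.0, 1.0))
for u, pixel in enumerate(moved):
    print(u, pixel)
```

In this toy row the near pixel at the left edge moves a full pixel outward, while the far pixel moves only about a tenth of a pixel. Neighboring points that move by different amounts leave gaps between them, which is one way to picture the cracks that the correction system described above has to deal with.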

Simon Niklaus, the lead author of the paper, studies computer vision and deep learning at Portland State University. Niklaus wrote the paper during an internship at Adobe Research, Adobe's research organization, and co-authors Long Mai and Jimei Yang are both Adobe researchers. Niklaus is considering releasing the code and dataset for the 3D Ken Burns effect, but at the time this article was written he had not yet obtained Adobe's approval.


in Software, Posted by darkhorse_log