A larger model of the AI text generation tool "GPT-2", once deemed "too dangerous", is released to the public

The AI text generation tool "GPT-2" produced such sophisticated text that it was judged "too dangerous", and its full publication was postponed. GPT-2 has been released in stages, with a small model in February 2019 and a medium model in May, and now a larger model with 774 million parameters has been released.

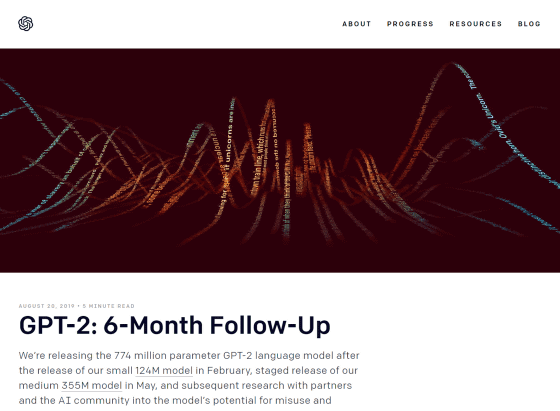

GPT-2: 6-Month Follow-Up

https://openai.com/blog/gpt-2-6-month-follow-up/

GPT_2_August_Report.pdf

(PDF file) https://d4mucfpksywv.cloudfront.net/GPT_2_August_Report.pdf

OpenAI developed the text generation AI model "GPT-2" in February 2019, but judged that its highly sophisticated output created "a major risk of misuse" and postponed the full release as "too dangerous". As a result, not all four models were released in February: only the smallest, with 124 million parameters, was published at that time, followed by a medium-sized model with 355 million parameters in May.

OpenAI's decision drew wide attention, and some experts argued that the postponement was a mistake. In addition, because OpenAI received a one-billion-dollar (about 110 billion yen) investment from Microsoft and launched a new company in March 2019, some take the view that the postponement was merely a stunt to attract attention.

Against this backdrop, on August 20, 2019 (local time), OpenAI released a GPT-2 model with 774 million parameters. The largest model, with 1.5 billion parameters, has not yet been released. OpenAI has also published an open-source legal agreement for GPT-2 and revealed that it has model-sharing partnerships with several companies.

by Dung Anh

In its announcement, OpenAI explained the staged release as follows: performance on dimensions such as the reliability of generating coherent text tends to improve with model size. Because it was concerned about misuse of the larger models, OpenAI decided not to publish all four GPT-2 models at once. Staggering the releases gave it time to analyze risks and to apply what it learned from the smaller models to the larger ones.

According to a Cornell University survey published on August 2, 2019, 70% of people who read text generated by GPT-2 mistook it for an article from The New York Times. In light of such results, OpenAI is being very cautious about releasing language models.

To prevent GPT-2 from being abused, OpenAI is working with security experts to monitor how people use GPT-2, and says it will conduct research on automatically detecting bias and misuse. It also announced that it has partnered with the University of Oregon, the University of Texas at Austin, the Middlebury Institute of International Studies, and Cornell University to release models like GPT-2 safely.

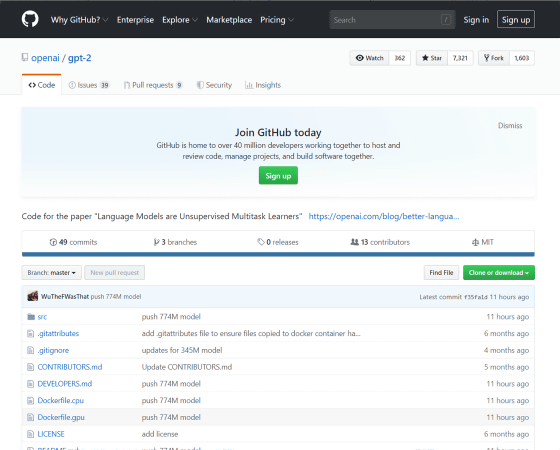

The code base for the 774-million-parameter GPT-2 model is available at the following link.

GitHub - openai/gpt-2: Code for the paper "Language Models are Unsupervised Multitask Learners"

https://github.com/openai/gpt-2

in Software, Posted by darkhorse_log