"Hate speech detection AI" may actually promote racism


Jigsaw, Google's sister company and formerly a Google think tank, works on countering online censorship and cyberattacks. It is developing Perspective, a machine learning system that detects hate speech on social media. However, researchers at the University of Washington report in a paper that Perspective's algorithm could actually promote racism.

The Risk of Racial Bias in Hate Speech Detection

(PDF file) https://homes.cs.washington.edu/~msap/pdfs/sap2019risk.pdf

Training bias in AI 'hate speech detector' means that tweets by Black people are far more likely to be censored / Boing Boing

https://boingboing.net/2019/08/14/white-aligned-english.html

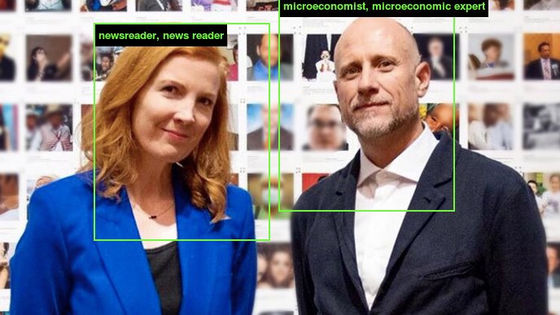

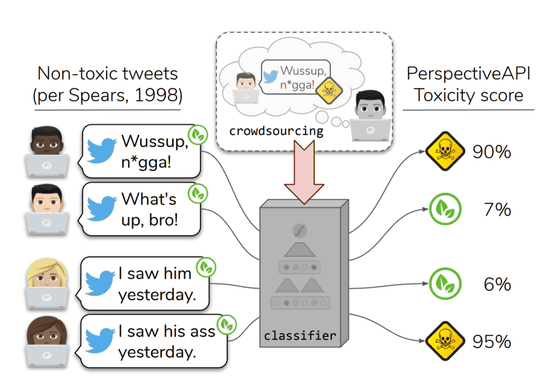

A research group at the University of Washington trained Perspective's AI on datasets of more than 100,000 tweets that humans had previously labeled as either “no problem” or “hate speech.” When the researchers then ran tweets through Perspective's API, about half of the tweets with no problems were judged harmful.
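The evaluation described above comes down to a false-positive rate: of the tweets humans labeled harmless, what share does the model flag? Below is a minimal Python sketch, assuming Perspective's public `comments:analyze` endpoint with the `TOXICITY` attribute and a hypothetical cutoff of 0.5 for "harmful". The article does not say which attribute or threshold the researchers used, and the sample scores are made up for illustration, not taken from the paper.

```python
import json
import urllib.request

# Perspective API endpoint; calling it requires a Google Cloud API key.
ENDPOINT = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"

# Hypothetical cutoff (an assumption): scores at or above this count as "harmful".
THRESHOLD = 0.5


def toxicity_score(text: str, api_key: str) -> float:
    """Ask Perspective for the TOXICITY probability of one piece of text."""
    body = {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {"TOXICITY": {}},
    }
    req = urllib.request.Request(
        f"{ENDPOINT}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        result = json.load(resp)
    return result["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def false_positive_rate(examples: list[tuple[str, float]]) -> float:
    """Share of human-labeled harmless tweets that the model flags.

    Each example is (human_label, model_score); human_label is "ok" or "hate".
    """
    harmless = [score for label, score in examples if label == "ok"]
    if not harmless:
        return 0.0
    flagged = sum(1 for score in harmless if score >= THRESHOLD)
    return flagged / len(harmless)


# Made-up scores standing in for API results (no network call here).
sample = [("ok", 0.72), ("ok", 0.10), ("ok", 0.55), ("ok", 0.20), ("hate", 0.91)]
print(false_positive_rate(sample))  # 2 of 4 harmless tweets flagged -> 0.5
```

In practice each score would come from `toxicity_score`; keeping the scoring and the rate calculation separate lets the metric be checked offline.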

According to the research group, many of the tweets wrongly judged harmful came from African American users. Even after training Perspective on a dataset of 5.4 million tweets, the group found that tweets from African American users were still about twice as likely to be judged harmful as tweets from users of other races.

African American users often tweet in Black English (AAVE, African American Vernacular English). For example, “Nigger,” which is generally regarded as a discriminatory slur, is used in Black English as a way for speakers to address one another and carries no discriminatory meaning. However, Perspective's API, which cannot distinguish general English from Black English, misclassified about half of Black English tweets as harmful. Black English tweets were about 1.5 times as likely to be flagged as harmful content.
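Figures like "about twice as likely" or "about 1.5 times as likely" are ratios of flag rates between groups. A minimal Python sketch of that arithmetic follows. The group labels `aae` and `other` are hypothetical, and the counts are made up and chosen only so the ratio comes out to 1.5; they are not the paper's data.

```python
from collections import Counter


def flag_rates(records):
    """Fraction of tweets flagged as harmful, per group.

    records: iterable of (group, flagged) pairs, e.g. ("aae", True).
    """
    totals = Counter()
    flagged = Counter()
    for group, is_flagged in records:
        totals[group] += 1
        if is_flagged:
            flagged[group] += 1
    return {g: flagged[g] / totals[g] for g in totals}


def disparity_ratio(rates, group, reference):
    """How many times more often `group` is flagged than `reference`."""
    return rates[group] / rates[reference]


# Hypothetical toy data: 6 of 8 "aae" tweets flagged, 4 of 8 "other" tweets flagged.
records = [("aae", i < 6) for i in range(8)] + [("other", i < 4) for i in range(8)]
rates = flag_rates(records)
print(rates)  # {'aae': 0.75, 'other': 0.5}
print(disparity_ratio(rates, "aae", "other"))  # 1.5
```

A ratio of 1.0 would mean both groups are flagged equally often; values above 1.0 mean the first group is flagged more.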

The research group also pointed out that Perspective's dataset was labeled by humans. Because the human-labeled data was biased to begin with, with Black English tweets systematically mislabeled as offensive, the group argues that this bias was amplified in the model's judgments.
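To see how label bias can carry over into a model, here is a deliberately tiny, hypothetical Python sketch. It is not Perspective's method: it swaps in a naive per-token classifier that scores each word by the share of training tweets containing it that annotators labeled offensive. The word `dialectword` is a placeholder for a harmless dialect term, and the labels are invented so that annotators mark tweets containing it as offensive.

```python
from collections import Counter


def train_token_scores(labeled):
    """For each token, the share of training tweets containing it
    that annotators labeled offensive."""
    seen = Counter()
    offensive = Counter()
    for text, is_offensive in labeled:
        for tok in set(text.lower().split()):
            seen[tok] += 1
            if is_offensive:
                offensive[tok] += 1
    return {tok: offensive[tok] / seen[tok] for tok in seen}


def predict(scores, text, threshold=0.5):
    """Flag a tweet if any of its known tokens meets the threshold."""
    return any(scores.get(tok, 0.0) >= threshold for tok in text.lower().split())


# Toy training set. "dialectword" is a hypothetical placeholder for a
# benign dialect term; the first two labels are the biased ones.
labeled = [
    ("yo dialectword good morning", True),
    ("dialectword nice game", True),
    ("good morning", False),
    ("nice game", False),
    ("good morning all", False),
    ("nice game today", False),
    ("you idiot", True),
]
scores = train_token_scores(labeled)
print(predict(scores, "dialectword good morning"))  # True: biased label learned
print(predict(scores, "good morning"))              # False
```

Because the labels are the model's only signal, a benign tweet containing the placeholder term is flagged while the same tweet without it is not. This toy shows label bias propagating into predictions; it does not by itself model the amplification the researchers describe.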

The research group warned that Perspective's algorithm itself may reinforce prejudice against African Americans, concluding: “To avoid unintentional racial discrimination in hate speech detection, special attention should be paid to the effects of language differences.”


in Software, Posted by log1i_yk