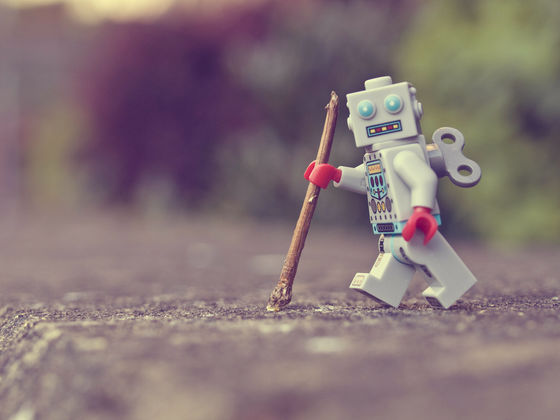

Google promotes Internet standardization of 'robots.txt' which is indispensable for website management

Search engines such as Google and bing can search information on various sites because bots called crawlers automatically crawl the sites. In order for the site administrator to control site crawling by this crawler, a text file called '

draft-rep-wg-topic-00-Robots Exclusion Protocol

https://tools.ietf.org/html/draft-rep-wg-topic-00

Official Google Webmaster Central Blog: Formalizing the Robots Exclusion Protocol Specification

https://webmasters.googleblog.com/2019/07/rep-id.html

Robot exclusion protocol (REP) using robots.txt was invented in 1994 by Martin Custer, a software engineer in the Netherlands. Although search engines use crawlers to automatically crawl sites to gather information, they could overwhelm the site's servers. Therefore, the mechanism of REP is to reduce the load on the server by controlling the crawling of the crawler with a text file called robots.txt, and to optimize the crawling by the search engine.

The robots.txt has been in widespread use for 25 years, and as of 2019 nearly 500 million websites have been used, becoming de facto standards. However, it is difficult to define crawler control correctly in robots.txt because robots.txt rules formulated only by volunteers have no formal standard, and crawlers differ in their interpretation of robots.txt. It has been said.

by

So, Google documents how REP is adopted today and submitted a draft of robots.txt to the Internet Engineering Task Force (IETF), which develops standards for various technologies used on the Internet . The REP draft is not only Google developers, but also Caster, the creator of REP.

Based on the rules devised in 1994, Google's devised standard essentially defines undefined scenarios for analysis and matching of robots.txt, and extends it in line with the modern web environment. Furthermore, in order to unify the notation of robots.txt, the syntax is defined more clearly. As an example of the specification, Google has listed the following four points on the official blog.

1: You can use robot.txt with any URI- based transfer protocol such as FTP, CoAP, etc. as well as HTTP.

2: The maximum file size to be applied is set to 500 KB, and contents exceeding the file size are ignored.

3: The request is cached for up to 24 hours. It is possible to change the cache time by using the Cache-Control header.

4: Unauthorized pages will not be crawled if robots.txt can not be accessed due to server failure.

The standardization of robots.txt is very important for crawler developers who use robots.txt. This is the stage when the draft was submitted to the IETF to the last, and it can not be said that it has been formally standardized, but if it is standardized, the developers and site administrators will have robots.txt under the unified specification. It becomes possible to use it.

by Nuwandalice

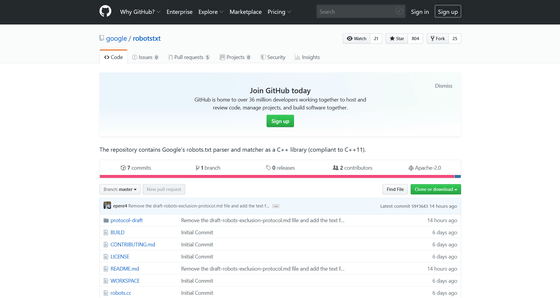

The robots.txt parser and matcher library are open sourced and published on GitHub.

GitHub-google / robotstxt: The repository contains Google's robots.txt parser and matcher as a C ++ library (compliant to C ++ 11).

https://github.com/google/robotstxt

Related Posts:

in Software, Web Service, Posted by log1i_yk