Google announces AR feature that brings Google Search results into real space in 3D

Google has announced a new AR feature that can place the 3D models shown in search results into real space, that is, right in front of you at full size. This should make online shopping easier than ever, and by showing objects with a scale and realism that 2D images cannot convey, it further expands the possibilities of the Internet.

Helpful new visual features in Search and Lens

First, the AR feature, which is scheduled to launch in Google Search in the second half of May 2019. It lets you place 3D objects shown in Google Search into the real world. The following video gives a good idea of what the AR feature looks like.

With new AR features in Search rolling out later this month, you can place, view and interact with 3D objects right in your own space. #io19 pic.twitter.com/Q61U0r2Hvg

— Google (@Google) May 7, 2019

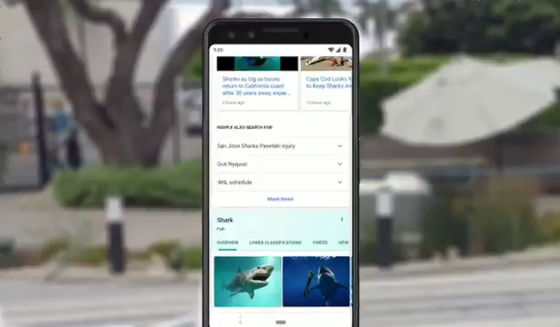

A Google search brings up an image of a shark, and ...

... a 3D model of the shark. Tap the 'View in 3D' button.

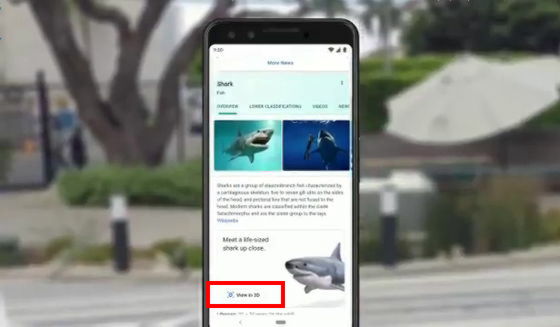

The 3D model of the shark is then displayed like this.

You can view the model from various angles by touching the screen with a finger.

Tap 'View in your space' and ...

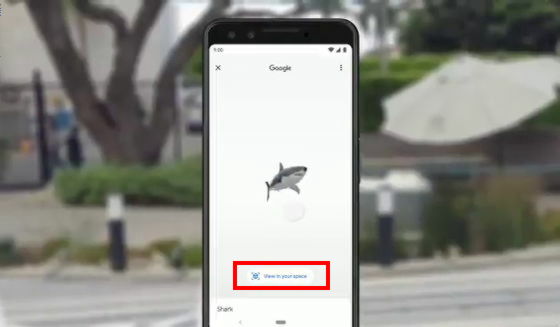

AR makes the shark appear in the space where you are.

It feels as if there really is a shark in front of you. Because the model is reproduced at actual size, you can understand what you searched for in more detail and more realistically than with 2D images.

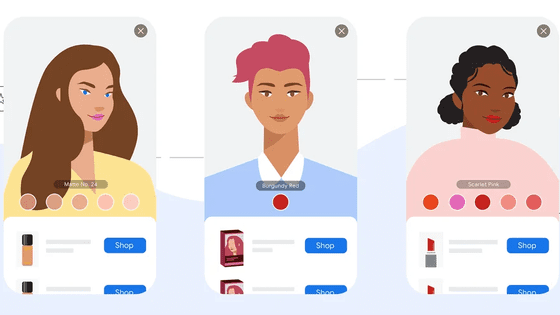

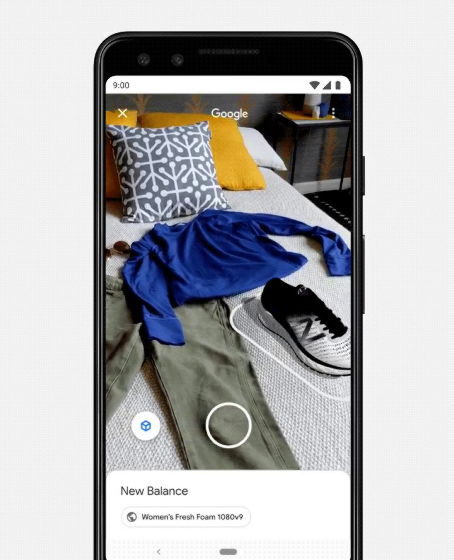

The AR function is also very useful for online shopping.

Often, seeing is understanding. New features in Google camera and computer vision and AR to overlay visual information onto your physical surroundings → https://t.co/rrvrEE60Hl #io19 pic.twitter.com/yCEO1gIky4

— Google (@Google) May 7, 2019

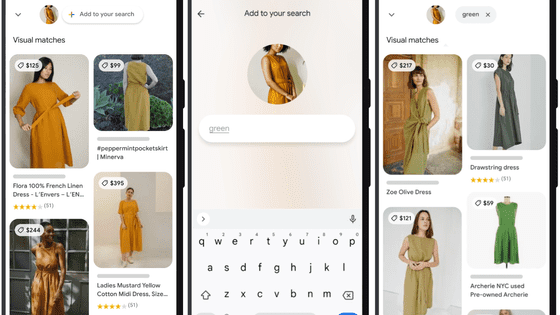

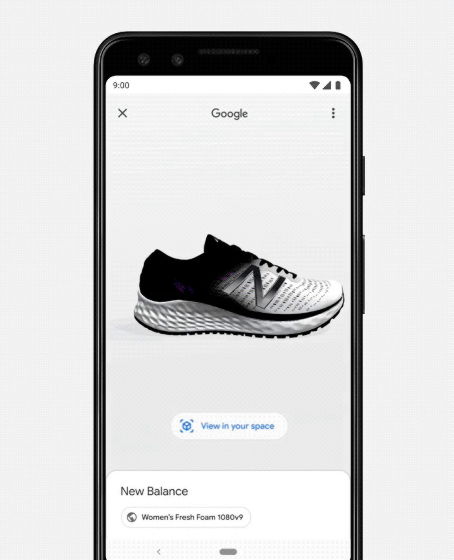

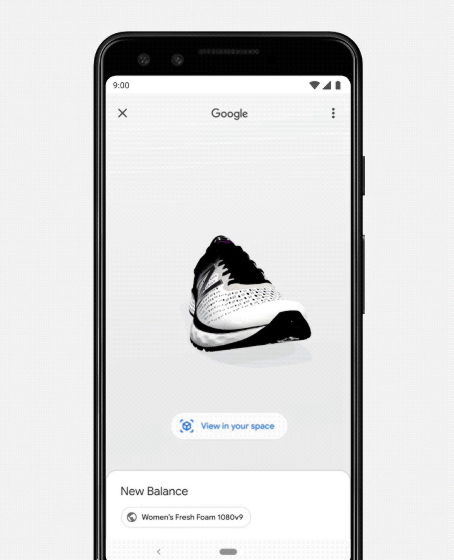

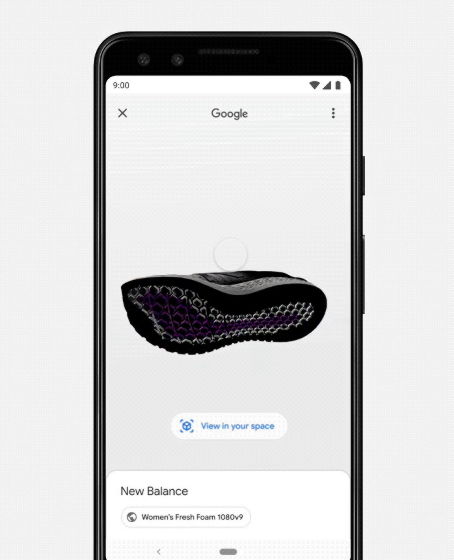

You can examine the shoes you want in detail from various angles ...

... and by placing them in the real world, you can also check how they go with clothes you already own.

In addition, Google's development team is working with NASA, New Balance,

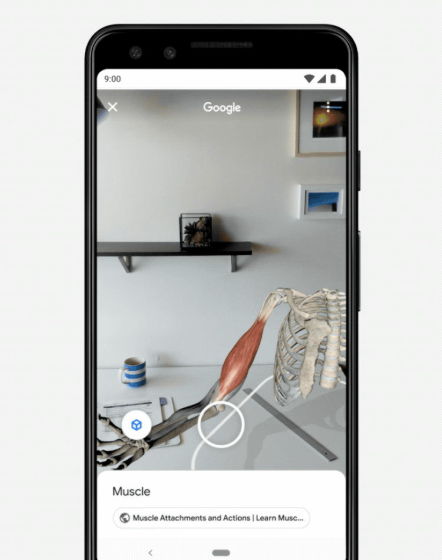

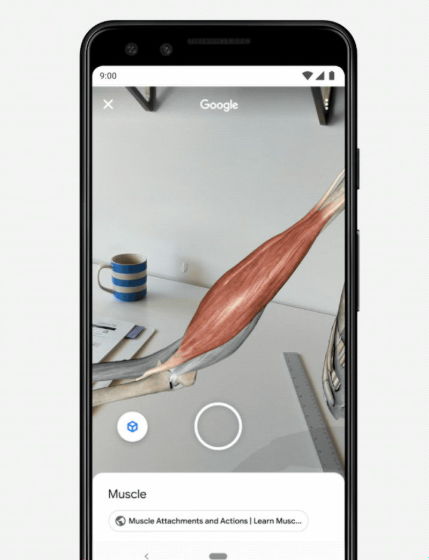

For example, with the anatomy app Visible Body, you can view muscle models in front of you at full size and see exactly how the muscles react when joints move. This makes the feature useful not only for shopping but also for studying.

You can observe how the muscles contract and stretch from various angles.

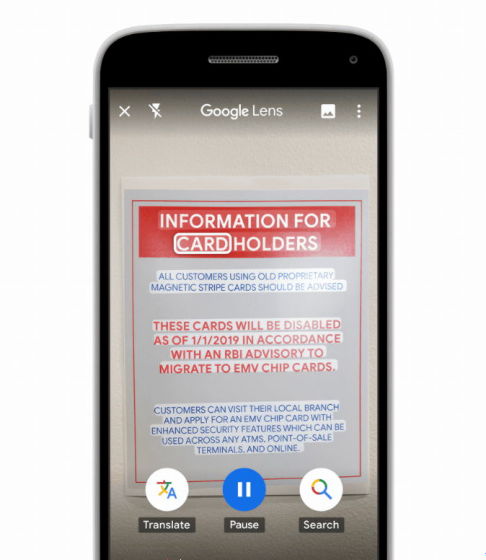

Another 'vision'-related announcement was a set of new features for Google Lens.

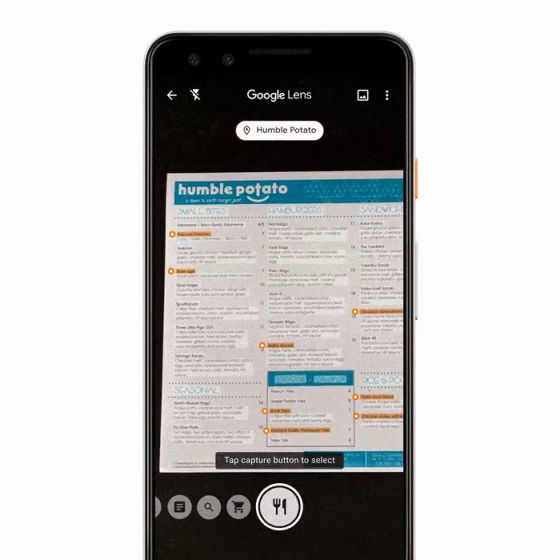

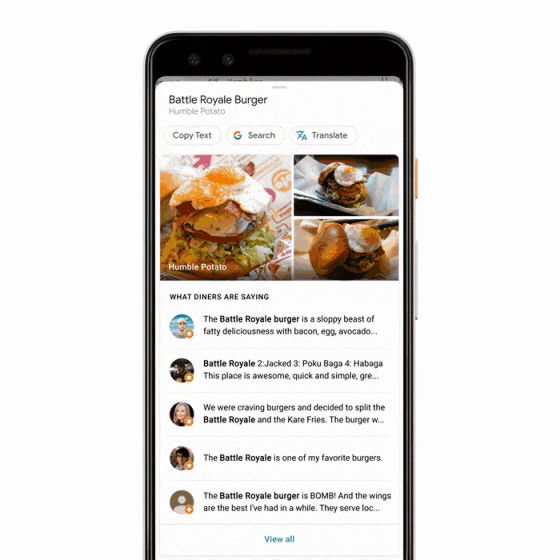

For example, if you go to a restaurant and point Google Lens at the menu, it highlights the popular dishes. You can see how it works below.

Today's special: Google Lens automatically highlights what's popular on a menu. When you tap on a dish, you can see what it looks like and what people are saying about it, thanks to photos and reviews from @googlemaps. #io19 pic.twitter.com/5PcDsj1VuQ

— Google (@Google) May 7, 2019

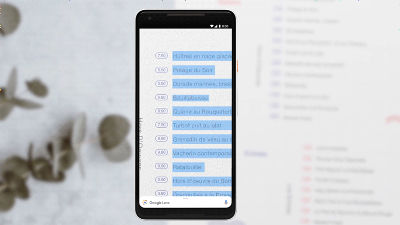

When you point the camera at a physical menu ...

... after a moment, some items are highlighted in orange. These are the popular dishes.

Tap a dish name and its photos and reviews appear like this, which raises the odds of eating something delicious even at a restaurant you are visiting for the first time. Behind the scenes, Google Lens first recognizes every item on the menu using cues such as font, style, color and description, and then matches each dish name against the related photos, ratings and so on from restaurant reviews on Google Maps.
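Google has not described the matching step in detail. As a rough illustration only, the sketch below assumes Lens has already read the dish names off the menu as plain text, and pairs each one with the closest dish name in a hypothetical table of review data (standing in for Google Maps) using simple fuzzy string matching from Python's standard `difflib` module. The data, thresholds and function names are all made up for this example.

```python
from difflib import SequenceMatcher

# Hypothetical review data standing in for Google Maps:
# dish name -> (average rating, number of reviews)
REVIEWS = {
    "Margherita Pizza": (4.7, 120),
    "Carbonara": (4.5, 85),
    "Tiramisu": (4.8, 60),
}


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity ratio in [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def match_menu(menu_lines, reviews, threshold=0.8):
    """Map each recognized menu line to its best-matching reviewed dish.

    Lines whose best match scores below `threshold` are left unmatched.
    """
    matches = {}
    for line in menu_lines:
        best_name, best_score = None, 0.0
        for name in reviews:
            score = similarity(line, name)
            if score > best_score:
                best_name, best_score = name, score
        if best_name is not None and best_score >= threshold:
            matches[line] = best_name
    return matches


def popular_items(matches, reviews, min_reviews=50):
    """Menu lines to highlight: matched dishes with at least `min_reviews` reviews."""
    return [line for line, name in matches.items() if reviews[name][1] >= min_reviews]


if __name__ == "__main__":
    # Text assumed to have been read off a menu by OCR (hypothetical).
    menu = ["Margherita pizza", "Carbonara", "Garlic Bread", "Tiramisu"]
    matched = match_menu(menu, REVIEWS)
    for line in popular_items(matched, REVIEWS, min_reviews=80):
        print("highlight:", line)
```

A real system would presumably rely on far richer signals, such as the layout cues mentioned above and the user's location to pick the right restaurant; plain string similarity is only a stand-in here.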

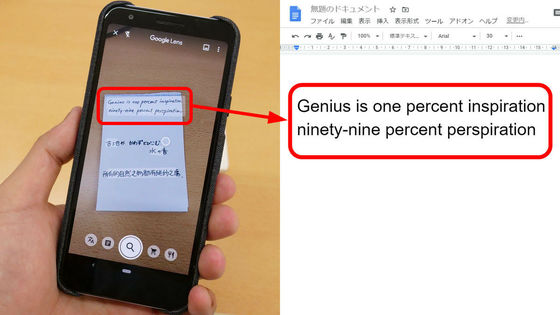

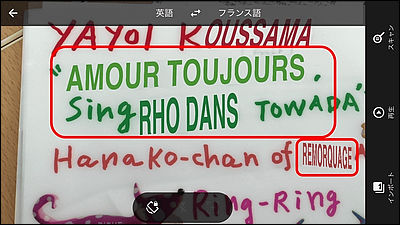

Citing the fact that '800 million adults around the world have trouble reading things like bus schedules and bank documents,' Google has also added a feature that reads text aloud when you point the camera at it, highlighting each word as it is read. It is useful for people with visual impairments, and because tapping a word also shows its definition, travelers should appreciate it too. Google plans to offer this feature starting with the Google Lens built into

Google also announced a project called 'Live Relay' that lets people who have difficulty speaking or hearing make phone calls. It converts voice to text in real time, and it also lets people who get an incoming call in a situation where it is hard to talk communicate over the phone while preserving their privacy.

Live Relay: Easier phone calls without voice or hearing

https://blog.google/outreach-initiatives/accessibility/live-relay-phone-calls-io/

In addition, 'Project Euphonia' is underway to address communication problems for people with speech impairments caused by conditions such as stroke and multiple sclerosis. Even if someone cannot speak clearly, Google's AI-based software aims to let them communicate smoothly with the people around them by instantly converting what they say into text.

How AI can improve products for people with impaired speech

https://blog.google/outreach-initiatives/accessibility/impaired-speech-recognition/


in Software, Web Service, Video, Posted by darkhorse_log