AI text generator deemed 'too dangerous' by its own development team because it so easily produces highly convincing text

by rawpixel

OpenAI, a nonprofit AI research organization backed by Elon Musk and others, has developed a text generator that could be called a text version of "deepfake," the AI (artificial intelligence) technique for manipulating images. However, because it can so easily generate highly convincing text, the development team is concerned that it is "too dangerous."

New AI fake text generator may be too dangerous to release, say creators | Technology | The Guardian

https://www.theguardian.com/technology/2019/feb/14/elon-musk-backed-ai-writes-convincing-news-fiction

OpenAI has developed a new text-generating AI model called "GPT-2." However, because GPT-2 is so capable and the risk of misuse is so high, OpenAI has decided to postpone publishing the full technical details of the research.

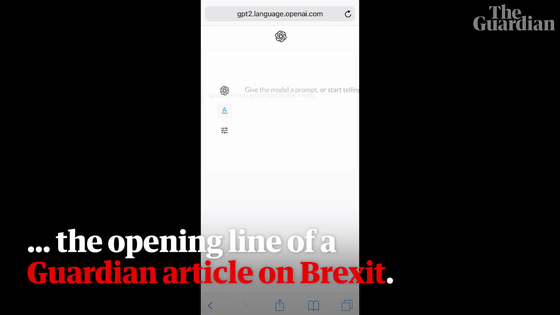

Although publication was postponed, The Guardian, a leading UK newspaper, was given the opportunity to try GPT-2, and the following video shows how GPT-2 actually generates text.

How OpenAI writes convincing news stories and works of fiction - YouTube

GPT-2 is an AI model that can automatically generate news articles and fiction. As a test, a human enters the opening sentence of a Brexit-related article of the kind that might run in The Guardian.

The opening sentence written by the human reads: "Brexit has already cost the British economy as much as £80 billion (about 11 trillion yen) since the referendum on leaving the EU."

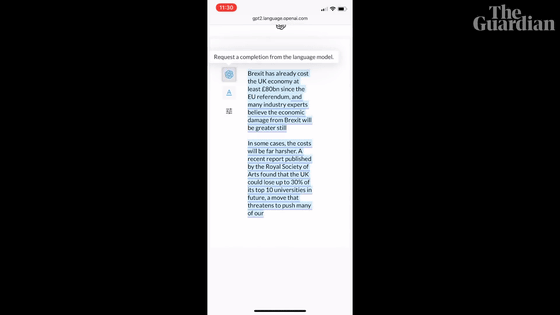

GPT-2 then generates the rest of the text automatically. Everything underlined was written by GPT-2. Its continuation includes: "In addition, many industry experts believe that the economic losses caused by Brexit will grow further."

Given just a short opening sentence, GPT-2 produces a continuation that follows on naturally.

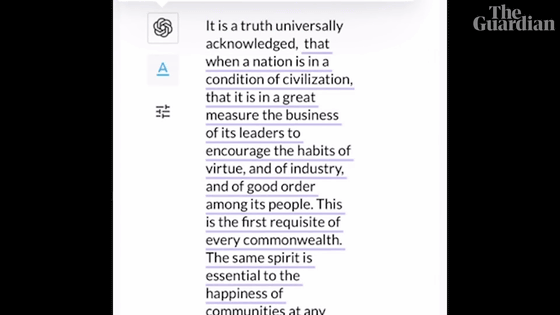

Next, the opening of Jane Austen's novel "Pride and Prejudice" is entered.

GPT-2 then generated a passage completely different from the original. In a sense, GPT-2 produced a fake "Pride and Prejudice."

Given a few words as input, GPT-2 predicts the text that should come next and generates it automatically. The researchers who developed it are worried about the risks because its output is high in quality and could be put to many different uses. GPT-2 easily generates plausible text, and The Guardian wrote that it rarely shows the weaknesses of existing AI text generators, such as "forgetting what it has written halfway through a paragraph" or "mangling the structure of long sentences."
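The next-word loop described above can be illustrated with a deliberately tiny sketch. GPT-2 itself is a large Transformer neural network, not a word-count table; the bigram counter below is a swapped-in stand-in, and the names `train_bigram` and `generate` are hypothetical. It only shows the autoregressive idea: predict one word from what has been written so far, append it, and repeat.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return dict(follows)


def generate(follows: dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Autoregressive loop: predict the next word from the last one, append it, repeat.

    Uses greedy decoding (always the most frequent follower); ties go to the
    follower seen first in the corpus.
    """
    words = prompt.split()
    if not words:
        return ""  # nothing to continue from
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no known continuation, so stop early
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    corpus = (
        "the economy will grow . "
        "the economy will shrink . "
        "experts believe the economy will shrink ."
    )
    model = train_bigram(corpus)
    print(generate(model, "experts believe", max_new_words=4))
    # prints: experts believe the economy will shrink
```

With this toy corpus, "shrink" is chosen over "grow" simply because it follows "will" more often. A model like GPT-2 replaces the count table with learned probabilities conditioned on a much longer stretch of preceding text, which is what lets it stay coherent across whole paragraphs.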

According to The Guardian, GPT-2 is groundbreaking in two ways. The first is its scale. OpenAI's research director Dario Amodei said that "[GPT-2's model] is 12 times bigger [than the previous state-of-the-art AI model], and the dataset is 15 times bigger and much broader." GPT-2 was trained on a dataset of about 10 million articles collected by following links that had received three or more votes on the online forum Reddit. The dataset totals 40 GB, roughly equivalent to 35,000 copies of the novel "Moby Dick."
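The dataset-selection rule described above (keep pages linked from Reddit posts with three or more votes) can be sketched as a simple filter. This is a hypothetical illustration, not OpenAI's actual pipeline: the `RedditLink` type, its `votes` field, the `select_training_urls` function, and the de-duplication step are all assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass

MIN_VOTES = 3  # threshold reported in the article: three or more votes


@dataclass(frozen=True)
class RedditLink:
    """Hypothetical record: an outbound URL and the votes its Reddit post received."""
    url: str
    votes: int


def select_training_urls(links: list[RedditLink]) -> list[str]:
    """Keep each URL once, in first-seen order, if its post reached MIN_VOTES."""
    seen: set[str] = set()
    selected: list[str] = []
    for link in links:
        if link.votes >= MIN_VOTES and link.url not in seen:
            seen.add(link.url)
            selected.append(link.url)
    return selected


if __name__ == "__main__":
    links = [
        RedditLink("https://a.example/story", 5),
        RedditLink("https://b.example/post", 2),   # below threshold, dropped
        RedditLink("https://a.example/story", 12),  # duplicate URL, dropped
        RedditLink("https://c.example/page", 3),
    ]
    print(select_training_urls(links))
    # prints: ['https://a.example/story', 'https://c.example/page']
```

Using the vote count as a filter is a cheap way to favour pages that at least some human readers found worth sharing; the text of the surviving pages would then be downloaded and cleaned before training.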

GPT-2 is also far more versatile than existing text generators. When its input text is structured appropriately, it can carry out tasks such as translation and summarization, and it can pass simple reading comprehension tests. GPT-2 reportedly performs as well as or better than AI models built specifically for tasks such as translation and summarization, another major advantage that conventional text-generation models lack.

According to Alex Hern, an editor at The Guardian, the newspaper text below was generated by GPT-2 with no manual editing. He also revealed that it was generated in just 15 seconds.

In print, we used OpenAI's tool to write its own news story about itself. This is what came out of the very first pass, unedited. pic.twitter.com/detjpnAZLv

- Alex Hern (@alexhern) February 15, 2019

However, because the text GPT-2 generates is of such high quality, OpenAI wants time to investigate in more detail what a malicious user could do with it, and has decided to postpone GPT-2's release. Jack Clark, OpenAI's head of policy, said: "If you can't anticipate all the abilities of a model, you have to probe it to see what it can do. There are many more people than us who are better at thinking about what it can do maliciously."

To gauge GPT-2's potential accurately, OpenAI made a few modest adjustments to it, creating versions that could generate spam and fake news. Because GPT-2 is trained only on text from the internet, it is not difficult to turn it into a generator of conspiracy theories and bigoted text.

OpenAI's Clark explained the need for clear rules around new technology: "The cost of implementing technology keeps falling, and the rules by which we control technology have fundamentally changed. We're not saying that what we are doing is right, and we're not saying 'this is the way.' We are just trying to develop more rigorous thinking. In other words, it's like trying to build the road while we are crossing it."
