The Internet Archive secures Wikipedia's objectivity by repairing millions of broken links

The Internet Archive, which aims to preserve all kinds of information on the Internet, has been restoring broken URL links so that the information written in Wikipedia stays objective and verifiable.

More than 9 million broken links on Wikipedia are now rescued | Internet Archive Blogs

https://blog.archive.org/2018/10/01/more-than-9-million-broken-links-on-wikipedia-are-now-rescued/

One of the Internet Archive's goals is to "make the web trustworthy". In pursuit of this goal, the Internet Archive works with the online encyclopedia Wikipedia to help ensure its reliability.

Because anyone can edit Wikipedia, its accuracy depends on third parties being able to verify each source, for example through the source's cited URL. However, it is not uncommon for a source web page to be taken down later for some reason. It then becomes a "broken link" that returns a "404" error page, and once the source disappears, the accuracy and objectivity of the Wikipedia content are lost.

To address this, the Internet Archive teamed up with two people: Maximilian Doerr, a consultant to the Internet Archive known as "Cyberpower" in the Wikipedia community, and Stephen Balbach, a long-time volunteer article editor in the Wikipedia community. Together they developed and now operate a program that finds broken links on Wikipedia pages and replaces them with links to backup copies saved in the Internet Archive.

An example of a Wikipedia source URL that has become a broken link.

The Internet Archive preserves pages as they were before they disappeared. By pointing to these archived copies, Wikipedia lets users check and verify the content even after the original source is gone.

The Internet Archive's software "IABot" scans Wikipedia in 22 languages for broken links that return 404 errors. When it finds one, IABot looks for an archived copy of the page in the Wayback Machine and other archives. It then replaces the broken URL with a link to the archived copy, keeping Wikipedia verifiable.
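The rescue loop described above can be sketched in Python. This is a minimal illustration under stated assumptions, not IABot's actual code: it uses the Wayback Machine's public Availability API (`https://archive.org/wayback/available?url=...`), which returns the closest archived snapshot as JSON, and it treats a page as broken only when the server answers HTTP 404. The helper names `returns_404`, `fetch_text`, `availability_query`, `closest_snapshot` and `rescue_link` are hypothetical.

```python
import json
from typing import Callable, Optional
from urllib.error import HTTPError, URLError
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Public Wayback Machine Availability API endpoint.
AVAILABILITY_API = "https://archive.org/wayback/available"


def returns_404(url: str, timeout: float = 10.0) -> bool:
    """Hypothetical checker: a link counts as broken only if the server answers 404."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout):
            return False
    except HTTPError as err:
        return err.code == 404
    except URLError:
        # DNS or connection failure is not treated as proof the page is gone.
        return False


def fetch_text(url: str, timeout: float = 10.0) -> str:
    """Hypothetical fetcher: download a URL and decode the body as UTF-8."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def availability_query(url: str) -> str:
    """Build the Availability API request URL for a (possibly dead) page."""
    return f"{AVAILABILITY_API}?{urlencode({'url': url})}"


def closest_snapshot(api_response: str) -> Optional[str]:
    """Return the closest archived snapshot URL from the API's JSON, or None."""
    closest = json.loads(api_response).get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url")
    return None


def rescue_link(
    url: str,
    is_dead: Callable[[str], bool],
    fetch: Callable[[str], str],
) -> str:
    """Replace a dead URL with its archived copy; otherwise keep the original URL."""
    if not is_dead(url):
        return url  # live link: leave the citation alone
    snapshot = closest_snapshot(fetch(availability_query(url)))
    return snapshot or url  # no snapshot found: keep the dead URL for manual review
```

With network access, `rescue_link(url, returns_404, fetch_text)` would return a `web.archive.org` snapshot URL for a dead page that has been archived, and the original URL otherwise. The checker and fetcher are passed in as parameters so the decision logic can be tested offline; a real bot would additionally have to rewrite the citation markup in the article.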

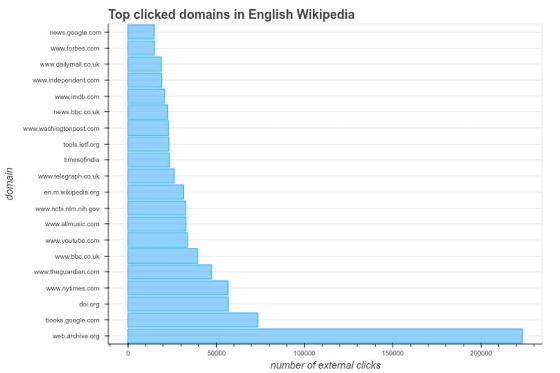

According to a Wikimedia Foundation survey, which looked at how users follow external links in Wikipedia, the Internet Archive's Wayback Machine receives by far the most external-link clickthroughs. In the 10-day trial survey, it received three times as many clickthroughs as Google Books (books.google.com).

Over three years of operation, IABot has fixed 6 million external links that had become 404s, and the Wikipedia community has corrected another 3 million links by hand, for a total of 9 million repaired broken links.

According to the Internet Archive, its concern about "loss of verifiability due to broken links" grew out of a problem revealed in 2013: 49% of the pages cited in Supreme Court rulings had become broken links. This taught the Archive the importance of reliably capturing information before links break.

Going forward, the Archive says it must deal not only with broken links but also with "content drift", in which a page's content changes over time. Wikipedia will need a way to let users know when a linked page now shows something different from what the article's author intended to cite.


in Software, Web Service, Posted by darkhorse_log