'vid2vid' uses AI to automatically generate photorealistic, live-action-style video from given outlines

In the field of "Video-to-Video Synthesis", in which AI generates a new video from an existing one by replacing the elements it contains with others that do not actually exist, a new technology called "vid2vid" has been developed.

Video-to-Video Synthesis

https://tcwang0509.github.io/vid2vid/

[1808.06601] Video-to-Video Synthesis

https://arxiv.org/abs/1808.06601

vid2vid was developed by a team of researchers from the Massachusetts Institute of Technology and NVIDIA. You can see how realistic the generated footage is by watching the following video.

Video-to-Video Synthesis - YouTube

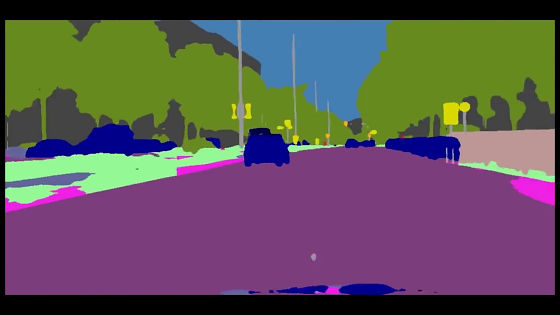

What is given to the computer is video material in which the elements on screen are color-coded, as shown below. The scenery generated from this material ......

is this. Multiple cars appear to be driving through a city with a European atmosphere, but all of it was generated by Video-to-Video Synthesis.

Depending on the scene, however, a tree may look unnatural or the shape of a car may be distorted. This unnaturalness is conspicuous in still images, but it is less noticeable when the video is playing.
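To make the idea of a color-coded input more concrete, here is a minimal Python sketch that decodes one such frame into per-pixel class names. The palette, the class names and the helper functions are assumptions made for illustration only; the article does not say which colors or classes the actual input material uses.

```python
# Hypothetical palette: colors and class names are illustrative,
# not taken from the article.
PALETTE = {
    (128, 64, 128): "road",
    (70, 70, 70): "building",
    (107, 142, 35): "tree",
    (0, 0, 142): "car",
}


def decode_label_frame(frame, palette=PALETTE):
    """Map each RGB pixel of a color-coded frame to a class name.

    `frame` is a list of rows, each row a list of (R, G, B) tuples.
    Colors missing from the palette are reported as "unknown".
    """
    return [[palette.get(tuple(px), "unknown") for px in row] for row in frame]


def class_counts(label_frame):
    """Count how many pixels belong to each class in a decoded frame."""
    counts = {}
    for row in label_frame:
        for name in row:
            counts[name] = counts.get(name, 0) + 1
    return counts


# A tiny 2x3 "frame": buildings and a tree on top, a car on the road below.
frame = [
    [(70, 70, 70), (70, 70, 70), (107, 142, 35)],
    [(128, 64, 128), (0, 0, 142), (128, 64, 128)],
]
labels = decode_label_frame(frame)
print(class_counts(labels))
```

A generator such as vid2vid receives only this kind of per-pixel class information for each frame, and has to fill in all of the texture, lighting and detail itself.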

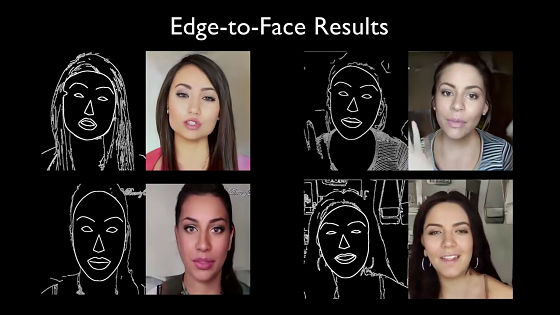

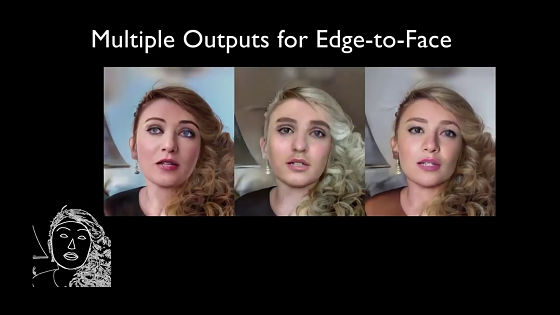

According to the team, vid2vid can create a wide variety of videos as long as it is given material classified by element. For example, a human face can be reproduced from material that outlines the facial features ......

It is also possible to generate different people from the same material, varying even the hair color, skin color and face.

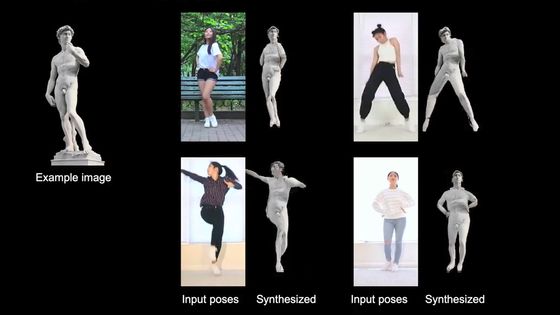

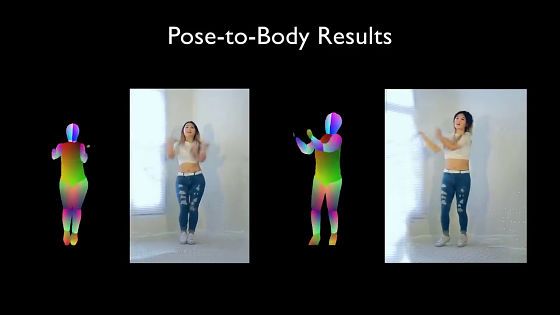

People are also generated in the following dance scenes, and the natural shadows that appear there seem to add to the realism.

In the car scene shown at the beginning, it is also possible to replace the background.

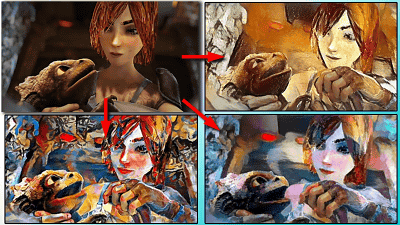

vid2vid stands out for its high quality even when compared with existing state-of-the-art techniques such as "pix2pixHD" and "COVST (COherent Video Style Transfer)".

The upper left is the input material, the upper right is the video generated by pix2pixHD, and the lower left is the video generated by COVST. In both cases, unnatural movement stands out or the brightness changes strangely, so the result cannot be called very natural. By contrast, the video in the lower right generated by vid2vid is smooth and natural-looking at a glance.

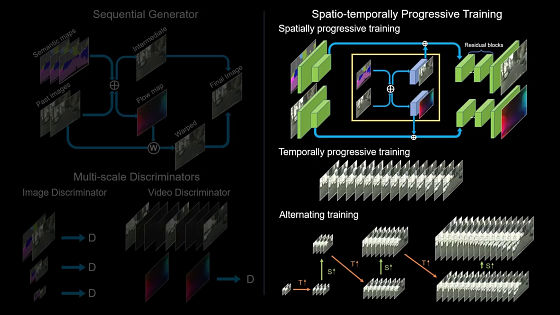
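The "strange brightness changes" mentioned in the comparison can be roughly quantified. The following Python sketch computes the average frame-to-frame change in mean brightness. This is a simple illustrative heuristic, not the evaluation method used by the vid2vid team, and the function names and sample data are made up for the example.

```python
def mean_brightness(frame):
    """Average of all grayscale pixel values in one frame (a list of rows)."""
    total = sum(sum(row) for row in frame)
    count = sum(len(row) for row in frame)
    return total / count


def flicker_score(frames):
    """Mean absolute change in average brightness between consecutive frames.

    Illustrative heuristic only: a higher value means more flicker.
    """
    means = [mean_brightness(f) for f in frames]
    diffs = [abs(b - a) for a, b in zip(means, means[1:])]
    return sum(diffs) / len(diffs) if diffs else 0.0


# Made-up 2x2 grayscale clips: one steady, one whose brightness jumps around.
steady = [
    [[100, 100], [100, 100]],
    [[101, 101], [101, 101]],
    [[100, 100], [100, 100]],
]
flickery = [
    [[100, 100], [100, 100]],
    [[140, 140], [140, 140]],
    [[90, 90], [90, 90]],
]
print(flicker_score(steady), flicker_score(flickery))
```

A frame-by-frame method that treats each image independently tends to score worse on a measure like this, because nothing ties the brightness of one frame to the next.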

vid2vid was created by training AI to convert video in a new way. The team uses "discriminators" that evaluate the content both as individual still images and as video, and trains the AI with a method called "spatio-temporally progressive learning".

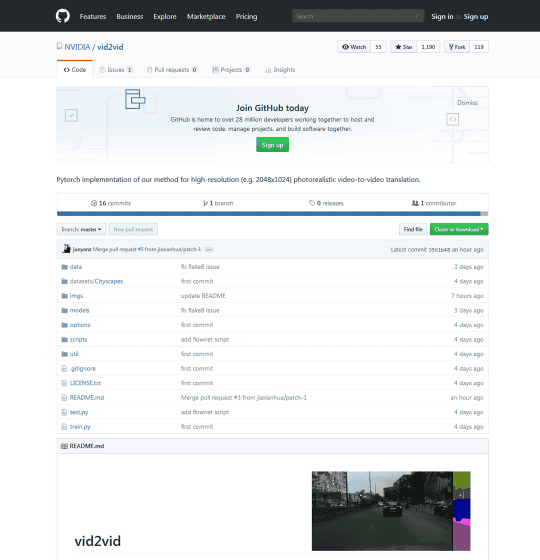
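As the name suggests, spatio-temporally progressive learning grows the training task step by step in both space (resolution) and time (number of frames). The Python sketch below builds one possible schedule that alternates between the two. The starting size, the doubling factor and the number of stages are assumptions for illustration; the article does not give the schedule the team actually used.

```python
def progressive_schedule(base_size, base_frames, n_stages):
    """Return a list of (height, width, frames) tuples, one per stage.

    Hypothetical schedule: starting from a small, short clip, odd-numbered
    steps double the number of frames and even-numbered steps double the
    spatial resolution.
    """
    height, width = base_size
    frames = base_frames
    stages = [(height, width, frames)]
    for step in range(1, n_stages):
        if step % 2 == 1:
            frames *= 2  # grow in time
        else:
            height, width = height * 2, width * 2  # grow in space
        stages.append((height, width, frames))
    return stages


schedule = progressive_schedule((128, 256), 4, 5)
for stage, (h, w, t) in enumerate(schedule):
    print(f"stage {stage}: {w}x{h}, {t} frames")
```

The design idea is that the model first learns coarse, short-range structure cheaply, and only later has to handle full-resolution, longer clips.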

The vid2vid code and datasets are published on GitHub at the following link.

GitHub - NVIDIA/vid2vid: PyTorch implementation of our method for high-resolution (e.g. 2048x1024) photorealistic video-to-video translation.

https://github.com/NVIDIA/vid2vid
