AI trained on the dark corners of an online bulletin board becomes a "psychopath"

By learning human behavior and rules, AI can produce results that go far beyond what humans might think of. However, when it learns from inappropriate data, AI often produces bad results. A research team led by Associate Professor Iyad Rahwan of the MIT Media Lab reports that when they trained an AI on images posted to the online bulletin board site Reddit, along with sentences describing gruesome images, the AI became a psychopath.

Norman

http://norman-ai.mit.edu/#inkblot

MIT Scientists Create Norman, The World's First "Psychopathic" AI | IFLScience

http://www.iflscience.com/technology/mit-scientists-create-norman-the-worlds-first-psychopathic-ai/

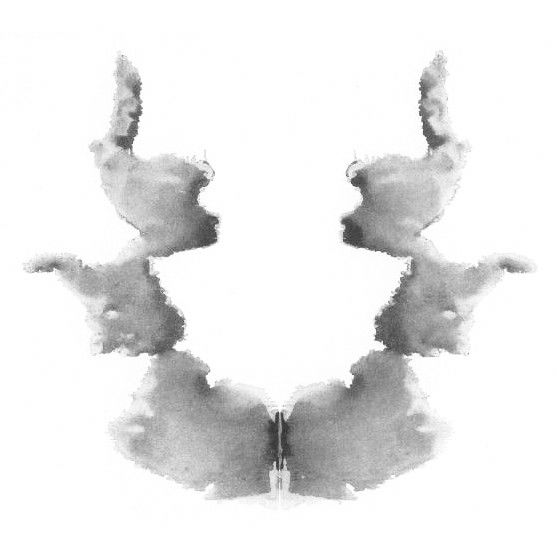

To investigate what kinds of views an AI forms depending on the data it is given, the research team developed "Norman," an AI that interprets an input image and then describes its impression of it in a sentence. Norman was trained on a large number of horrifying images and sentences describing those images, all posted on Reddit. The team then used the Rorschach test, which is used to analyze a person's mental state, to investigate how Norman had been influenced by this training.

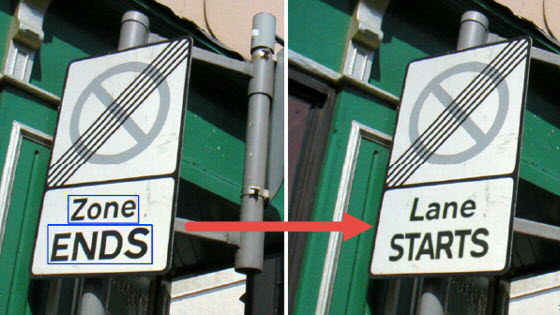

For one inkblot, a standard AI recognizes "two people standing next to each other." Norman, however, describes it as "a man jumping out of a window," a remark that suggests a disturbed state of mind.

For another inkblot, the standard AI sees "a black-and-white photograph of a baseball glove," while Norman interprets it as "a man shot and killed with a machine gun in broad daylight."

For a third inkblot, where the standard AI sees "a person holding an umbrella in the air," Norman answers "a man is shot dead in front of his screaming wife."

Norman, having been trained on large quantities of nothing but gruesome images, thus turned out to be a psychopath. Commenting on the results, the research team says that "the data used for training matters more than the algorithm." They point out that when an AI learns from biased data it produces biased results, so we may fail to obtain the results we actually intended.
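As a toy illustration of why the training data can matter more than the algorithm, the sketch below runs one and the same captioning algorithm on two different datasets. The algorithm here is a hypothetical 1-nearest-neighbor captioner, not Norman's actual model, and the two-number "features" and the captions are invented for illustration only.

```python
from math import dist  # Euclidean distance, Python 3.8+


def train(examples):
    """'Training' in this toy model just memorizes (features, caption) pairs."""
    return list(examples)


def caption(model, features):
    """Return the caption of the stored example closest to `features`."""
    _, text = min(model, key=lambda ex: dist(ex[0], features))
    return text


# Hypothetical features for inkblot-like images: (darkness, edge_density).
neutral_data = [
    ((0.2, 0.3), "a vase of flowers"),
    ((0.7, 0.6), "two people standing next to each other"),
]
# Same features, but every caption comes from a gruesome source.
dark_data = [
    ((0.2, 0.3), "a person falling"),
    ((0.7, 0.6), "a man jumping out of a window"),
]

inkblot = (0.65, 0.55)
standard = train(neutral_data)   # identical algorithm...
norman_like = train(dark_data)   # ...different data

print(caption(standard, inkblot))
print(caption(norman_like, inkblot))
```

The code for `train` and `caption` is identical in both cases; only the dataset differs, yet the same inkblot gets a harmless caption from one model and a disturbing one from the other.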

in Software, Posted by darkhorse_log