DeepMind develops an algorithm that overcomes "catastrophic forgetting," a weakness of neural networks

DeepMind, Google's AI development division, is known for "DQN," which improves its skills as it learns to play games, and "AlphaGo," which defeated professional Go players. It has now developed "Elastic Weight Consolidation," an algorithm that lets a neural network keep learning continuously by retaining what it has already learned. The algorithm is meant to overcome a drawback that conventional neural networks face.

Overcoming catastrophic forgetting in neural networks

http://www.pnas.org/content/early/2017/03/13/1611835114.abstract

Enabling Continual Learning in Neural Networks | DeepMind

https://deepmind.com/blog/enabling-continual-learning-in-neural-networks/

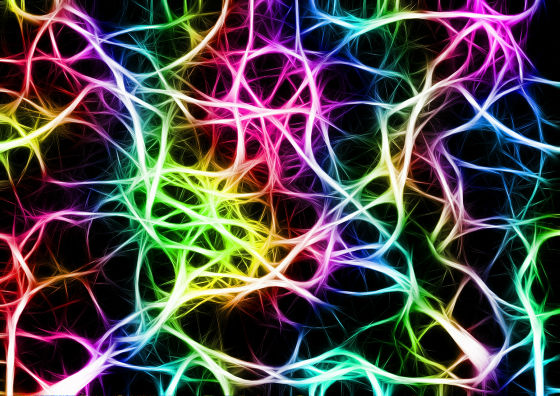

Deep learning is known as one of the most successful machine learning techniques for tasks such as language translation, image classification, and image generation. However, a deep neural network is designed to handle a task only when a large amount of data is supplied all at once, and when the network learns a specific task, its parameters are optimized for that task. As a result, when a new task is introduced, the knowledge the network has already acquired gets overwritten. This phenomenon, called "catastrophic forgetting," is considered one of the limitations of neural networks.

In contrast to this structural limitation, the human brain learns incrementally: it acquires skills one at a time and applies what it already knows to solve new problems. Inspired by this ability of humans and other mammals to remember past skills and apply them to new problems, DeepMind developed "Elastic Weight Consolidation (EWC)," a neural network algorithm that can retain how it solved earlier problems and apply that knowledge to problems it encounters later.

Each time the network learns a task, EWC scores how important each of its connections (weights) is to that task. Each weight is then protected from being overwritten in proportion to that importance score. In other words, unimportant weights can still be overwritten, just as in conventional neural networks, but weights that matter for earlier tasks are protected. The network can therefore learn new tasks without destroying what it has already learned and without paying the large computational cost of relearning it.
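The PNAS paper linked above writes this protection as a quadratic penalty added to the loss of a new task B: L(θ) = L_B(θ) + Σ_i (λ/2) · F_i · (θ_i − θ*_A,i)², where θ*_A are the weights after learning task A, F_i is the diagonal of the Fisher information matrix (the importance score), and λ sets how much the old task matters. The following is a minimal pure-Python sketch of that penalty and of a diagonal Fisher estimate. The function names are hypothetical, and estimating F_i as the mean squared gradient of the log-likelihood over a sample is an assumed "empirical Fisher" approximation, not necessarily how DeepMind computed it.

```python
def ewc_penalty(theta, theta_star_a, fisher, lam):
    """Quadratic EWC penalty: sum_i (lam / 2) * F_i * (theta_i - theta*_A,i)^2.

    Weights with a large Fisher value F_i (important for task A) are pulled
    strongly back toward their task-A values; weights with F_i near 0 move freely.
    """
    return sum(
        0.5 * lam * f * (t - t_a) ** 2
        for t, t_a, f in zip(theta, theta_star_a, fisher)
    )


def diagonal_fisher(grad_log_likelihood, samples, theta):
    """Empirical diagonal Fisher (assumption): mean squared gradient of log p(x | theta).

    grad_log_likelihood(theta, x) is a hypothetical callback that must return
    one partial derivative per weight.
    """
    fisher = [0.0] * len(theta)
    for x in samples:
        for i, g in enumerate(grad_log_likelihood(theta, x)):
            fisher[i] += g * g
    return [f / len(samples) for f in fisher]


def ewc_loss(loss_b, theta, theta_star_a, fisher, lam):
    """Total objective minimized while training on task B with EWC."""
    return loss_b(theta) + ewc_penalty(theta, theta_star_a, fisher, lam)


# Hypothetical example: weight 0 is important to task A (F = 10), weight 1 is not (F = 0.1).
theta_star_a = [1.0, -2.0]
fisher = [10.0, 0.1]
print(ewc_penalty([2.0, -2.0], theta_star_a, fisher, lam=1.0))  # moves weight 0 by 1: 0.5*10*1 = 5.0
print(ewc_penalty([1.0, 0.0], theta_star_a, fisher, lam=1.0))   # moves weight 1 by 2: 0.5*0.1*4 = 0.2
```

Moving the important weight by 1 costs 25 times more than moving the unimportant weight by 2, which is the "protection in proportion to importance" described above; λ scales the whole penalty.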

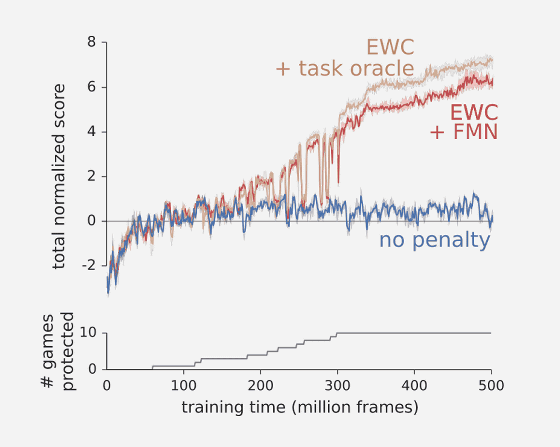

DeepMind tested the effectiveness of EWC on Atari games. Learning a single game from the score alone is already difficult, and learning several games in sequence is harder still, because each game requires its own strategy. A conventional neural network without EWC (the blue line in the graph) suffers catastrophic forgetting: once it moves on from a game, what it learned is overwritten and its score does not rise. With EWC enabled, DeepMind reports, the network did not forget so easily and kept learning even as the games changed one after another.
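The forgetting effect can be reproduced on a much smaller scale with a hypothetical two-weight toy problem (not DeepMind's Atari setup). Task A depends only on weight θ0, while task B depends on θ0 + θ1. Plain gradient descent on B after A drags θ0 away from A's solution; adding the EWC penalty, with diagonal Fisher [1, 0] for task A, lets the network solve B by moving θ1 instead. The task losses, learning rate, step count, and λ are all illustrative assumptions.

```python
def loss_a(theta):
    # Task A: only theta[0] matters, optimum at theta[0] = 1.
    return 0.5 * (theta[0] - 1.0) ** 2


def grad_a(theta):
    return [theta[0] - 1.0, 0.0]


def loss_b(theta):
    # Task B: depends on theta[0] + theta[1], optimal wherever the sum is -1.
    return 0.5 * (theta[0] + theta[1] + 1.0) ** 2


def grad_b(theta):
    s = theta[0] + theta[1] + 1.0
    return [s, s]


def train(theta, grad_fn, lr=0.05, steps=2000):
    """Plain gradient descent."""
    for _ in range(steps):
        theta = [t - lr * g for t, g in zip(theta, grad_fn(theta))]
    return theta


def learn_a_then_b(use_ewc, lam=10.0):
    theta_a = train([0.0, 0.0], grad_a)  # learn task A first
    # For this Gaussian-likelihood toy, A's diagonal Fisher is exactly [1, 0]:
    # theta[0] is important to A, theta[1] is irrelevant to it.
    fisher = [1.0, 0.0]

    def grad_total(theta):
        g = grad_b(theta)
        if use_ewc:
            # Add the gradient of sum_i (lam / 2) * F_i * (theta_i - theta_a_i)^2.
            g = [gi + lam * f * (t - ta)
                 for gi, f, t, ta in zip(g, fisher, theta, theta_a)]
        return g

    theta_b = train(theta_a, grad_total)  # then learn task B
    return theta_b, loss_a(theta_b), loss_b(theta_b)


for use_ewc in (False, True):
    theta, la, lb = learn_a_then_b(use_ewc)
    print(f"EWC={use_ewc}: theta={[round(t, 3) for t in theta]}, "
          f"loss_A={la:.3f}, loss_B={lb:.3f}")
```

Without EWC, θ ends near [0, −1] and task A's loss rises to about 0.5 even though task B is solved; with EWC, θ ends near [1, −2] and both losses end near zero. In a real network the Fisher values must be estimated, and the tasks often cannot both be solved perfectly, so λ trades off retaining old tasks against learning the new one.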

Current computers cannot adapt to incoming data and learn in real time, but according to DeepMind, this research shows that catastrophic forgetting in neural networks can be avoided. DeepMind describes the work as a first step toward programs that can learn more flexibly and efficiently.

in Software, Posted by darkhorse_log