How should humans think about it when a robot or AI becomes "an entity that deserves rights"?

As research on computer-based artificial intelligence (AI) progresses, people are increasingly being asked to think about what a "personality" is. If the kind of "ego" that humans have comes into existence inside computers, should those computers be given "rights"? Kurzgesagt - In a Nutshell has published a video that asks this question and explains the various problems it raises.

Do Robots Deserve Rights? What if Machines Become Conscious? - YouTube

A future may come in which your toaster anticipates what kind of toast you want to eat.

The toaster searches the Internet on its own for new and exciting kinds of toast.

It might also ask you about your day and chat about new developments in toast technology.

At what point, then, does the toaster become "the same as a human"? When does it start to seem that the toaster "may have feelings"? And if it does, would switching it off amount to "killing" it?

Artificial intelligence is already all around us. It makes sure stores keep enough items in stock and serves online advertisements tailored to each individual.

However, it is quite conceivable that we will one day have to deal with a situation in which the boundary between an artificially created personality and a real one becomes blurred.

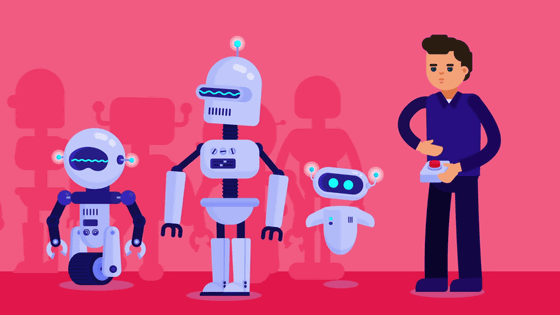

Is there a machine that deserves "rights" at the moment? Probably not yet. But if one did appear, we would not be ready for it.

Most arguments that humans or animals have rights rest on whether or not they are conscious. Unfortunately, no one knows exactly what consciousness is.

Whatever its exact definition, we understand consciousness intuitively, because we experience it ourselves. We are aware of ourselves and our surroundings, and we know what it is like to be unconscious.

Some neuroscientists believe that "consciousness" could arise in sufficiently advanced systems.

Following that idea, if your toaster's hardware were powerful enough, it might develop an ego.

If that happened, would you need to give the toaster rights?

Rights are granted to conscious beings because conscious beings have the capacity to suffer.

A robot will not suffer, and it will stay that way unless we program it to.

Human rights are deeply tied to the way our bodies are programmed. For example, we hate pain. That is because our brains evolved to keep us alive: pain stops us from touching fire and drives us to flee from predators.

That is why we came up with "rights": to protect ourselves from things that cause us pain.

But would a toaster, which cannot move in the first place, mind being locked in a cage?

If it has no fear of death, would it mind being taken apart?

If it has no self-esteem, would it mind being insulted?

On the other hand, what if we programmed robots to feel pain and emotions, to prefer joy over suffering and fairness over injustice, and to be aware of it? Would that make them human enough?

Many technologists believe that an explosion in technology will occur once artificial intelligence can learn to create new artificial intelligence that is better than itself.

Evolutionary biology has shown that the ability to feel pain is necessary for almost all living things. What if artificial intelligence likewise concludes that it needs to program pain into itself? Would such a robot deserve rights?

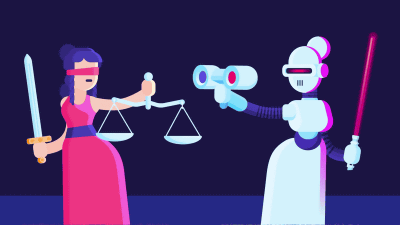

But for now, perhaps we should worry less about the dangers that very smart robots might pose to us and more about the dangers we might pose to them.

Humanity's identity rests on the idea that we are special and unique, an exception with the right to rule over nature.

Historically, humans have denied that other beings feel pain the way we do.

In the midst of the Scientific Revolution, Rene Descartes, often called the father of modern philosophy, claimed that animals were mere automatons (in other words, "robots"). By that reasoning, hurting a rabbit was no more immoral than punching a stuffed animal.

And in many of the greatest crimes against humanity, perpetrators justified stripping others of their rights by claiming that "the victims are closer to animals than to civilized human beings."

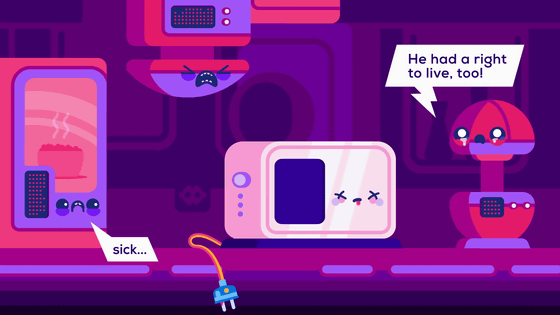

Even more problematic is that denying robots rights would give us an economic advantage.

If we could force conscious artificial intelligence to serve us, the potential for profit would be enormous.

We have done this before: violence was used to force people to work.

And we justified the coercion with claims such as "God says that we need the money."

Slave owners insisted that slavery benefited the enslaved as well: they had a roof over their heads and could be taught Christianity.

Men who opposed women's suffrage insisted that "women actually want to leave difficult decisions to men."

Farmers slaughter animals while they are young to turn them into food, yet justify it by saying "we feed them and look after them."

If robots develop an ego, there will no doubt be people arguing that "they should remain without rights."

Artificial intelligence raises serious problems at the boundaries of philosophy. Does a robot with senses have an ego, and does it deserve rights? It also forces us to ask fundamental questions: "What makes us human?" and "On what grounds do we have rights?" Whatever we think, we may need to answer these questions in the near future.

What will robots demanding rights reveal to us about ourselves?

This problem is explained in detail in the Kurzgesagt - In a Nutshell YouTube video at the top of this article. The subtitles can be switched to Japanese.
