How to achieve 10,000 concurrent users on a single server machine, and how to think about it

At CEDEC 2012, the session "What kind of server configuration does Dragon Quest X use to achieve 'one world'?" covered the server configuration behind the online title Dragon Quest X. In another session, Hyunjik Bae, CEO of Nettention, maker of the game server and network engine "ProudNet", gave a lecture on real-time network synchronization for large numbers of players, drawing on his experience developing and operating servers for Korean online games.

How to realize 10,000 simultaneous MMO users with one server machine | CEDEC 2012 | Computer Entertainment Developers Conference

http://cedec.cesa.or.jp/2012/program/AB/C12_I0284.html

Hyunjik Bae:

Hello. I am the CEO of Nettention, a game engine company.

Interpretation:

From here I will speak in Korean. There is a lot of know-how involved in building high-performance servers, but today I will introduce only one piece of it. It is only one, but I would like to explain it in depth. To follow this story you first need the basics of network programming, so I will start there.

First, let me introduce myself briefly. Since I was a child I have loved games such as "Double Dragon", "Ikari", and "OutRun". These days I spend money on mobile social games. I also tried making a gal game (dating sim) and failed, because the scenario writer had no romantic experience. I now live happily with a very beautiful wife and two children. I am also learning golf with my grandmother. I like playing the piano, and I like traveling.

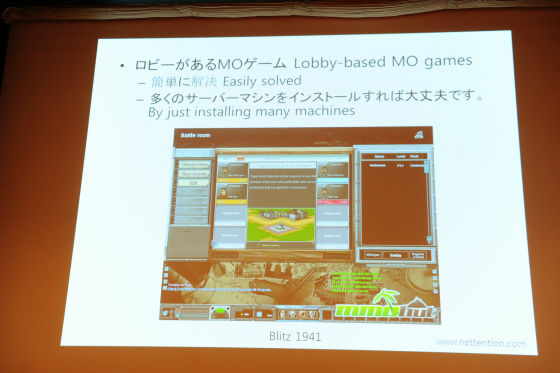

Let me get to the main topic. From the server program's point of view, there are two kinds of online games: MO games with a lobby, and MMO games with a persistent world. A typical lobby-based MO game is an FPS, and a representative MMO game is "Ragnarok Online". A lobby-based MO game is easy to build even with many simultaneous connections, because the number of players in one room is small. You only need to create many rooms with few players each, so you can scale simply by adding servers. What you are looking at now is the lobby of the online game "Blitz 1941". It is a 100-versus-100 tank battle game that I developed eight years ago.

An MMO can be made as easily as an MO game. Divide one world into 20 sub-worlds and treat each sub-world as one room, with 1,000 players in each room. That way a persistent MMO world can be built just as easily, and one logical server can handle 20,000 players. If you assume each server machine has a 4-core CPU, five physical servers are enough for one logical server. Putting 1,000 players into one room is very easy. It can be done even with a single-threaded game server; you only need to run as many server processes as there are CPU cores.
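
The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function name `plan_capacity` and its parameters are hypothetical, and it assumes one single-threaded room process per CPU core, as the talk describes.

```python
import math

def plan_capacity(sub_worlds, players_per_room, cores_per_machine):
    """Return (total players, physical machines needed).

    Assumes each sub-world is one single-threaded room process
    and each CPU core runs exactly one such process.
    """
    total_players = sub_worlds * players_per_room
    machines = math.ceil(sub_worlds / cores_per_machine)
    return total_players, machines

# The figures from the talk: 20 sub-worlds, 1,000 players each, 4 cores per machine.
players, machines = plan_capacity(20, 1000, 4)
print(players, machines)
```

With these numbers the result is 20,000 players on five physical machines.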

Next, this screenshot shows the MMO social game "OZ World".

It is a game I made 13 years ago. Over the last few years CPUs have not become much faster; instead, the number of CPU cores has increased. Meanwhile, players' demands are very high, and the work the game server does for each concurrent user keeps growing. How about making an MMORPG with huge numbers of monsters, like "Diablo 3"? It would also be interesting if an MMORPG version of something like "Sangoku Musou" came out. This screenshot is a sample MMORPG, still under development, built with my server engine ProudNet. The number of monsters in this game is far larger than the number of players, as in "Diablo 3" or "Sangoku Musou". In other words, it is a massacre game. In such games, as the number of players increases, the amount of processing required of the server also increases greatly. A single-threaded server will not be quite enough.

A game server that merely holds many simultaneous connections is easy to build, because all it does is network connection handling. It is also not difficult for a client to exchange messages one-to-one with a server, as long as you make no mistakes. In an MMO game, however, the server has to send each message to many clients. Things get very difficult when the work that must be processed in one room is more than one CPU can handle. Once this limit is exceeded, everything becomes very complicated.

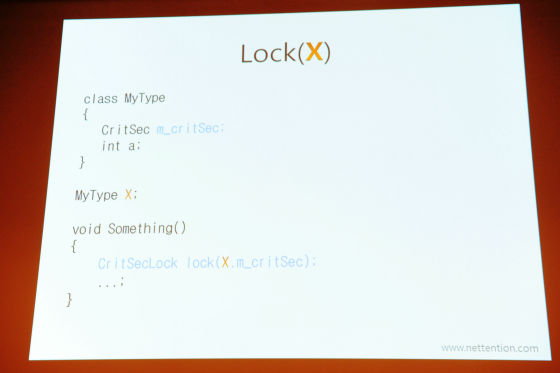

I will now talk about that complicated processing, but first there are some terms we must define. The first, which I will use a lot later, is "Lock(X)".

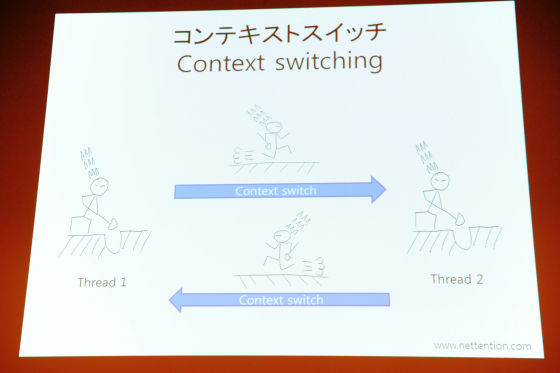

It means locking the data X. Locking data prevents other threads from reading or writing the same data at the same time. As you all know, critical sections and mutexes play this role. Now suppose your boss tells you to do two jobs at the same time. What happens? I am paid a salary, so of course I have to do it. Normally I would finish one job and then start the next, but what if the boss is very insistent and says both must progress at once? I think I would write my resignation letter. The CPU, however, foolishly alternates between the two jobs: a little of the first, a little of the second, bit by bit. Going back and forth between jobs like this is called a "context switch".

However, a context switch takes a very long time. The CPU has to save its current state in order to work on another thread, and saving and restoring that state requires a very complicated operation. So in my opinion, the fewer context switches the better.

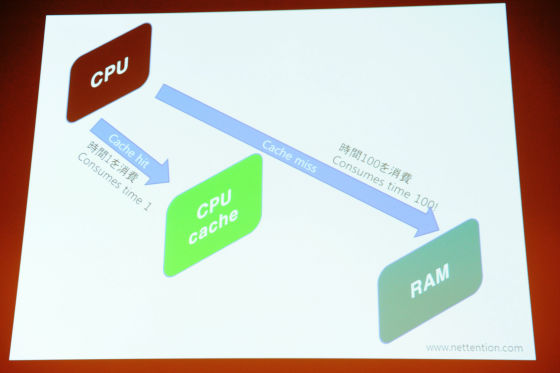

Another thing that takes time is the CPU reading and writing memory. That is why CPUs have caches, which I think you are familiar with. The cache temporarily keeps copies of memory that was used recently. Accessing data held in the cache is much faster than accessing RAM, so when the CPU accesses data it matters a great deal whether that data is in the cache. If it is, the CPU reads and writes it right there; if it is not, the CPU has to go out to main memory and fetch it. The speed difference between a cache hit and a cache miss is around 100 times. Therefore we must use the cache as much as possible: maximize cache hits and avoid cache misses.

Another term to consider is "contention". I mentioned earlier that data is locked before threads access the same data. Suppose two threads accessing the same object are running on different CPUs. To access the data, the two CPUs fight to enter the critical section; this is called contention. Contention greatly reduces CPU speed. The two CPUs must communicate with each other in order to access the data, so accessing the same data from different CPUs is very slow. Contention should be avoided as much as possible. This is the point that demands the most care in server programming.

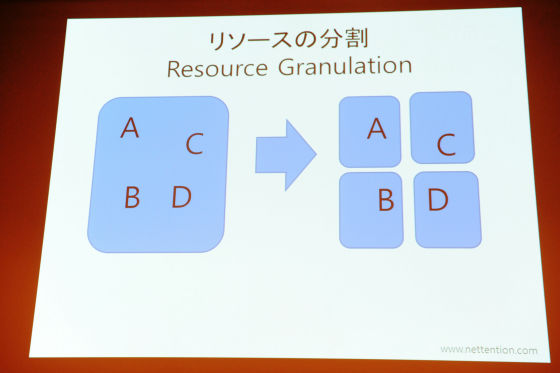

For example, there are data A, B, C, and D, all different from one another. Suppose they are all protected at once by one large lock. One thread accesses A, and a different thread accesses B. With a lock like this, the two threads cannot proceed at the same time. That wastes time, and performance becomes very poor. So you have to divide the scope of the lock, as shown on the right.

This is called resource granulation, or resource partitioning. It lets the threads work at the same time and finish earlier. A little caution is needed here: if you divide too finely, the number of locks increases, performance suffers, the program becomes complicated, and the risk of a programmer making a mistake also rises. This was brief, but it is very important material. Many books on multithreading are available, and they explain the details better than I can.
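
As a minimal sketch of the two layouts on the slide, the classes below protect the same four items with one large lock and with one lock per item. The class names `CoarseStore` and `PartitionedStore` are hypothetical, and in CPython the global interpreter lock limits real parallelism, so this shows only the locking structure, not a speedup.

```python
import threading

class CoarseStore:
    """A, B, C, D behind one big lock: only one thread may work at a time."""
    def __init__(self):
        self.lock = threading.Lock()
        self.data = {"A": 0, "B": 0, "C": 0, "D": 0}

    def add(self, key, amount):
        with self.lock:              # blocks every other key as well
            self.data[key] += amount

class PartitionedStore:
    """One lock per item: a thread on A does not block a thread on B."""
    def __init__(self):
        self.data = {"A": 0, "B": 0, "C": 0, "D": 0}
        self.locks = {key: threading.Lock() for key in self.data}

    def add(self, key, amount):
        with self.locks[key]:        # only this key is locked
            self.data[key] += amount

store = PartitionedStore()
store.locks["A"].acquire()           # pretend another thread is busy with A
store.add("B", 3)                    # still proceeds, because B has its own lock
store.locks["A"].release()
```

Going further and giving every field its own lock would multiply the number of lock operations, which is the over-partitioning risk the talk warns about.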

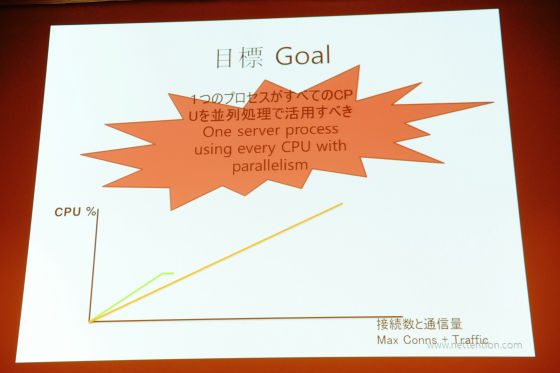

Now I will tell you about a problem I experienced. It concerns our server engine, "ProudNet". ProudNet already provided user callbacks based on a thread pool. With our engine, game developers could build multi-core game servers, but there was one problem: the engine itself did not use multiple cores internally. Because of this, there were cases where performance could not rise above a certain level as the number of simultaneous connections increased. It is possible to work around this by building the server single-threaded and running several server processes, but to solve the fundamental problem we had to make it possible to use all the CPUs within one server process.

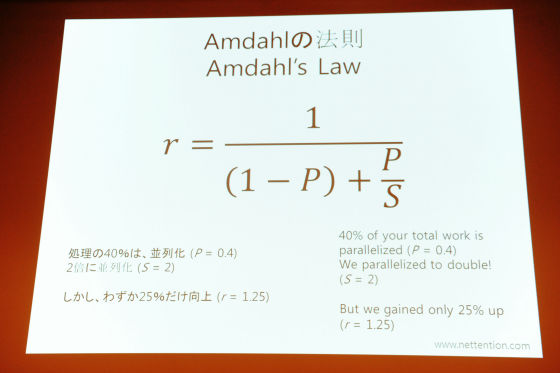

Do you all know "Amdahl's law"?

Amdahl's law is a formula for how much overall performance improves when part of a program's total processing time is parallelized across multiple cores. If 40% of the total processing time is parallelized so that it runs twice as fast, how much does performance rise? According to the formula, only 25%. The formula alone may not make this clear, so let's look at the slide.
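
The 25% figure follows directly from the usual form of Amdahl's law. The symbols here are my own notation, not from the slides: $p$ is the fraction of the run time that is parallelized, $s$ is the speedup of that fraction, and $S$ is the overall speedup.

$$
S = \frac{1}{(1 - p) + \dfrac{p}{s}}
\qquad\Longrightarrow\qquad
S = \frac{1}{(1 - 0.4) + \dfrac{0.4}{2}} = \frac{1}{0.8} = 1.25
$$

Even if the parallelized 40% became infinitely fast ($s \to \infty$), the overall speedup would still be capped at $1 / 0.6 \approx 1.67$.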

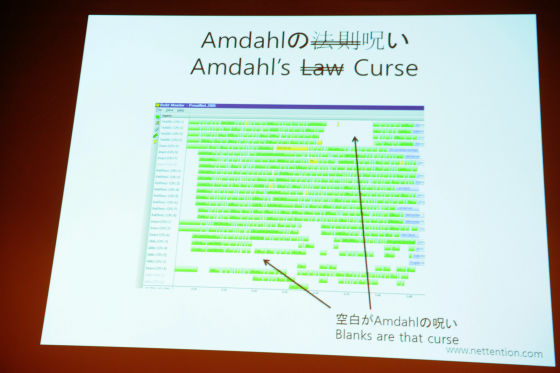

This is IncrediBuild, which you all know well. It is a wonderful program and we use it too. According to Amdahl's law, however well you parallelize, there is a limit to the overall performance improvement. That is why Amdahl's law is also called the "curse of Amdahl". The blank area you see here is the curse of Amdahl at work. We already have more than 100 client companies. Our engine is also used in "Makai Village Online". Unfortunately, some of our clients had an architecture with only one server instance per machine, so we had no choice. Without changing our engine's API, we parallelized the inside of the engine. It is easy to say, but it was by no means easy to do. In the end I spent a few months on this problem and solved it.

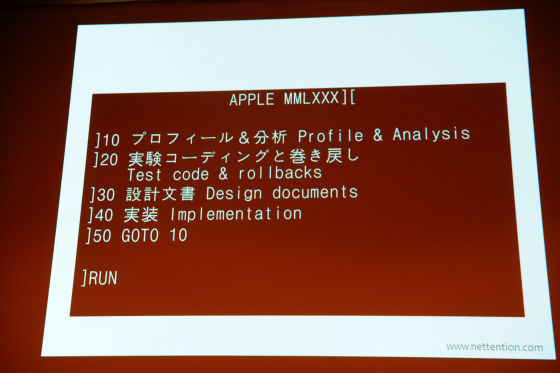

From here I will explain how we solved it. I am the same myself, but programmers never want to write slow code, do they? We instinctively aim for speed. However, performance within a program is relative: if one part of the program is fast, some other part will always be called slow. So we proceeded in this order: profiling first, then testing and test coding, then writing the design document, then implementation.

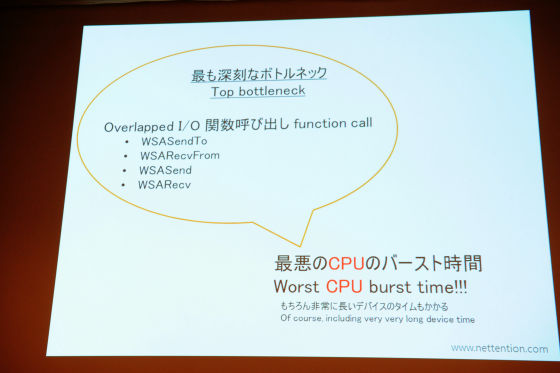

Let's first look at profiling and analysis. The bigger and more complicated a program is, the harder it is to predict which part is slow. To find out, we first built a test program in which many characters and many players move around furiously, and ran it under a code profiler. I often use profilers such as GlowCode and the one in Visual Studio; Intel's Amplifier XE also seemed quite good.

When I ran the test, I got an unexpected result. The function that took the longest was the call to Overlapped I/O in Windows networking. You probably find that odd; I thought it was strange at first too. As you know, Overlapped I/O is the best-performing method for a game server. It goes together with IOCP, and I had built the engine on IOCP. So why would it take so long? Sure that something was wrong, I tested again and analyzed the code. I suspected that a blocking call might be causing the delay, but that was not the case. Even so, this function took the longest. Why? As you all know, IOCP is the mechanism that can handle the most simultaneous connections. It was surprising to find the bottleneck there.

Why did this happen? There is a very complex mechanism between the user application, the operating system, the CPU, the motherboard, and the network device. There are transitions to and from kernel mode. There are various event handlers and polling, there is communication between the CPU and the bus, and hardware interrupts are involved as well. I think you all know what hardware interrupts are, but what happens inside the operating system and the hardware is more complicated still. You all use the socket API regularly, don't you? The socket API turns complex network programming into the half-sync/half-async pattern. However, this increases the work done inside the operating system. It is unavoidable that calling socket functions takes time, even when they are fully asynchronous. In other words, the operating system cannot solve this problem for you. It is not limited to Windows Server: Linux, FreeBSD, and other OSs share the same problem.

In any case, we had the answer: optimize the calls to the asynchronous socket functions themselves. But here was another problem. Our engine logic was already complicated, and it is not easy to optimize something like that after the fact. So I experimented to find an optimization method: I wrote a test program, and if the result was not good, I threw it away and tried again. Eventually I found the best approach and arrived at the final design document. Let me explain it now.

What should you do when a performance bottleneck appears? First you try to eliminate the bottleneck somehow, but sometimes there is no way to do that. If you cannot get rid of it, you have to shrink it. I will introduce one method for shrinking it.

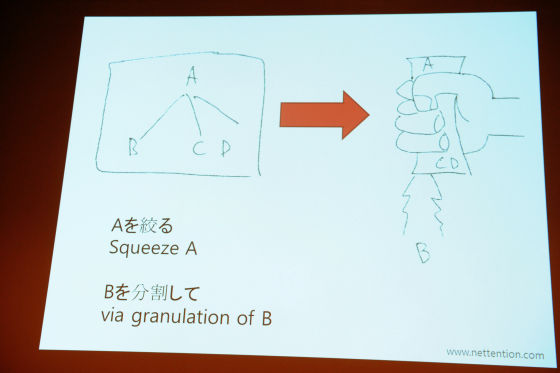

Suppose there is a routine A; you can think of it as a function. Routine A has subroutines B, C, and D, which it calls internally. Assume that A is the bottleneck.

We measure once more: which of B, C, and D takes the most processing time? Suppose it is B, and we optimize its code. But B is still slow. When there is nothing left to optimize, what should we do?

What if we do not hold the lock on the resource while B is running? A as a whole cannot be executed in parallel, but B can. Then, as the number of CPUs increases, the probability that threads sit idle waiting to execute A goes down. As a result we reduce the time spent holding A's lock. This is a very important trick: even when A itself cannot be parallelized, parallelizing one of the subroutines under A makes A faster. I call this technique "squeeze".
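
A minimal sketch of the idea, under my own assumptions: `routine_b` stands for a slow subroutine that touches no shared state, and the hypothetical `Counter` class stands for whatever A's lock protects. In CPython the global interpreter lock limits real parallelism, so the sketch shows where the lock is held, not the speedup.

```python
import threading

class Counter:
    """Shared state that routine A must protect with a lock."""
    def __init__(self):
        self.lock = threading.Lock()
        self.total = 0

def routine_b(x):
    # Slow subroutine that touches no shared state, so it needs no lock.
    return sum(i * x for i in range(1000))

def routine_a_coarse(counter, x):
    # Before: the lock is held while B runs, so callers of A are serialized.
    with counter.lock:
        counter.total += routine_b(x)

def routine_a_squeezed(counter, x):
    # After: B runs outside the lock; only the short update is locked.
    partial = routine_b(x)
    with counter.lock:
        counter.total += partial

def run(routine, inputs):
    """Run one thread per input and return the final total."""
    counter = Counter()
    threads = [threading.Thread(target=routine, args=(counter, x)) for x in inputs]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.total
```

Both versions compute the same total; the squeezed one simply keeps the locked region as short as possible.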

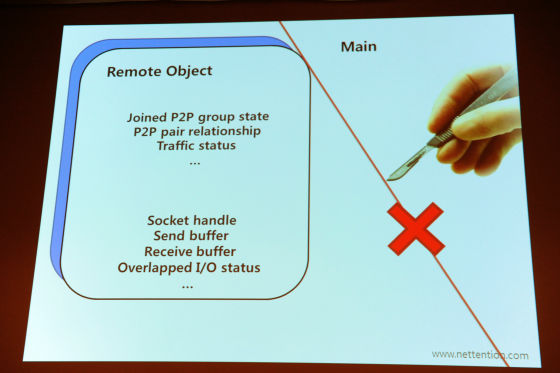

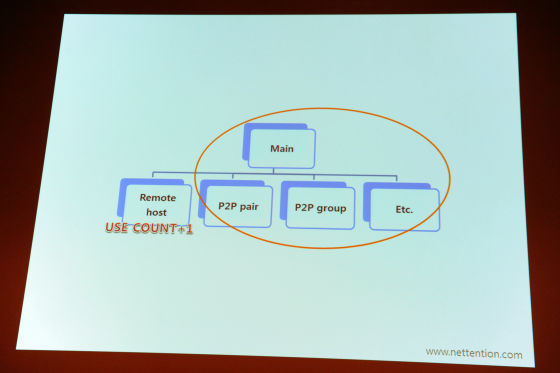

Here I narrowed A by parallelizing B. ProudNet's internal structure is as you see: first there is the main object. Attached to the main are the objects for each connected client, the remote objects, and hanging off each client are P2P pairs, P2P groups, compression information, and so on. Profiling ProudNet's code showed that most of the time was spent here: accessing the remote objects took a long time. So what should we do? We squeeze out the remote objects.

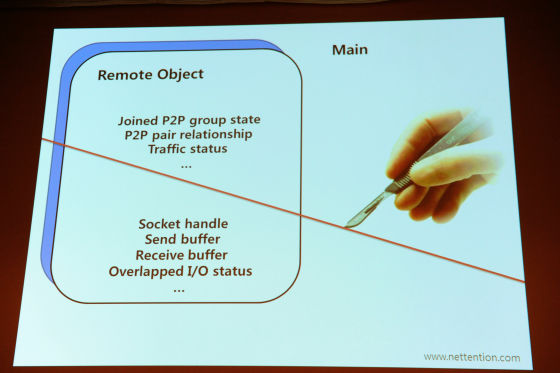

Let me explain the squeezing process. First, lock the main. The point here is that the remote object is not locked at this stage. Then unlock the main and lock the remote, and only the remote. This does not mean you can cut wherever you like. That is very dangerous. Where you make the cut is very important.

Do you know this hero?

He is very famous in Korea too: "Super Doctor K". When I first read this manga, I thought Kenshiro from "Hokuto no Ken" had become a doctor. In the manga, K performs two operations at the same time: a heart transplant for one patient and a lung transplant for another. K knew that doing both transplants at once meant fewer blood vessels had to be cut, so he transplanted the man's heart into a woman and, at the same time, transplanted the heart and lungs of a brain-dead patient into the man. In other words, he found a boundary that was easy to split along. Our case was the same: there were many blood vessels between the remote object and the other objects. Cutting there is dangerous, so we did not split off the entire remote object as a separate resource.

So where did we split? Looking for a place where only a few blood vessels connect, we found one inside the remote object. We separated the member variables that handle asynchronous send and receive from everything else, and that made the rest of the work easy.

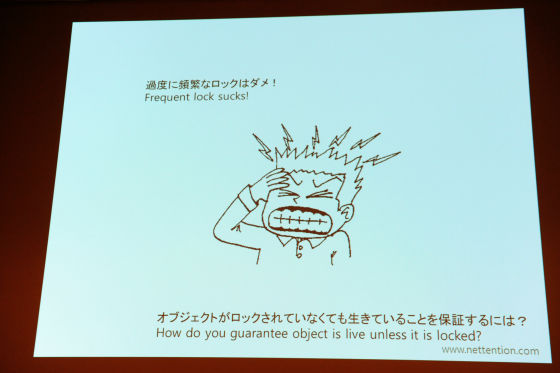

There are more things to think through before picking up the scalpel. One problem is that performance may degrade if you lock too often.

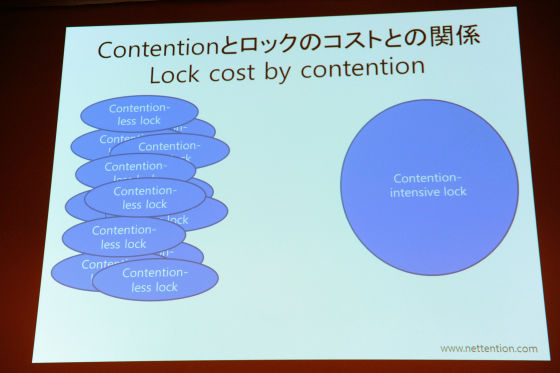

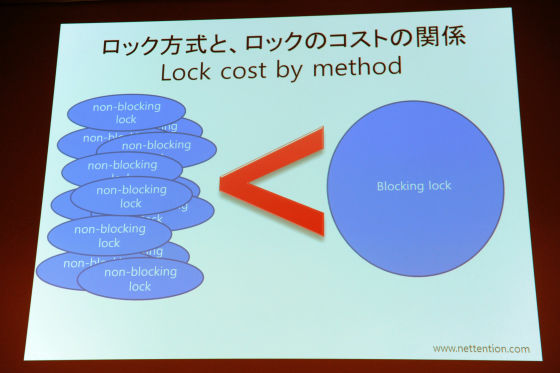

How do we solve that? And second, how do you guarantee that an object will not be destroyed while it is not locked? Let's answer these in turn. As for whether it is OK to lock frequently: generally it is not. However, there are criteria for when it is dangerous and when it is fine. Please look at this figure.

The left side is the case that locks frequently, while the right side locks only once. The difference is that on the left there is almost no contention, whereas the right side is a lock with a lot of contention. As for which takes more time, it is usually the right side. Because a lot of contention occurs on the right side, a lot of cache sharing between CPUs occurs, and a lot of context switches occur as well. Of course the left side is not always faster, but for improving parallel performance the left side is still the better choice.

Let's see another one.

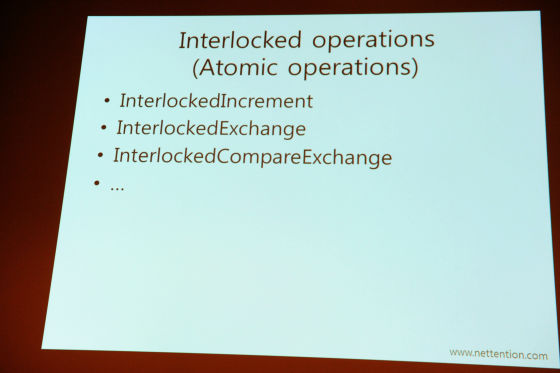

Locks come in two types: blocking and nonblocking. With a blocking lock, a thread waits in an idle state while another thread is using the resource. With a nonblocking lock, if another thread is already using the data, the lock attempt is declared failed instead of waiting, and the thread goes on to execute its next instruction without a context switch. That is, a nonblocking lock may fail, but it has the advantage that no thread context switch occurs. Using the techniques I mentioned earlier, we improved not only parallelism but also the processing performance of the CPUs as a whole, because we kept context switching and contention to a minimum.

Now let's think about how to guarantee that objects are not destroyed. First, one more term: "atomic operations". An atomic operation is a way to perform simple arithmetic and comparison operations in a thread-safe manner without using a critical section.

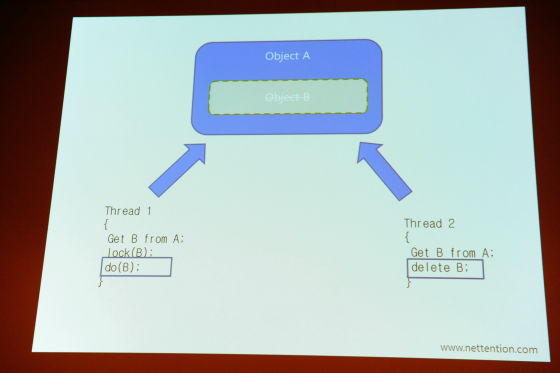

This is a hardware feature, and most CPUs support it. Examples are atomic addition and compare-and-swap, which writes a new value only if the current value equals an expected one. It may not seem like anything special, but in high-performance multicore programming I place great importance on it. Let's see why. There is an object protected by a critical section. Suppose one thread has already locked it, and then the object is destroyed by another thread. If yet another thread then accesses that object, the server crashes. And then everyone has to rush to the office by taxi in the middle of the night. As for what to do about this, please look here.

To access B, you must first obtain the address of B, and to get the address of B you must access A first. That is the hint. Here we have thread 1, thread 2, and object A, which contains another object B. Before thread 1 accesses B, it marks B as in use. Then it locks B and uses it, and when the work is finished it unlocks B. This in-use marker serves as the condition for thread 2 to destroy B: if the marker shows that another thread is using B, thread 2 does not delete it, and if it shows that nobody is using B, thread 2 can delete it. The important thing is that you must use atomic operations when handling this marker. Let's pull the explanation so far together.

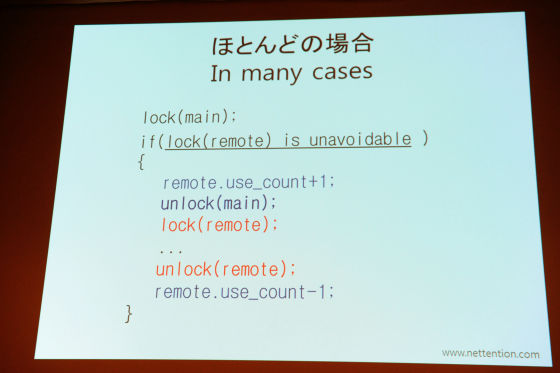

First lock the main, then find the remote you are about to use and increase its use count by one. Then unlock the main. Next lock the remote, use it, and when you have finished with it, decrease the use count by one. That was the plan: split the remote off as its own resource and squeeze the main. The plan is wonderful, but it meant reworking the engine. Nobody likes writing documents, and I truly hate it too, but writing this design document properly keeps you from losing your way. So, locking starts from the main. If the main is all you need, you are done. But if you have to access a remote object after the main, you have to do this.

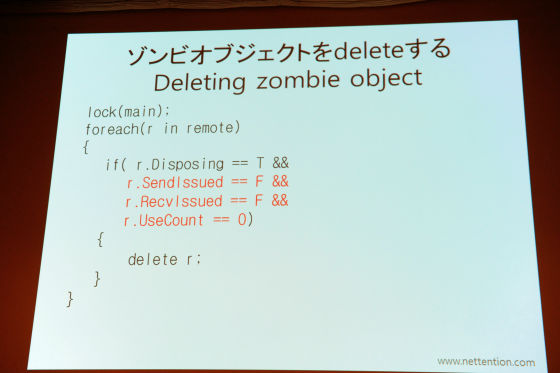

What is important here is that you release the main lock before you lock the remote. This step must be included. If you skip it, the performance of the whole server drops. Next, let's look at the routine that destroys a remote.

I mentioned earlier that the use count guarantees an object will not be destroyed while in use. Two conditions are necessary for this. First, you must destroy the object with the main locked. Second, the object must be destroyed only when its use count is zero. If the object could not be destroyed, you must try again later.

When you write a game server, you often send the same message to many different clients, don't you? In that process we have to iterate over many remote objects, and many problems arise here as well, because a lot of context switching and contention occur. So what shall we do about this?
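
The access and destruction rules described above can be sketched as follows. This is an illustration under my own assumptions, not ProudNet's code: the names `Main`, `Remote`, `send_to`, and `try_destroy` are hypothetical, and because Python has no hardware atomic integer, the small `AtomicCounter` class stands in for an atomic increment and decrement.

```python
import threading

class AtomicCounter:
    """Stand-in for a hardware atomic integer (an assumption for this sketch)."""
    def __init__(self):
        self._value = 0
        self._guard = threading.Lock()

    def add(self, delta):
        with self._guard:
            self._value += delta
            return self._value

    def get(self):
        with self._guard:
            return self._value

class Remote:
    def __init__(self):
        self.lock = threading.Lock()
        self.use_count = AtomicCounter()
        self.sent = []

class Main:
    def __init__(self):
        self.lock = threading.Lock()
        self.remotes = {}

def send_to(main, remote_id, message):
    # 1. Lock the main only long enough to find the remote and mark it in use.
    with main.lock:
        remote = main.remotes.get(remote_id)
        if remote is None:
            return False
        remote.use_count.add(1)
    # 2. The main is unlocked here; now lock only the remote.
    try:
        with remote.lock:
            remote.sent.append(message)
    finally:
        # 3. Done with the remote: drop the in-use mark.
        remote.use_count.add(-1)
    return True

def try_destroy(main, remote_id):
    # Destroy only with the main locked and only when nobody is using the remote.
    with main.lock:
        remote = main.remotes.get(remote_id)
        if remote is None:
            return True
        if remote.use_count.get() != 0:
            return False          # still in use: the caller retries later
        del main.remotes[remote_id]
        return True
```

Because the use count is only ever raised while the main is locked, a destroyer that holds the main lock and sees a count of zero knows that no other thread can start using the remote before it is removed.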

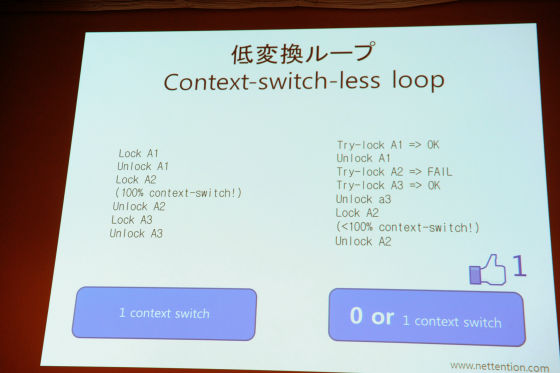

When you go to the bank, you first take a number ticket. What do you do until your turn comes? Of course you can just wait, but you can also run other errands. You can go to the toilet, or play with your cell phone, or have a cup of coffee. Then, when your turn comes, you do your business with the bank. A program is the same: if it has to wait for locks, performance will be low. So rather than simply waiting, it can do other things and improve performance. If a remote object cannot be locked, the thread gives up on it and tries to lock another remote object, taking care of other business in the meantime. Objects that could not be locked a while ago are then tried again. This way the thread never sits waiting, which means no context switch occurs, so it can process many objects one after another. I call this a low context-switch loop.

How is this low context-switch loop used? It can be used for multicast, when you send the same message to a large number of clients. ProudNet sends packets in batches every 5 milliseconds or less; this is called coalescing. It is similar to the Nagle algorithm, but it is controlled manually. It is done to eliminate silly window syndrome. It also had a nice side effect: the number of calls to the asynchronous send function I mentioned earlier goes down, so server performance improves a little.

This is somewhat difficult material, so please picture it in your mind as you listen. If you cannot follow it, you will get sleepy. Let's continue. Suppose an asynchronous send completion signal arrives. What should you do?
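
The retry loop described above can be sketched with Python's non-blocking `Lock.acquire(blocking=False)`. The names `Remote`, `multicast`, and the `max_passes` cap are hypothetical choices of mine for the illustration; a real server would do other useful work between passes rather than retry immediately.

```python
import threading
from collections import deque

class Remote:
    def __init__(self, name):
        self.name = name
        self.lock = threading.Lock()
        self.outbox = []

def multicast(remotes, message, max_passes=1000):
    """Deliver message to every remote without ever blocking on a lock.

    Returns the remotes that were still busy after max_passes passes.
    """
    pending = deque(remotes)
    passes = 0
    while pending and passes < max_passes:
        for _ in range(len(pending)):
            remote = pending.popleft()
            if remote.lock.acquire(blocking=False):   # try-lock: never waits
                try:
                    remote.outbox.append(message)
                finally:
                    remote.lock.release()
            else:
                pending.append(remote)                # busy: come back later
        passes += 1
    return list(pending)
```

For example, if one remote is locked by another thread, `multicast` delivers to all the others first and reports the busy one back to the caller instead of stalling on it.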
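
The coalescing of sends described above can be illustrated with a small buffer that is flushed on a timer. This is a sketch under my own assumptions, not ProudNet's implementation: `CoalescingSender`, `queue`, and `tick` are hypothetical names, and `send_fn` stands in for the real asynchronous send call.

```python
class CoalescingSender:
    """Collect small payloads and hand them to send_fn in one call per interval."""
    def __init__(self, send_fn, interval=0.005):
        self.send_fn = send_fn        # stand-in for the asynchronous send call
        self.interval = interval      # 5 ms, the upper bound mentioned in the talk
        self.buffer = bytearray()
        self.last_flush = 0.0

    def queue(self, payload):
        # No system call here: the bytes are only appended to the buffer.
        self.buffer += payload

    def tick(self, now):
        """Call periodically with the current time in seconds.

        Returns True if a send was issued.
        """
        if self.buffer and now - self.last_flush >= self.interval:
            self.send_fn(bytes(self.buffer))
            self.buffer.clear()
            self.last_flush = now
            return True
        return False

calls = []
sender = CoalescingSender(calls.append)
sender.queue(b"move:1;")
sender.queue(b"move:2;")
sender.tick(0.006)                    # both payloads leave in a single send call
```

Two queued messages cost one send call instead of two, which is the reduction in asynchronous send calls mentioned in the talk.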

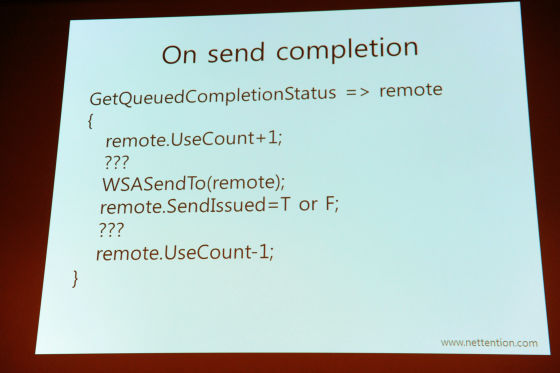

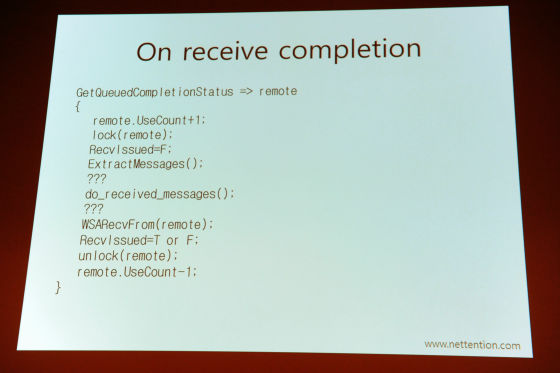

Yes, the function on the first line is the one that waits for an event from IOCP.

Then you handle the completion event. IOCP is slightly different from epoll or kqueue, isn't it? IOCP follows the proactor pattern, while epoll and kqueue follow the reactor pattern. But the goal is the same. To improve performance, we made it possible to get the address of the remote object directly from the completion status returned by GQCS (GetQueuedCompletionStatus). This routine is called more often than any other, so you must not lock the main here. If you do, server performance drops sharply. However, there is an error in this code. Can you tell me where it is? I will give you a hint: it is the blank part. Something must go where the question marks are.

Let's see the answer. Here you need to lock the remote and then unlock it, as I explained a little while ago. Now let's look at the receive completion handling, which is almost the same as for sending. This code also has an error somewhere. Something is needed at the question marks; what is it? As a hint: without these two things, the server will run for a while and then deadlock partway through.

Here is the answer. The lock and unlock steps are needed. On receiving a message, you need to lock the main and then lock the remote. Locking the main first and the remote second is what matters, and these two steps must not be left out. What happens if you reverse the order and lock the remote first? You get the thing server programmers hate second most. That's right, a deadlock. So these two steps are necessary; otherwise the server goes down partway through.
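
A minimal sketch of the ordering rule, assuming two code paths that both need the main and the remote at the same time. The names `Session`, `on_receive_completed`, and `on_disconnect_check` are hypothetical. Because every path takes the main lock before the remote lock, no thread can hold the remote while waiting for the main, so the circular wait that causes a deadlock cannot form.

```python
import threading

class Session:
    def __init__(self):
        self.main_lock = threading.Lock()
        self.remote_lock = threading.Lock()
        self.received = []

def on_receive_completed(session, message):
    # Always main first, then remote.
    with session.main_lock:
        with session.remote_lock:
            session.received.append(message)

def on_disconnect_check(session):
    # A second code path that needs both locks uses the same order.
    with session.main_lock:
        with session.remote_lock:
            return len(session.received)

def run_concurrently(session, count):
    """Start both handlers from many threads; return True if all finished."""
    threads = []
    for i in range(count):
        threads.append(threading.Thread(target=on_receive_completed, args=(session, i)))
        threads.append(threading.Thread(target=on_disconnect_check, args=(session,)))
    for t in threads:
        t.start()
    for t in threads:
        t.join(timeout=5)
    return all(not t.is_alive() for t in threads)
```

If one of the two handlers took `remote_lock` first instead, two threads could each hold one lock and wait forever for the other.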

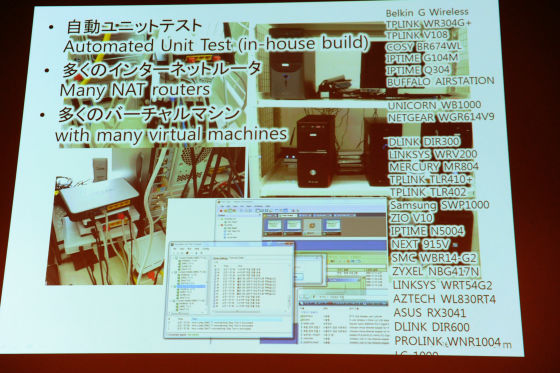

That is the end of the difficult part. Let me have a sip of water. I wrote the code according to this design document. It went smoothly. I found a few more problems to fix for performance, but I could solve them without difficulty, because we already had a lot of know-how. So the work after this coding was not difficult at all. I also checked the code profiler from time to time while coding.

There is a problem particular to an engine that runs on the server and the network: when a bug occurs it is fatal, yet such bugs are hard to see. This is true when you build a game server too, but when you build a server engine you have to test even more thoroughly. Fortunately, we had test programs we had written earlier, and we had hardware for testing. We had programs for various unit tests, and test programs for MMO games, P2P casual games, and FPS games. We also used a lot of routers, test computers, Ethernet cards, and so on; I connected five or more Ethernet ports to one computer. All the test cases ran in parallel at the same time. We made regression testing possible, and we automated various scenario tests. Then we continued development until all the tests passed.

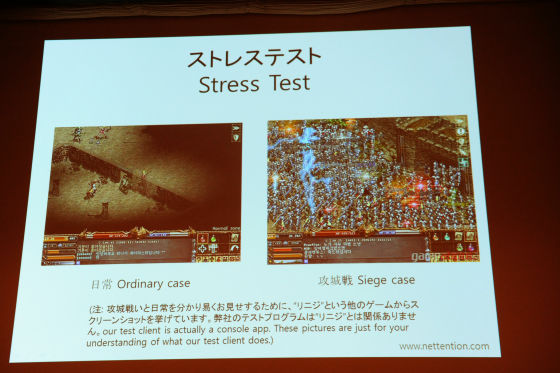

A stress test is also needed. We set two conditions for it. First, everything is processed by a single server instance. Second, all the players never stop and keep moving the whole time. The server receives each player's position and velocity from the clients and multicasts them to the other players nearby. P2P communication can also be mixed into an MMO, and our engine has that feature to lower the server load, but we did not use it here, because with it you cannot tell how much load the server itself is carrying. The server machine was a Xeon system with 8 cores and two CPUs.

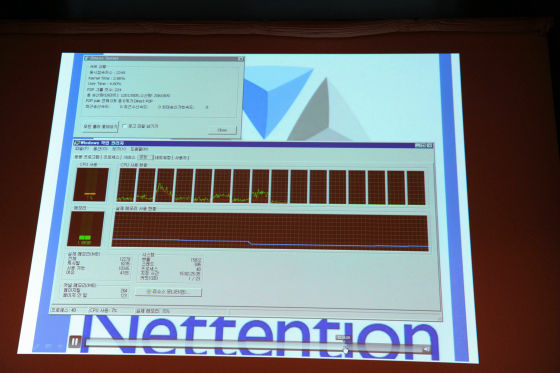

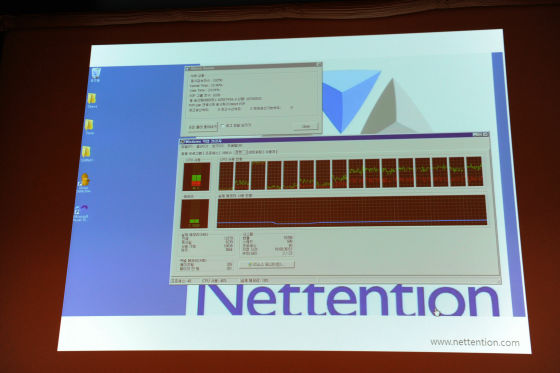

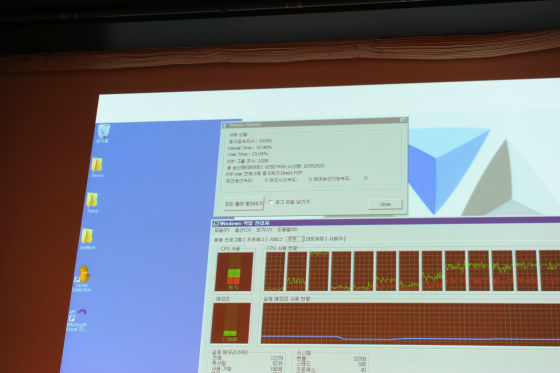

Let's compare before and after. Before, a game server process using our engine was already struggling at only 3,000 simultaneous connections, even though the utilization of each CPU was not very high. Let's take a look.

The number of concurrent users is rising, but CPU utilization stays low. That is why we did this work. Now let's look at the "after" case.

The number of concurrent users has increased. This is a single game server instance. You can also see CPU utilization here. In this MMORPG the players keep walking around. The number of concurrent users keeps climbing and, as you can see, simultaneous connections have exceeded 10,000.

Yet there is no lag. It is one server instance. As you can see, CPU utilization is roughly half. Do you notice something strange here? Only one CPU has very high utilization. This is because device interrupt handling is done in the kernel, and only one CPU can do it. This machine consists of two NUMA nodes, so to use its full performance you need two server processes. That means up to 20,000 simultaneous connections are possible on this computer. We took down the enemy general. But that is not the end. The deputy general has now become the general, hasn't he? In other words, what used to be the second-biggest problem has become the biggest. So you have to take down this new leader as well. By clearing problems one after another in this way, I raised the performance of the server.

I have a confession to make: when developing a game server, the maximum number of concurrent users one server can hold is not actually that important. In most cases it can be solved by adding servers. Still, we have to assume the worst situation. Everyone knows that developing an online game costs a lot of money. Having finally built it and then being unable to provide the service because the game server is unstable is the situation you must avoid. So when you build a game server you must always assume the worst case.

The second point: conversely, what happens if one game server machine can handle a large number of concurrent users? It means the game server has capacity left over to do other jobs. In other words, programmers can put more work into the game server. One advantage of giving the game server more roles is that game logic can be moved from the client to the server, which protects it from hackers.

Now for the summary. Scaling out across multiple cores is very difficult, and you have to approach it carefully. Even small mistakes are not allowed. Network and server programs are heavily involved with device I/O, and because communication is one-to-many there is a lot of interaction. It is quite likely that a client does not map one-to-one to a single object but involves several objects, and there is a great deal of device I/O. There are various tools for multicore programming, such as parallelization libraries and parallel tools, but their usefulness drops in this setting. In order to maximize the performance of the server, you need to use every corner of the hardware, understand the operating system, and build the game server accordingly.

Let me wrap up. On the game servers you are building, context switching should be minimized. Contention must also be reduced. Calls to kernel APIs should be reduced as much as possible. And the cache hit rate must be taken into account. Unfortunately, in multicore programming there is no perfect royal road; you have to learn through practice. Please study multicore programming day to day, and pay close attention to the situations you run into during development. And study hard. That way each of you can build your own strategy. I would be glad if today's talk serves as a reference, even a little. That is all. Thank you very much.

We will now take questions. Please go ahead. A microphone will be brought to you, so please speak into the microphone.

Question:

Among the things you talked about a moment ago was broadcasting. I have also written a socket server once, though only at a test level. I was unsure how to broadcast quickly to ten thousand users. At the time, I thought of creating one thread per 100 clients and broadcasting from those, for example 100 threads each handling 100 objects. Is that a bad approach from a performance point of view?

Interpretation:

First, what matters is whether those 100 threads spend their time in device time or in CPU time. If all 100 threads are using CPU time, an enormous amount of context switching will occur, and performance will be ruined. But if the 100 threads have a legitimate reason to be in device time, it does not matter that there are 100 of them. So I would need to hear a little more about how you broadcast on 100 threads, but the basic guideline is whether these 100 threads will be using CPU time at the same time. There is also a principle for CPU time: the number of threads running on CPU time should basically not exceed the number of CPUs. And normally you do not have 100 CPUs. So first check whether it is CPU time or device time. Then, if it is CPU time, it is important to reduce the number of threads. Performance improves when the number of threads is small.
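The sizing rule in this answer can be sketched as follows: instead of one thread per 100 clients, cap the worker count at the number of CPUs and give each worker a contiguous slice of the client list. This is an illustration, not code from the talk; `partition`, `broadcast`, and the `send` callback are hypothetical names. Note also that standard CPython threads do not run Python code in parallel, so the sketch shows only how to size and partition the work, not a real speedup.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def partition(items, parts):
    """Split items into at most `parts` contiguous chunks of near-equal size."""
    parts = max(1, min(parts, len(items)))
    size, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def broadcast(clients, message, send, workers=None):
    """Send `message` to every client using no more workers than CPUs.

    `send(client, message)` is supplied by the caller. Returns the
    number of clients processed.
    """
    workers = workers or os.cpu_count() or 1

    def send_chunk(chunk):
        for client in chunk:
            send(client, message)
        return len(chunk)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(send_chunk, partition(clients, workers)))
```

With 10,000 clients on an 8-CPU machine this creates 8 workers handling 1,250 clients each, rather than 100 threads competing for the same 8 CPUs.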

Question:

I think this applies to multicore programs in general, not only to servers. You said that the key point is dividing the processing into parts to squeeze and parts not to squeeze. When you worked out how to divide it, was there anything in particular that you did?

Interpretation:

Well... First I used a code profiler to look for the slow parts, the parts that take a long time to execute. Then I looked for the parts where parallelism drops. Programs like Intel's Parallel Studio have a feature for finding parallelism. Since we did not know how to use it at the time, we used the Visual Studio profiler and GlowCode, first looking for places where bottlenecks were likely. We analyzed which regions held locks, which did not, and which parts took a long time to execute. Then, on a sketchbook... I drew a diagram on a large sheet of paper. I analyzed which routines held locks and for how long, and which routines ran unlocked and for how long. Then I drew a map on paper and worked out what squeezing I had to do. The squeeze technique itself did not come from a book or from the Internet; I found it along the way. Similar methods may exist elsewhere, but this squeeze is a technique I worked out during development.
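The kind of measurement described here, how long each routine waits for a lock and how long it holds it, can also be collected by hand when a profiler is not available. The sketch below is an assumption-laden illustration, not the speaker's tooling: `TimedLock` is a hypothetical wrapper around `threading.Lock` that accumulates wait time, hold time, and acquisition count per named lock.

```python
import threading
import time


class TimedLock:
    """A lock that records how long callers wait for it and hold it."""

    def __init__(self, name, stats):
        self._lock = threading.Lock()
        self._name = name
        self._stats = stats
        self._acquired_at = 0.0
        # Create the entry up front so later updates only mutate it.
        stats[name] = {"wait": 0.0, "held": 0.0, "count": 0}

    def __enter__(self):
        requested_at = time.perf_counter()
        self._lock.acquire()
        self._acquired_at = time.perf_counter()
        # Updated while holding the lock, so updates are serialized.
        self._stats[self._name]["wait"] += self._acquired_at - requested_at
        return self

    def __exit__(self, exc_type, exc, tb):
        entry = self._stats[self._name]
        entry["held"] += time.perf_counter() - self._acquired_at
        entry["count"] += 1
        self._lock.release()
        return False  # never swallow exceptions
```

Wrapping each suspicious region in `with TimedLock(...)` and dumping the statistics after a load test gives the raw numbers for a map like the one drawn on paper: a large `held` total points at a lock that is held too long, and a large `wait` total points at contention.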

Question:

Thank you very much.

Moderator:

Do you have any other questions?

Question:

That was interesting. I understand ProudNet to be a server framework that runs on Windows. As you mentioned in the talk, in the past there was certainly a restriction that only one CPU core could handle network interrupts. As we increase the degree of parallelism, I think the limited number of cores that can handle network I/O probably becomes a bottleneck in itself. I also understand that Linux, depending on the version, probably already solves this problem. Is there still some merit in building a server system on Windows? I would like to hear whether we should keep using Windows over the long term, and whether there are technical prospects for it.

Interpretation:

Thank you for the wonderful question. Linux already provides this; it is called teaming, that is, network interface teaming. Linux has it, and it already keeps the load from piling up on one CPU. Windows Server 2012 is currently in beta, and there is a rumor that this teaming technology will be incorporated into it. I am also very interested in what form it will take. Teaming is not supported in the current version of Windows, so there is the problem that usage concentrates on one CPU. How do you solve it? One way is to install several network cards in the server. Then go into the Windows control panel and set the CPU that each device driver can use. If you then make the game server use multihomed networking, the processing can be distributed across several CPUs. But this is a bit of a trick, isn't it? You cannot expect it to work as well as teaming.
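On the application side, the multihomed setup described above amounts to listening on each network card's local address separately. A minimal sketch, assuming IPv4 TCP and a hypothetical helper name `open_listeners`; assigning a CPU to each card's driver is an operating-system setting and is not shown here.

```python
import socket


def open_listeners(addresses, port, backlog=128):
    """Open one listening TCP socket per local address (one per network card).

    `addresses` is a list of local IPv4 addresses, each assumed to belong
    to a different card. If any bind fails, every socket opened so far is
    closed before the error is re-raised.
    """
    listeners = []
    try:
        for address in addresses:
            sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
            listeners.append(sock)  # track first so cleanup covers it
            sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
            sock.bind((address, port))
            sock.listen(backlog)
    except OSError:
        for sock in listeners:
            sock.close()
        raise
    return listeners
```

Each returned socket can then be served by its own accept loop, so traffic arriving on different cards is handled separately.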

As for Linux, we are currently building a Linux version. In the past in Korea, game servers were almost always built on Windows. There were several reasons for choosing Windows: first, it was easy to share source code with the game client, and second, StarCraft could be run on the game server. But times have changed. There are now many iPhone and Android users, so some people develop game servers with Xcode. Xcode's foundation is FreeBSD, so a game made with Xcode will run on a FreeBSD base. You may also use Android and so on. For this reason it is no longer necessary to stick to Windows, and more people are building games on Linux. There are also cloud services such as Amazon's and Korea's LGU Cloud, and these are Linux-based. Linux is also very cheap, half the price of Windows, so some people use Linux because of the maintenance cost. However, whether you use Windows or Linux, there is something more important than performance: how well you can handle it. I think you should choose the one that is easier for you to use. No matter how good the server is, if its stability is shaky it is out of the question. So the smarter choice is to pick the platform, Windows or Linux, on which it is easier for you to get a bug under control as soon as it occurs. Does that answer your question?

Question:

Thank you very much.

Moderator:

We are out of time, so I would like to end here. Thank you very much.
