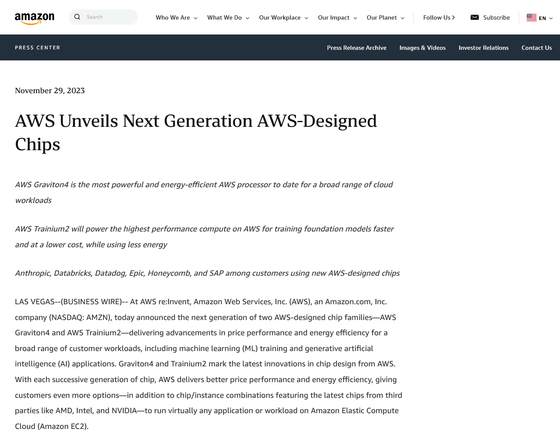

Amazon announces the 'Graviton4' processor and the 'Trainium2' AI training chip for AWS

Amazon unveiled both chips at an AWS conference.

AWS Unveils Next Generation AWS-Designed Chips

https://press.aboutamazon.com/2023/11/aws-unveils-next-generation-aws-designed-chips

Join the preview for new memory-optimized, AWS Graviton4-powered Amazon EC2 instances (R8g) | AWS News Blog

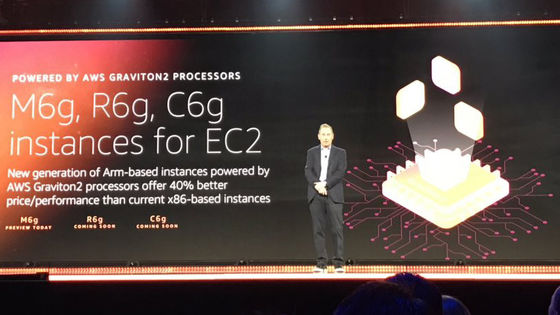

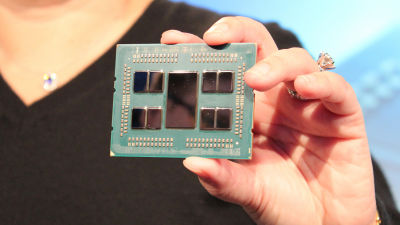

AWS offers more than 150 Graviton-based Amazon EC2 instance types, backed by more than 2 million Graviton processors, and more than 50,000 customers, including F1, Nielsen, Pinterest, and Stripe, use them to run a wide variety of workloads.

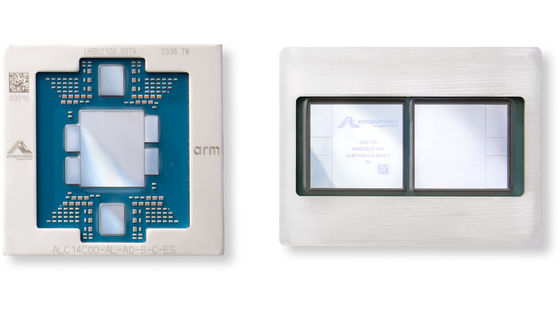

As customers move larger in-memory databases and analytics workloads to the cloud, their performance requirements keep rising. Graviton4, the successor to the Graviton3 processor announced in November 2021, is designed to meet those requirements.

Compared with Graviton3, Graviton4 has 50% more cores and 75% more memory bandwidth, delivering up to 30% better compute performance. AWS says it offers the best price performance and energy efficiency for a broad range of workloads running on Amazon EC2.

Graviton4 powers R8g instances, a new memory-optimized instance type that improves performance for memory-intensive workloads such as high-performance databases, in-memory caches, and big data analytics. Graviton4 also improves security by fully encrypting all high-speed physical hardware interfaces.

R8g instances offer up to 3x more vCPUs and 3x more memory than current R7g instances, which should shorten time to results and lower the overall cost of running workloads.

Graviton4-based R8g instances are in preview and are expected to be generally available in the coming months.

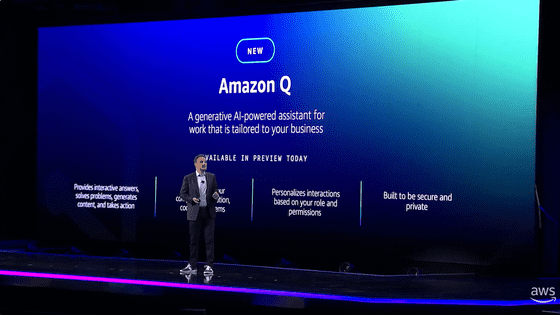

Trainium2, meanwhile, is the latest generation of AWS's 'Trainium' machine learning chip and delivers up to 4x faster training than the first-generation Trainium. It will be offered in Amazon EC2 Trn2 instances, each containing 16 Trainium2 chips. Trn2 instances are intended to scale up to 100,000 chips in EC2 UltraClusters, interconnected with AWS Elastic Fabric Adapter (EFA) petabit-scale networking, to deliver supercomputer-class performance of up to 65 exaflops. According to AWS, this scale can cut the time needed to train a large language model with 300 billion parameters from months to weeks.
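The scale figures above can be sanity-checked with simple arithmetic. The short Python sketch below uses only the numbers quoted in the announcement (16 chips per Trn2 instance, 100,000 chips per UltraCluster, 65 exaflops peak). The per-chip figure is an illustrative assumption: it supposes the 65-exaflop peak divides evenly across all chips, and the announcement does not state the numeric precision at which that peak is measured.

```python
# Figures quoted by AWS for Trn2 in the announcement.
CHIPS_PER_TRN2_INSTANCE = 16
MAX_CHIPS_PER_ULTRACLUSTER = 100_000
PEAK_CLUSTER_EXAFLOPS = 65

# Number of Trn2 instances needed to reach the 100,000-chip ceiling.
instances = MAX_CHIPS_PER_ULTRACLUSTER // CHIPS_PER_TRN2_INSTANCE

# Implied peak compute per chip in petaflops, ASSUMING the 65 exaflops
# is spread evenly over all 100,000 chips (not an AWS-published figure).
petaflops_per_chip = PEAK_CLUSTER_EXAFLOPS * 1_000 / MAX_CHIPS_PER_ULTRACLUSTER

print(f"Trn2 instances: {instances}")
print(f"Implied peak per chip: {petaflops_per_chip:.2f} PFLOPS")
```

With these inputs the script prints 6250 instances and about 0.65 PFLOPS per chip, which gives a rough sense of how many Trn2 instances a full-size UltraCluster would involve.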

Trainium2 is also designed to train foundation models and large language models in a fraction of the time while improving energy efficiency by up to 2x.

Availability of Trainium2-based Trn2 instances has not been announced.

in Hardware, Posted by logc_nt