Google announces "Google Lens," a new feature that displays information about whatever the camera is pointed at and can also translate text and recognize Wi-Fi passwords

"Google Lens," a new feature that recognizes what is in front of the camera when it is pointed at a subject and tells the user what it is, was announced at Google I/O 2017.

What is Google Lens like? The explanation starts at around 47 minutes 50 seconds of the following video, and Google Lens in actual use can be seen from around 1 hour 1 minute 45 seconds.

Google I/O '17: Google Keynote - YouTube

"Google Lens" is the new feature announced at Google I/O 2017. It will first be built into Google Assistant and Google Photos.

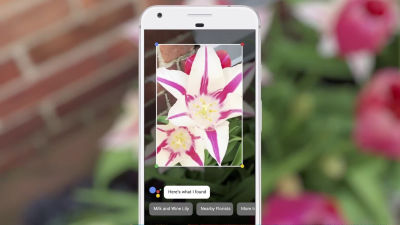

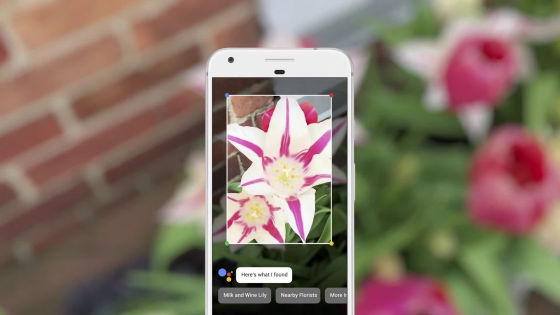

For example, when a smartphone camera is pointed at a flower, it recognizes the shape of the flower...

...and, under the message "This is what I found," shows candidates for what the subject might be.

Furthermore, when the camera is pointed at a Wi-Fi network name and password...

...it automatically recognizes both. At the same time, the options "NO THANKS," "COPY TEXT," and "CONNECT" are displayed, so select "CONNECT."

The phone then connects to the Wi-Fi network in one step. There is no longer any need to type the password in while looking back and forth between it and the smartphone's display.

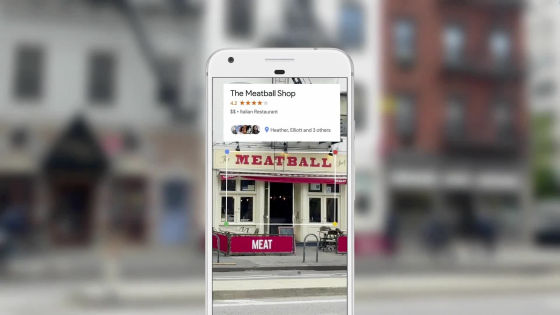

In addition, even for a shop you happen to pass while walking down the street, the store's information appears on the display just by pointing the camera at it.

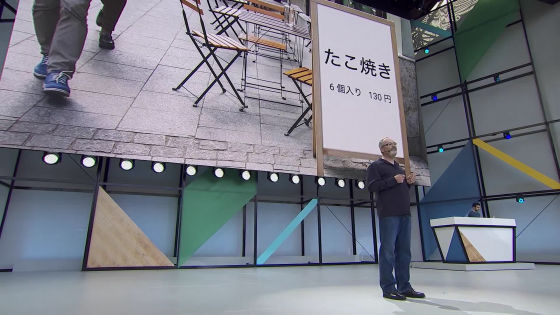

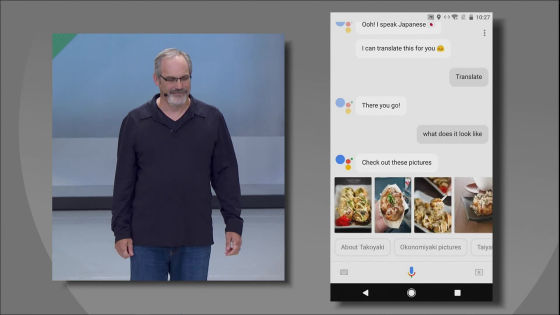

Google Lens was also demonstrated live on stage. For example, suppose a traveler from the US is walking around Osaka and finds a sign reading "Takoyaki 6 pieces 130 yen."

When the camera is pointed at the sign, the message "I can speak Japanese! Let's translate" appears, so tap "Translate this."

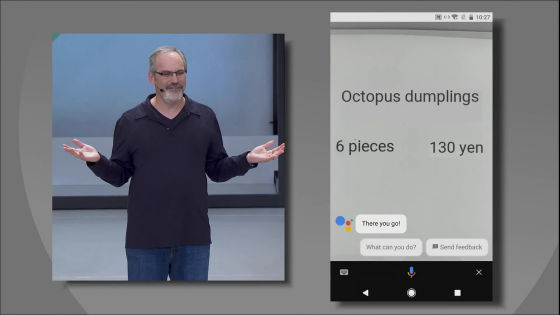

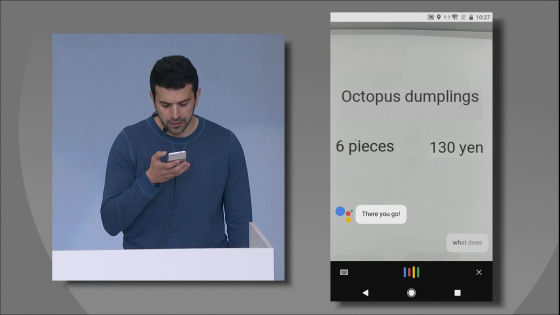

The display then changes to "Octopus dumplings 6 pieces 130 yen."

When the user asks Google Assistant "What does that look like?"...

...it displays multiple results matching the word "takoyaki." The user never typed the word "takoyaki"; the smartphone worked out "what it is" visually and carried that into the conversation.

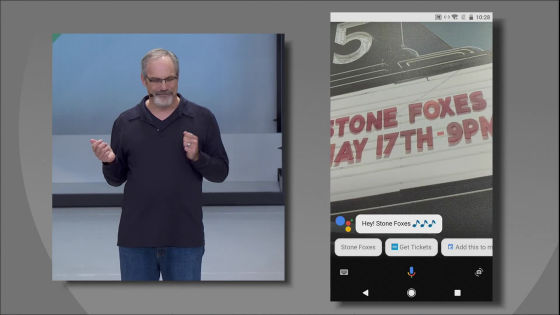

Google Lens also recognizes "what is captured by the camera" and suggests tasks that fit the subject. For example, if you point the camera at an event notice, it offers suggestions such as "getting a ticket" or "registering the event in a calendar."

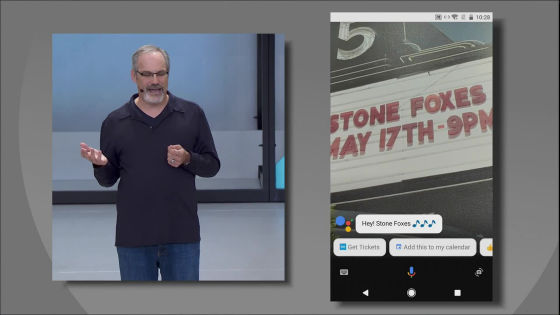

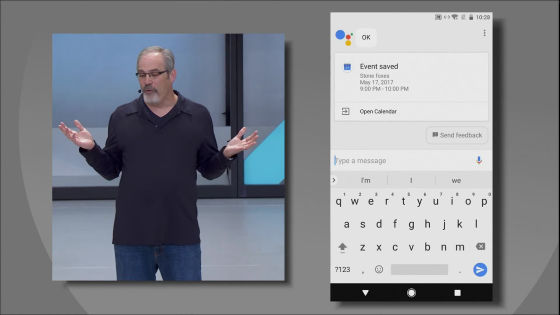

Tap "Register schedule to calendar"...

...and the event is added to the calendar like this, with no effort on the user's part.

Google Lens will initially be built into Google Assistant and Google Photos, and will come to other products later. Google did not say when the feature will be released, but it appears likely to become available within a few months.
