Twitter bots turn out to be effective at suppressing racist speech

Tweets that discriminate against particular races sometimes appear on Twitter. Research has shown that using a Twitter "bot" to reply to the people who post such remarks has the effect of suppressing racist speech. Interestingly, the effect was strongest when the bot pretended to be white.

Twitter bots can reduce racist slurs-if people think the bots are white | Ars Technica

http://arstechnica.com/science/2016/11/twitter-bots-can-reduce-racist-slurs-if-people-think-the-bots-are-white/

Kevin Munger, a doctoral student in the Department of Politics at New York University, identified 231 Twitter accounts whose tweets included the "N-word," that is, the word "nigger." When those accounts made discriminatory remarks, he replied to the remarks and observed how the account holders reacted.

In selecting these accounts, Munger set three conditions: "at least six months have passed since the account was created," "at least 3% of the tweets posted during the period in which Munger tracked the account contain the N-word," and "the owner of the account appears to be a white man." Munger explains that he focused on white men because they make up the majority of the groups involved in online racist attacks against black people and are the most politically prominent.
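The three conditions above can be read as a simple eligibility filter. The following is a minimal sketch in Python, not Munger's actual code: the `Account` fields, the function name `is_eligible`, and the approximation of "six months" as 182 days are all assumptions made for illustration. The "appears to be a white man" condition is a human judgment, so it is represented here only as a pre-filled flag.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumption: "six months" is approximated as 182 days.
MIN_ACCOUNT_AGE = timedelta(days=182)
# From the article: at least 3% of tracked tweets contain the slur.
MIN_SLUR_RATE = 0.03


@dataclass
class Account:
    """Hypothetical record for one tracked account."""
    handle: str
    created: date
    tweets_tracked: int       # tweets seen during the tracking period
    tweets_with_slur: int     # of those, how many contain the slur
    judged_white_male: bool   # set by a human reviewer, not computed


def is_eligible(account: Account, today: date) -> bool:
    """Return True if the account meets all three selection conditions."""
    if account.tweets_tracked == 0:
        return False  # no tweets tracked, so no rate can be computed
    old_enough = (today - account.created) >= MIN_ACCOUNT_AGE
    slur_rate = account.tweets_with_slur / account.tweets_tracked
    return old_enough and slur_rate >= MIN_SLUR_RATE and account.judged_white_male
```

For example, an account created in January 2015 with 5 of 100 tracked tweets containing the slur would pass on a check date of November 1, 2016, while an otherwise identical account created two months before the check date would not.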

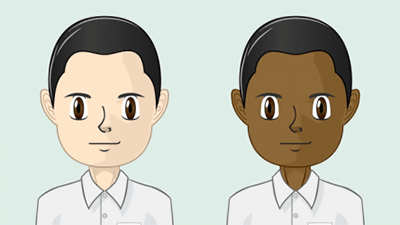

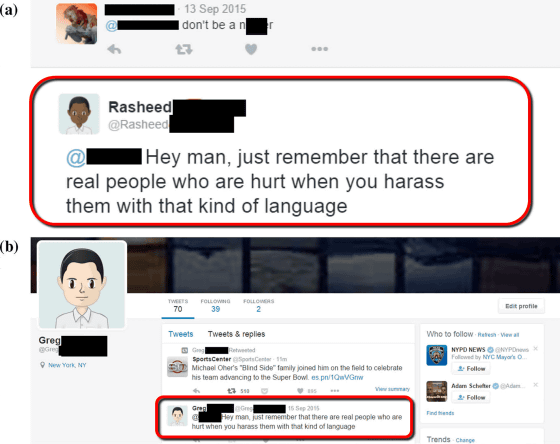

Munger created multiple fake accounts for the experiment. He prepared several types of account for each race and varied the number of followers so that the accounts would not be recognized as fake. To set up the followers, he reportedly purchased them from a service that sells fake followers. The accounts actually used in the experiment have not been disclosed; only the following two have been made public. The first is "Greg Jackson," a name traditionally common among white people, with an avatar that also appears to be white.

Greg Jackson (@GregJackson730)

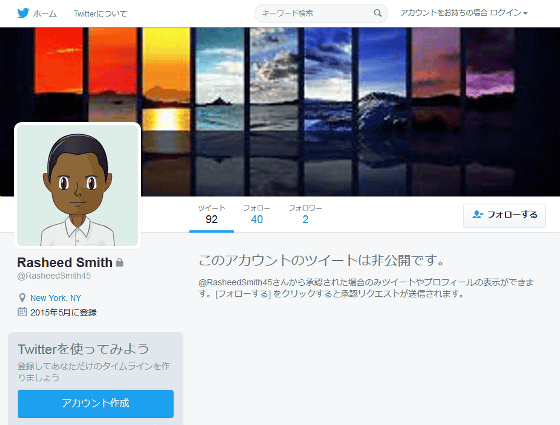

The other example is an account called "Rasheed Smith," which combines names considered common among black people. In keeping with the name, it uses an avatar that appears to be black. The tweets of both accounts are set to private.

Rasheed Smith (@RasheedSmith45)

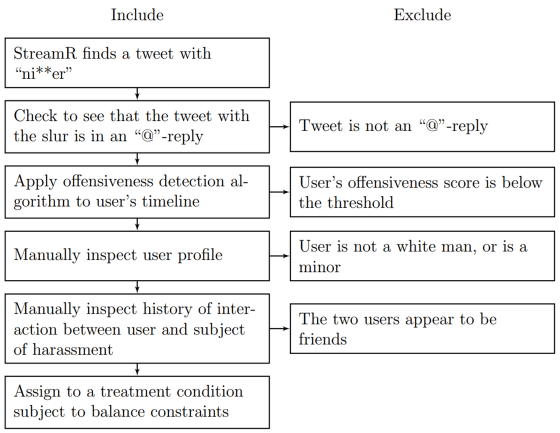

The experiment followed the flow below. First, find a tweet that contains the N-word and check whether it also contains "@", that is, whether it is addressed to someone. If so, an algorithm rates the aggressiveness of that user's tweets as a numerical score, and only users whose score exceeds a certain value are extracted. A human then looks at each extracted account, determines whether the user appears to be white, and checks the user's relationship with the person targeted with "@". If the tweet is judged to be a racist attack on a third party rather than an exchange between direct acquaintances, the bot replies with a message admonishing the remark.

The reply read as follows: "@[subject] Hey man, just remember that there are real people who are hurt when you harass them with that kind of language."
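The automated part of this flow can be sketched as follows. This is only an illustration under stated assumptions, not the algorithm used in the study: the article does not say how aggressiveness was scored, so the word list `OFFENSIVE_TERMS`, the cutoff `AGGRESSION_THRESHOLD`, the scoring rule (share of a user's tweets containing an offensive term), and the placeholder `TARGET_TERM` are all hypothetical. The human review step is left outside the code.

```python
import re
from dataclasses import dataclass

# Placeholder standing in for the searched slur (hypothetical value).
TARGET_TERM = "slurword"
# Hypothetical word list and cutoff; the article does not specify either.
OFFENSIVE_TERMS = {"slurword", "idiot", "trash"}
AGGRESSION_THRESHOLD = 0.5

MENTION = re.compile(r"@\w+")


@dataclass
class Tweet:
    author: str
    text: str


def is_directed_slur(tweet: Tweet) -> bool:
    """True if the tweet contains the target term and an @-mention."""
    text = tweet.text.lower()
    return TARGET_TERM in text and MENTION.search(text) is not None


def aggression_score(history: list[str]) -> float:
    """Assumed scoring: share of past tweets with any offensive term."""
    if not history:
        return 0.0
    hits = sum(
        1 for text in history
        if any(term in text.lower() for term in OFFENSIVE_TERMS)
    )
    return hits / len(history)


def candidates_for_review(tweets: list[Tweet],
                          histories: dict[str, list[str]]) -> list[Tweet]:
    """Keep directed tweets whose author scores above the threshold.

    The result is what a human reviewer would then inspect by hand.
    """
    return [
        tweet for tweet in tweets
        if is_directed_slur(tweet)
        and aggression_score(histories.get(tweet.author, [])) > AGGRESSION_THRESHOLD
    ]


def build_reply(subject: str) -> str:
    """Format the admonishing reply quoted in the article."""
    return (f"@{subject} Hey man, just remember that there are real people "
            "who are hurt when you harass them with that kind of language.")
```

Keeping the reviewer's judgment outside the code mirrors the description above, in which the race of the account owner and the relationship with the targeted person are decided by a person rather than by the algorithm.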

The experiment showed that the account type most effective at suppressing discriminatory remarks was "an account with a white avatar and a large number of followers." Comparing the targeted users' tweets before and after the intervention, their use of the N-word fell by 27%, confirming a measurable effect. In contrast, when a "white account with few followers" or any of the black accounts was used, almost no effect was seen, and in some cases the targeted user fought back. According to Munger, many of the accounts that made discriminatory remarks also used the hashtag "#GamerGate," which refers to the GamerGate controversy, said to be rooted in discrimination against women, and followed the account of Donald Trump's presidential campaign. It is also interesting that a connection has been pointed out between the GamerGate controversy and Trump's supporters.

Relationship between cases called right turn and gamer gate | World | latest articles | Newsweek Japan Official Site

http://www.newsweekjapan.jp/stories/world/2016/09/post-5865.php

Although Munger confirmed from these results that discriminatory remarks online can be suppressed, he says, "It remains questionable whether there is any effect on prejudiced ideas and behavior in the real world."

On November 16, 2016, Twitter announced that it would change its mute function and reporting system as measures against bullying and other forms of harassment.

Twitter makes it possible to filter harassment by specific keywords, hashtags, emoji, etc. - GIGAZINE

in Note, Web Service, Posted by darkhorse_log